Jiaole Wang

Reconfigurable Tendon-Driven Robots: Eliminating Inter-segmental Coupling via Independently Lockable Joints

Jul 23, 2025

Abstract:With a slender redundant body, the tendon-driven robot (TDR) has a large workspace and great maneuverability while working in complex environments. TDR comprises multiple independently controlled robot segments, each with a set of driving tendons. While increasing the number of robot segments enhances dexterity and expands the workspace, this structural expansion also introduces intensified inter-segmental coupling. Therefore, achieving precise TDR control requires more complex models and additional motors. This paper presents a reconfigurable tendon-driven robot (RTR) equipped with innovative lockable joints. Each joint's state (locked/free) can be individually controlled through a pair of antagonistic tendons, and its structure eliminates the need for a continuous power supply to maintain the state. Operators can selectively actuate the targeted robot segments, and this scheme fundamentally eliminates the inter-segmental coupling, thereby avoiding the requirement for complex coordinated control between segments. The workspace of RTR has been simulated and compared with traditional TDRs' workspace, and RTR's advantages are further revealed. The kinematics and statics models of the RTR have been derived and validation experiments have been conducted. Demonstrations have been performed using a seven-joint RTR prototype to show its reconfigurability and moving ability in complex environments with an actuator pack comprising only six motors.

Automatic Tissue Traction with Haptics-Enabled Forceps for Minimally Invasive Surgery

Jan 25, 2024

Abstract:A common limitation of autonomous tissue manipulation in robotic minimally invasive surgery (MIS) is the absence of force sensing and control at the tool level. Recently, our team has developed haptics-enabled forceps that can simultaneously measure the grasping and pulling forces during tissue manipulation. Based on this design, here we further present a method to automate tissue traction with controlled grasping and pulling forces. Specifically, the grasping stage relies on a controlled grasping force, while the pulling stage is under the guidance of a controlled pulling force. Notably, during the pulling process, the simultaneous control of both grasping and pulling forces is also enabled for more precise tissue traction, achieved through force decoupling. The force controller is built upon a static model of tissue manipulation, considering the interaction between the haptics-enabled forceps and soft tissue. The efficacy of this force control approach is validated through a series of experiments comparing targeted, estimated, and actual reference forces. To verify the feasibility of the proposed method in surgical applications, various tissue resections are conducted on ex vivo tissues employing a dual-arm robotic setup. Finally, we discuss the benefits of multi-force control in tissue traction, evidenced through comparative analyses of various ex vivo tissue resections. The results affirm the feasibility of implementing automatic tissue traction using micro-sized forceps with multi-force control, suggesting its potential to promote autonomous MIS. A video demonstrating the experiments can be found at https://youtu.be/8fe8o8IFrjE.

Minimally-intrusive Navigation in Dense Crowds with Integrated Macro and Micro-level Dynamics

Dec 28, 2023

Abstract:In mobile robot navigation, despite advancements, the generation of optimal paths often disrupts pedestrian areas. To tackle this, we propose three key contributions to improve human-robot coexistence in shared spaces. Firstly, we have established a comprehensive framework to understand disturbances at individual and flow levels. Our framework provides specialized computational strategies for in-depth studies of human-robot interactions from both micro and macro perspectives. By employing novel penalty terms, namely Flow Disturbance Penalty (FDP) and Individual Disturbance Penalty (IDP), our framework facilitates a more nuanced assessment and analysis of the robot navigation's impact on pedestrians. Secondly, we introduce an innovative sampling-based navigation system that adeptly integrates a suite of safety measures with the predictability of robotic movements. This system not only accounts for traditional factors such as trajectory length and travel time but also actively incorporates pedestrian awareness. Our navigation system aims to minimize disturbances and promote harmonious coexistence by considering safety protocols, trajectory clarity, and pedestrian engagement. Lastly, we validate our algorithm's effectiveness and real-time performance through simulations and real-world tests, demonstrating its ability to navigate with minimal pedestrian disturbance in various environments.

Towards High Efficient Long-horizon Planning with Expert-guided Motion-encoding Tree Search

Sep 30, 2023

Abstract:Autonomous driving holds promise for increased safety, optimized traffic management, and a new level of convenience in transportation. While model-based reinforcement learning approaches such as MuZero enable long-term planning, the exponential increase in the number of search nodes as the tree goes deeper significantly affects the searching efficiency. To deal with this problem, in this paper we propose the expert-guided motion-encoding tree search (EMTS) algorithm. EMTS extends the MuZero algorithm by representing possible motions with a comprehensive motion primitives latent space and incorporating expert policies to improve the searching efficiency. The comprehensive motion primitives latent space enables EMTS to sample arbitrary trajectories instead of raw actions, which reduces the depth of the search tree. The incorporation of expert policies guides the search and training phases of the EMTS algorithm to enable early convergence. In the experiment section, the EMTS algorithm is compared with four other algorithms in three challenging scenarios. The experimental results verify the effectiveness and the searching efficiency of the proposed EMTS algorithm.

Haptics-Enabled Forceps with Multi-Modal Force Sensing: Towards Task-Autonomous Robotic Surgery

Mar 15, 2023

Abstract:Current minimally invasive surgical robots are lacking in force sensing that is robust to temperature and electromagnetic variation while being compatible with micro-sized instruments. This paper presents a multi-axis force sensing module that can be integrated with micro-sized surgical instruments such as biopsy forceps. The proposed miniature sensing module mainly consists of a flexure, a camera, and a target. The deformation of the flexure is obtained by the pose variation of the top-mounted target, which is estimated by the camera with a proposed pose estimation algorithm. Then, the external force is estimated using the flexure's displacement and stiffness matrix. Integrating the sensing module, we further develop a pair of haptics-enabled forceps and realize its multi-modal force sensing, including touching, grasping, and pulling when the forceps manipulate tissues. To minimize the unexpected sliding between the forceps' clips and the tissue, we design a micro-level actuator to drive the forceps and compensate for the motion introduced by the flexure's deformation. Finally, a series of experiments are conducted to verify the feasibility of the proposed sensing module and forceps, including an automatic robotic grasping procedure on ex-vivo tissues. The results indicate the sensing module can estimate external forces accurately, and the haptics-enabled forceps can potentially realize multi-modal force sensing for task-autonomous robotic surgery. A video demonstrating the experiments can be found at https://youtu.be/4UUTT_hiFcI.
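The force-estimation step mentioned in the abstract above (force from the flexure's displacement and stiffness matrix) can be illustrated with a minimal sketch. It assumes a linear-elastic relation F = K d; the function name `estimate_force` and all numeric values are hypothetical and are not taken from the paper.

```python
# Hypothetical sketch: assumes a linear-elastic flexure, so F = K * d.
def estimate_force(stiffness, displacement):
    """Multiply a row-major stiffness matrix by a displacement vector."""
    if any(len(row) != len(displacement) for row in stiffness):
        raise ValueError("each stiffness row must match the displacement length")
    return [sum(k * d for k, d in zip(row, displacement)) for row in stiffness]


# Illustrative values only (N/mm and mm); not measurements from the paper.
K = [
    [2.0, 0.0, 0.0],
    [0.0, 2.0, 0.0],
    [0.0, 0.0, 10.0],
]
d = [0.5, -0.25, 0.1]

force = estimate_force(K, d)
print(force)
```

A real flexure would generally have a full 6x6 stiffness matrix with off-diagonal coupling terms, and the displacement would come from the camera-based pose estimate rather than fixed numbers.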

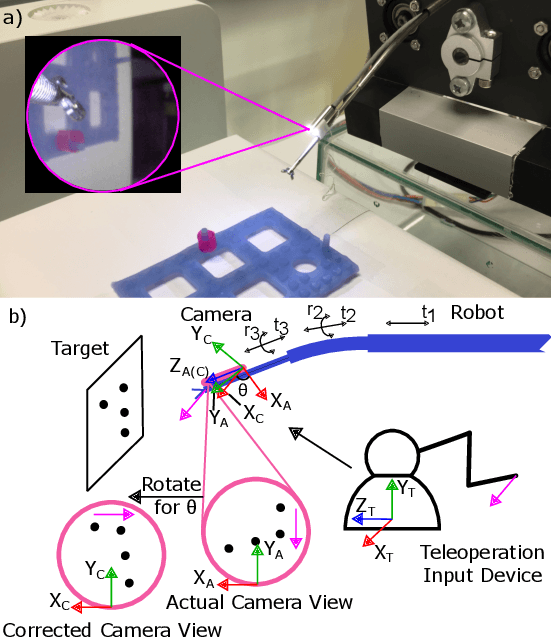

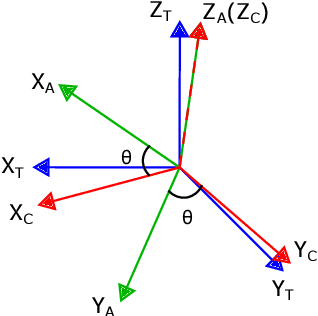

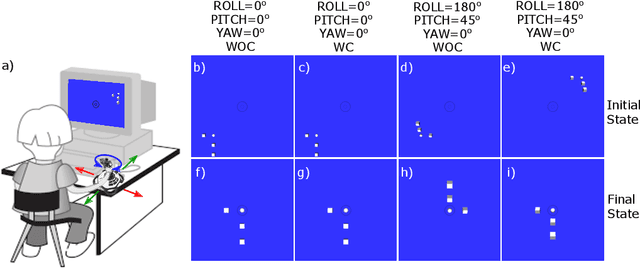

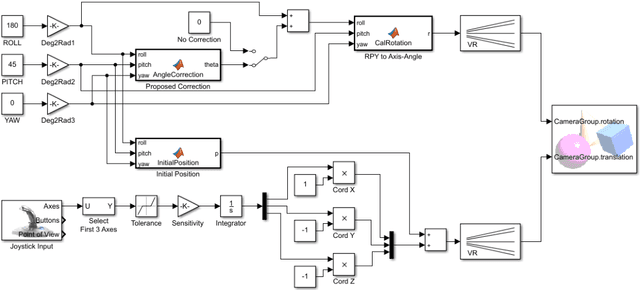

Camera Frame Misalignment in a Teleoperated Eye-in-Hand Robot: Effects and a Simple Correction Method

May 18, 2021

Abstract:Misalignment between the camera frame and the operator frame is commonly seen in teleoperated systems and usually degrades operation performance. The effects of such misalignment have not been fully investigated for eye-in-hand systems, that is, systems that have the camera (eye) mounted to the end-effector (hand) to gain compactness in confined spaces such as in endoscopic surgery. This paper provides a systematic study of the effects of camera frame misalignment in a teleoperated eye-in-hand robot and proposes a simple correction method in the view display. A simulation is designed to compare the effects of the misalignment under different conditions. Users are asked to move a rigid body from its initial position to the specified target position via teleoperation, with different levels of misalignment simulated. It is found that misalignment between the input motion and the output view is much more difficult for operators to compensate for when it is in the orthogonal direction (~40 s) than when it is in the opposite direction (~20 s). An experiment on a real concentric tube robot with an eye-in-hand configuration is also conducted. Users are asked to telemanipulate the robot to complete a pick-and-place task. Results show that with the correction enabled, there is a significant improvement in the operation performance in terms of completion time (mean 40.6%, median 38.6%), trajectory length (mean 34.3%, median 28.1%), difficulty (50.5%), unsteadiness (49.4%), and mental stress (60.9%).
