Jianzhun Du

Identifying Decision Points for Safe and Interpretable Reinforcement Learning in Hypotension Treatment

Jan 09, 2021

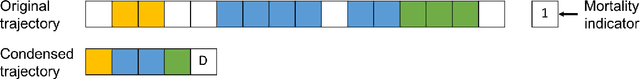

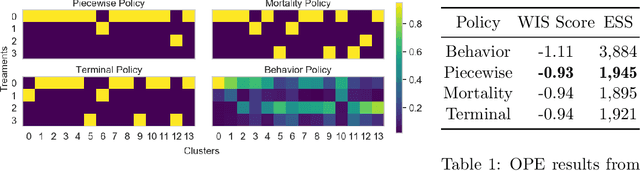

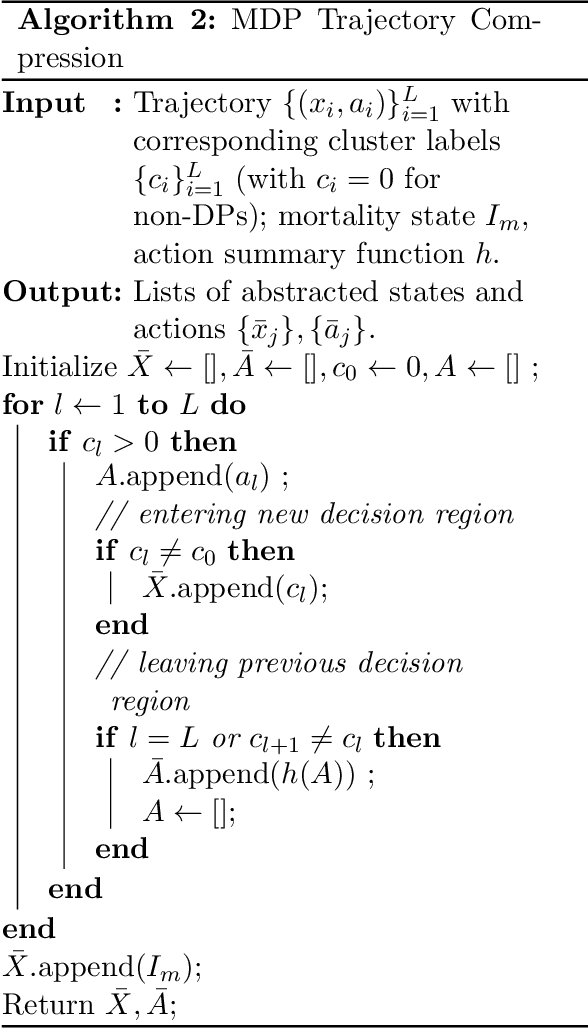

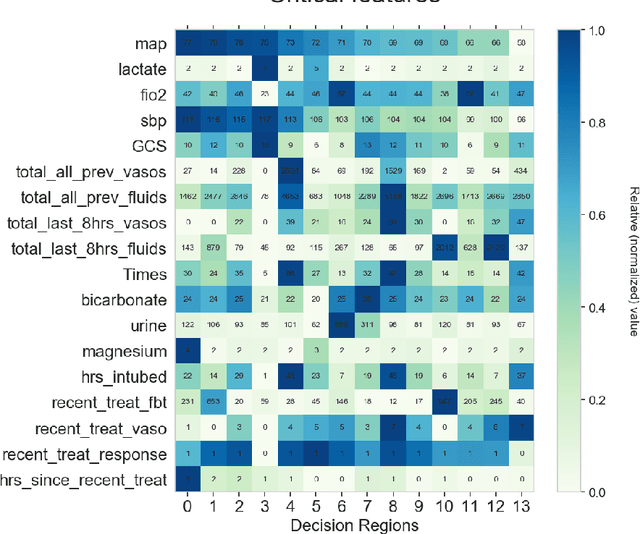

Abstract:Many batch RL health applications first discretize time into fixed intervals. However, this discretization both loses resolution and forces a policy computation at each (potentially fine) interval. In this work, we develop a novel framework to compress continuous trajectories into a few, interpretable decision points --places where the batch data support multiple alternatives. We apply our approach to create recommendations from a cohort of hypotensive patients dataset. Our reduced state space results in faster planning and allows easy inspection by a clinical expert.

Model-based Reinforcement Learning for Semi-Markov Decision Processes with Neural ODEs

Jun 29, 2020

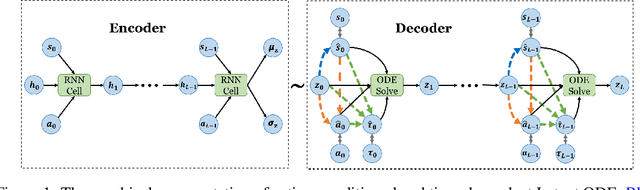

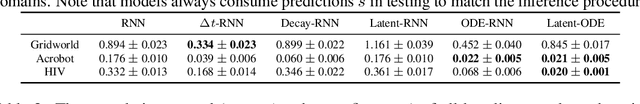

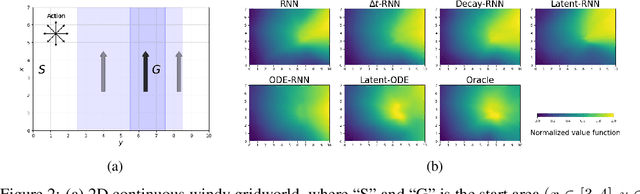

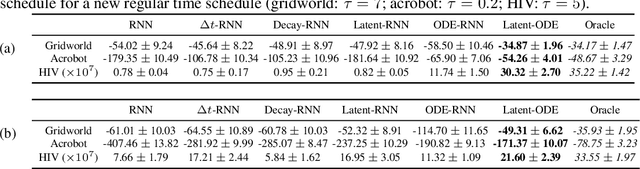

Abstract:We present two elegant solutions for modeling continuous-time dynamics, in a novel model-based reinforcement learning (RL) framework for semi-Markov decision processes (SMDPs), using neural ordinary differential equations (ODEs). Our models accurately characterize continuous-time dynamics and enable us to develop high-performing policies using a small amount of data. We also develop a model-based approach for optimizing time schedules to reduce interaction rates with the environment while maintaining the near-optimal performance, which is not possible for model-free methods. We experimentally demonstrate the efficacy of our methods across various continuous-time domains.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge