Jianting Wang

Shadow Generation for Composite Image Using Diffusion model

Mar 22, 2024

Abstract: In the realm of image composition, generating a realistic shadow for the inserted foreground remains a formidable challenge. Previous works have developed image-to-image translation models trained on paired data. However, they struggle to generate shadows with accurate shapes and intensities, hindered by data scarcity and the inherent complexity of the task. In this paper, we resort to a foundation model with rich prior knowledge of natural shadow images. Specifically, we first adapt ControlNet to our task and then propose intensity modulation modules to improve the shadow intensity. Moreover, we extend the small-scale DESOBA dataset to DESOBAv2 using a novel data acquisition pipeline. Experimental results on both the DESOBA and DESOBAv2 datasets, as well as on real composite images, demonstrate the superior capability of our model for the shadow generation task. The dataset, code, and model are released at https://github.com/bcmi/Object-Shadow-Generation-Dataset-DESOBAv2.

DESOBAv2: Towards Large-scale Real-world Dataset for Shadow Generation

Aug 19, 2023

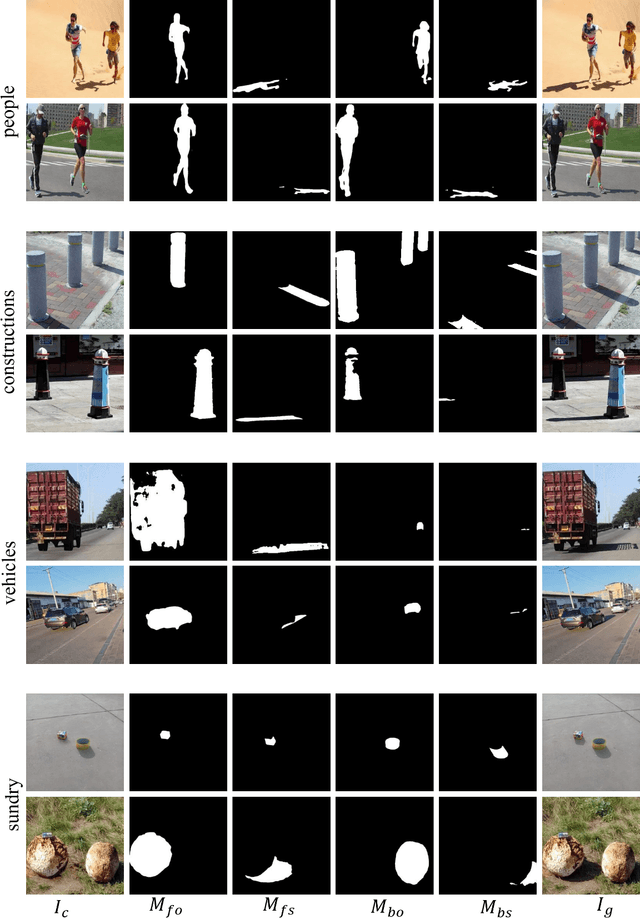

Abstract: Image composition refers to inserting a foreground object into a background image to obtain a composite image. In this work, we focus on generating a plausible shadow for the inserted foreground object to make the composite image more realistic. To supplement the existing small-scale dataset DESOBA, we create a large-scale dataset called DESOBAv2 by using object-shadow detection and inpainting techniques. Specifically, we collect a large number of outdoor scene images with object-shadow pairs. Then, we use a pretrained inpainting model to inpaint the shadow region, resulting in deshadowed images. Based on the real images and the deshadowed images, we can construct pairs of synthetic composite images and ground-truth target images. The dataset is available at https://github.com/bcmi/Object-Shadow-Generation-Dataset-DESOBAv2.
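
The pair-construction step described in the abstract can be illustrated with a small sketch. It is not the authors' code: the function name `make_composite`, the grayscale nested-list image format, and the toy pixel values are all assumptions made for illustration. The idea shown is that the synthetic composite takes deshadowed pixels inside the chosen foreground object's shadow mask and real pixels everywhere else, while the unmodified real image serves as the ground-truth target.

```python
def make_composite(real, deshadowed, fg_shadow_mask):
    """Build a synthetic composite image (hypothetical helper).

    Pixels inside the foreground object's shadow mask come from the
    deshadowed image; all other pixels come from the real image.
    Images are assumed to be equally sized nested lists of gray values.
    """
    return [
        [d if m else r for r, d, m in zip(real_row, desh_row, mask_row)]
        for real_row, desh_row, mask_row in zip(real, deshadowed, fg_shadow_mask)
    ]


# Toy 2x3 example (made-up values): dark pixels (60, 70) are shadows.
real = [[200, 60, 200],
        [200, 60, 70]]
deshadowed = [[200, 190, 200],
              [200, 195, 185]]
# Mask marks only the shadow of the object treated as the foreground.
fg_shadow_mask = [[0, 1, 0],
                  [0, 1, 0]]

composite = make_composite(real, deshadowed, fg_shadow_mask)
target = real  # the real image is the ground-truth target

print(composite)
```

In this toy case the shadow pixel outside the mask (value 70) is left untouched, reflecting the assumption that shadows of other objects stay in the composite.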
