Jianmin Guo

HDTest: Differential Fuzz Testing of Brain-Inspired Hyperdimensional Computing

Mar 15, 2021

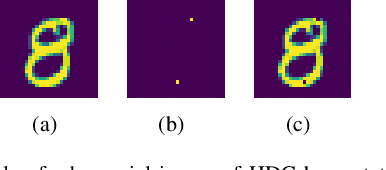

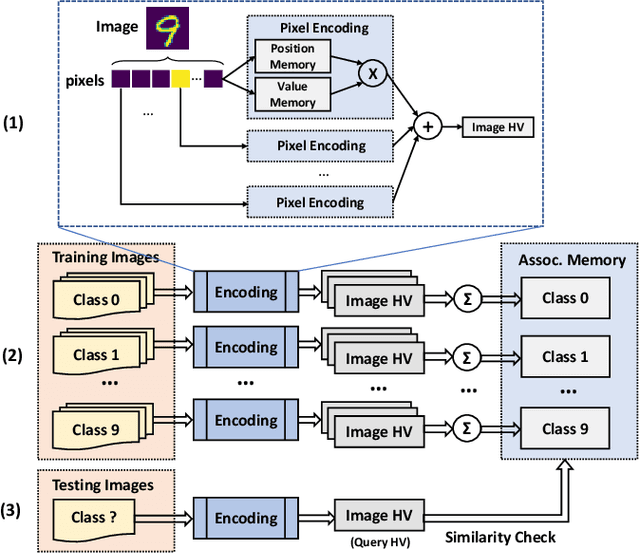

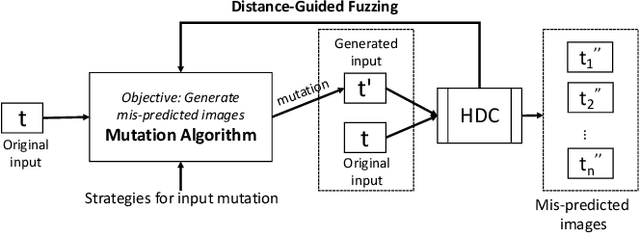

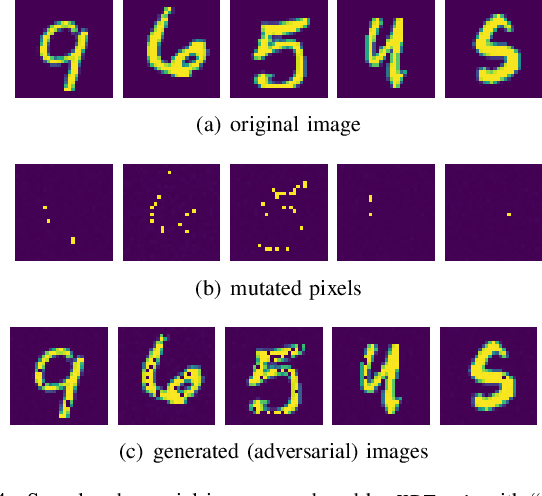

Abstract: Brain-inspired hyperdimensional computing (HDC) is an emerging computational paradigm that mimics brain cognition and leverages hyperdimensional vectors with fully distributed holographic representation and (pseudo)randomness. Compared to other machine learning (ML) methods such as deep neural networks (DNNs), HDC offers several advantages, including high energy efficiency, low latency, and one-shot learning, making it a promising alternative for a wide range of applications. However, the reliability and robustness of HDC models have not yet been explored. In this paper, we design, implement, and evaluate HDTest, which tests HDC models by automatically exposing unexpected or incorrect behaviors under rare inputs. The core idea of HDTest is guided differential fuzz testing. Guided by the distance between the query hypervector and the reference hypervector in HDC, HDTest continuously mutates original inputs to generate new inputs that can trigger incorrect behaviors of the HDC model. Compared to traditional ML testing methods, HDTest does not require the original inputs to be labeled manually. Using handwritten digit classification as an example, we show that HDTest can generate thousands of adversarial inputs with negligible perturbations that successfully fool HDC models. On average, HDTest generates around 400 adversarial inputs within one minute on a commodity computer. Finally, by retraining HDC models on the HDTest-generated inputs, we can strengthen their robustness. To the best of our knowledge, this paper presents the first effort to systematically test this emerging brain-inspired computational model.
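The guided differential fuzzing loop described in the abstract can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and is not taken from the paper: the toy position/value encoding, the cosine similarity, the single-pixel mutation, and all names (`build_toy_model`, `predict`, `fuzz`, `DIM`, `LEVELS`, `N_PIXELS`). The sketch only shows the general idea: take the model's own prediction on the seed as the reference (so no manual label is needed), keep mutations that push the query hypervector away from that class's reference hypervector, and stop once the prediction changes.

```python
import random

DIM = 1000     # hypervector dimensionality (toy value, assumed)
LEVELS = 4     # quantized pixel intensities 0..3 (assumed)
N_PIXELS = 8   # tiny "image" so the pure-Python demo stays fast

def random_hv(rng):
    """Random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]

def encode(pixels, pos_hvs, val_hvs):
    """Bind each position HV with its value HV, then bundle by summation."""
    acc = [0] * DIM
    for p, v in enumerate(pixels):
        ph, vh = pos_hvs[p], val_hvs[v]
        for i in range(DIM):
            acc[i] += ph[i] * vh[i]
    return acc

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = sum(x * x for x in a) ** 0.5
    nb = sum(y * y for y in b) ** 0.5
    return dot / (na * nb) if na and nb else 0.0

def build_toy_model(rng, prototypes):
    """Hypothetical model: one reference HV per class from one prototype."""
    pos_hvs = [random_hv(rng) for _ in range(N_PIXELS)]
    val_hvs = [random_hv(rng) for _ in range(LEVELS)]
    refs = {lbl: encode(px, pos_hvs, val_hvs) for lbl, px in prototypes.items()}
    return pos_hvs, val_hvs, refs

def predict(pixels, model):
    """Return the most similar class and the similarity to every class."""
    pos_hvs, val_hvs, refs = model
    query = encode(pixels, pos_hvs, val_hvs)
    sims = {lbl: cosine(query, ref) for lbl, ref in refs.items()}
    return max(sims, key=sims.get), sims

def fuzz(seed, model, rng, max_iters=300):
    """Mutate `seed` until the prediction differs from the original one."""
    base_label, sims = predict(seed, model)  # model's own output, no manual label
    current, best = list(seed), sims[base_label]
    for _ in range(max_iters):
        cand = list(current)
        i = rng.randrange(len(cand))
        # Assumed mutation: nudge one pixel by one intensity level, clamped.
        cand[i] = min(LEVELS - 1, max(0, cand[i] + rng.choice((-1, 1))))
        label, sims = predict(cand, model)
        if label != base_label:
            l1 = sum(abs(a - b) for a, b in zip(seed, cand))
            return cand, base_label, label, l1  # input that flips the output
        if sims[base_label] < best:  # guidance: keep moves away from the reference
            current, best = cand, sims[base_label]
    return None  # no differing input found within the budget
```

A call such as `fuzz([0] * N_PIXELS, build_toy_model(random.Random(0), {"A": [0] * N_PIXELS, "B": [3] * N_PIXELS}), random.Random(1))` returns either `None` or a tuple holding the mutated input, the original prediction, the new prediction, and the L1 size of the perturbation. A real setup would use image-sized inputs and the mutation strategies defined in the paper, which the abstract does not specify.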

RNN-Test: Adversarial Testing Framework for Recurrent Neural Network Systems

Nov 11, 2019

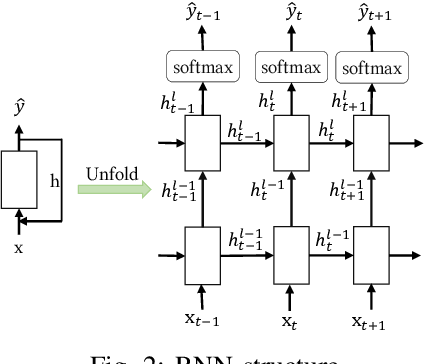

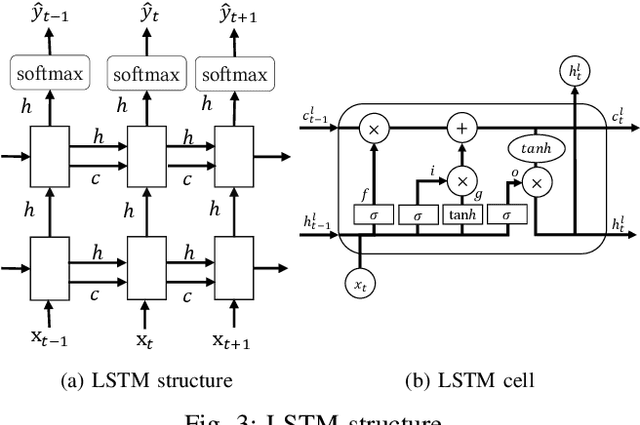

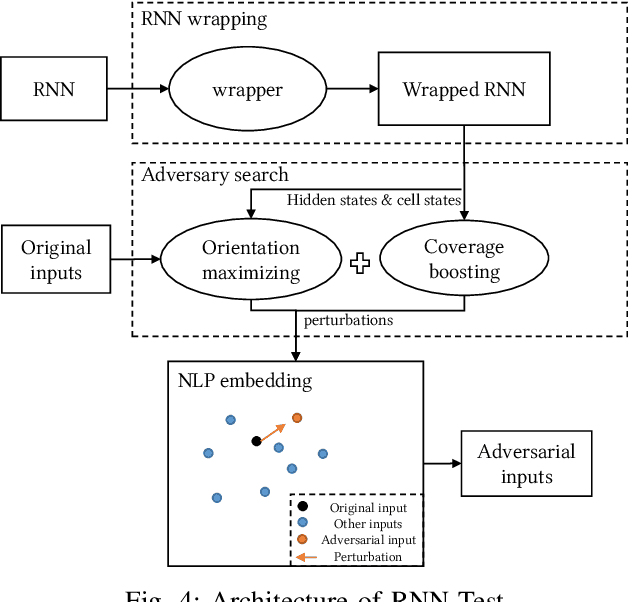

Abstract: While substantial effort has been devoted to the adversarial testing of convolutional neural networks (CNNs), the testing of recurrent neural networks (RNNs) is still limited to the classification context, which leaves vast sequential application domains exposed to threats. In this work, we propose a generic adversarial testing framework, RNN-Test. First, based on the distinctive structure of RNNs, we define three novel coverage metrics to measure testing completeness and guide the generation of adversarial inputs. Second, we propose the state inconsistency orientation, which generates perturbations by maximizing the inconsistency of the hidden states of RNN cells. Finally, we combine orientations with coverage guidance to produce minute perturbations. Given an RNN model and sequential inputs, RNN-Test modifies one character or one word of the whole input based on the perturbations obtained, so as to lead the RNN to produce wrong outputs. For evaluation, we apply RNN-Test to two models with common RNN structures: the PTB language model and the spell checker model. RNN-Test efficiently degrades the performance of the PTB language model, increasing its test perplexity by 58.11%, and finds numerous incorrect behaviors of the spell checker model, with a success rate of 73.44% on average. With our customization, RNN-Test using the redefined neuron coverage as guidance achieves 35.71% higher perplexity than the original strategy of DeepXplore.
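For readers unfamiliar with the metric, the perplexity figures above can be read through the standard definition: the exponential of the average negative log-likelihood the model assigns to the reference tokens. The sketch below assumes that standard definition. The function names and the numbers in the usage note are illustrative only and are not results from the paper.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp(mean negative log-probability of the true tokens)."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

def relative_increase(before, after):
    """Percentage increase from `before` to `after`."""
    return (after - before) / before * 100.0
```

For example, a model that assigns probability 0.25 to every reference token has a perplexity of about 4, and a hypothetical rise from a perplexity of 100.0 to 158.11 corresponds to an increase of about 58.11%, the kind of relative change the abstract reports.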
