Jiahua Luo

Department of Computer and Information Science, University of Macau, Macao SAR, China

AdaMoLE: Fine-Tuning Large Language Models with Adaptive Mixture of Low-Rank Adaptation Experts

May 01, 2024

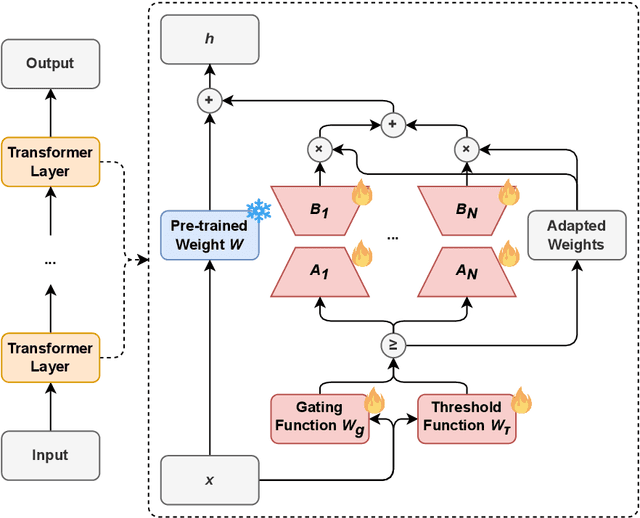

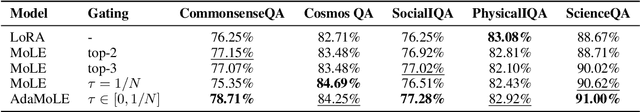

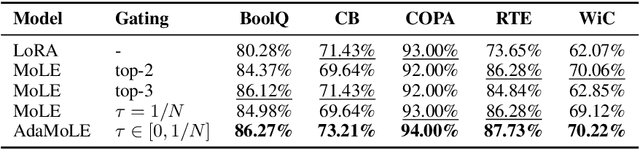

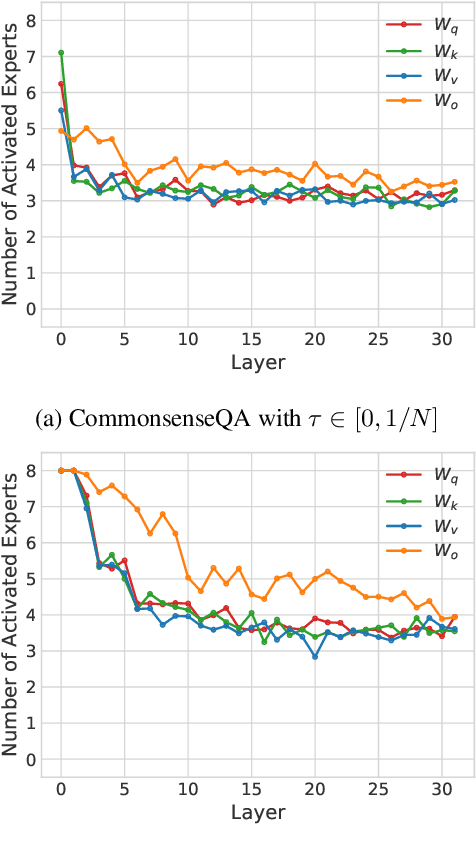

Abstract: We introduce AdaMoLE, a novel method for fine-tuning large language models (LLMs) through an Adaptive Mixture of Low-Rank Adaptation (LoRA) Experts. Moving beyond conventional methods that employ a static top-k strategy for activating experts, AdaMoLE dynamically adjusts the activation threshold using a dedicated threshold network, adaptively responding to the varying complexities of different tasks. By replacing a single LoRA in a layer with multiple LoRA experts and integrating a gating function with the threshold mechanism, AdaMoLE effectively selects and activates the most appropriate experts based on the input context. Our extensive evaluations across a variety of commonsense reasoning and natural language processing tasks show that AdaMoLE exceeds baseline performance. This enhancement highlights the advantages of AdaMoLE's adaptive selection of LoRA experts, improving model effectiveness without a corresponding increase in the expert count. The experimental validation not only confirms AdaMoLE as a robust approach for enhancing LLMs but also suggests valuable directions for future research in adaptive expert selection mechanisms, potentially broadening the scope for optimizing model performance across diverse language processing tasks.
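
The threshold-based expert selection described in this abstract can be illustrated with a minimal sketch. The abstract does not give the formulas, so everything here is an assumption made for illustration: the function name `adaptive_gate`, the use of a softmax gate, the sigmoid threshold capped at 1/N (N being the number of experts), and the scalar inputs `gate_logits` and `threshold_logit` standing in for the outputs of the gating and threshold networks.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def adaptive_gate(gate_logits, threshold_logit):
    """Return (threshold, per-expert weights) for one input.

    Hypothetical sketch: an expert is active only if its gate probability
    exceeds an input-dependent threshold, instead of using a fixed top-k.
    """
    n = len(gate_logits)
    probs = softmax(gate_logits)
    # Assumption: cap the threshold below 1/n. The largest gate probability
    # is always at least 1/n, so at least one expert stays active.
    tau = sigmoid(threshold_logit) / n
    weights = [max(p - tau, 0.0) for p in probs]
    return tau, weights


# Same gate logits, two different threshold-network outputs.
tau_high, w_high = adaptive_gate([2.0, 0.0, 0.0, -1.0], threshold_logit=0.0)
tau_low, w_low = adaptive_gate([2.0, 0.0, 0.0, -1.0], threshold_logit=-5.0)
print(sum(w > 0 for w in w_high), sum(w > 0 for w in w_low))
```

In this sketch a higher threshold prunes more experts for the same gate distribution, which is the sense in which the number of active experts adapts to the input rather than being fixed in advance.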

Sparse Bayesian Learning with Diagonal Quasi-Newton Method For Large Scale Classification

Jul 17, 2021

Abstract: Sparse Bayesian Learning (SBL) constructs an extremely sparse probabilistic model with very competitive generalization. However, SBL needs to invert a large covariance matrix with complexity O(M^3) (M: feature size) to update the regularization priors, which makes it difficult to use in practice. There are three issues in SBL: 1) inverting the covariance matrix may yield singular solutions in some cases, which prevents SBL from converging; 2) SBL scales poorly to problems with a high-dimensional feature space or a large data size; 3) SBL easily suffers from memory overflow on large-scale data. This paper addresses these issues with a newly proposed diagonal Quasi-Newton (DQN) method for SBL, called DQN-SBL, in which the inversion of the large covariance matrix is avoided so that the complexity and memory storage are reduced to O(M). DQN-SBL is thoroughly evaluated on non-linear classifiers and linear feature selection using various benchmark datasets of different sizes. Experimental results verify that DQN-SBL achieves competitive generalization with a very sparse model and scales well to large-scale problems.
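
To make the O(M^3) versus O(M) contrast concrete, the following sketch shows a generic diagonal approximation for a Gaussian-likelihood SBL model. It is not the paper's DQN update, which the abstract does not specify. The assumptions are: a precision matrix of the form A + beta * Phi^T Phi with A = diag(alpha), and an approximation that keeps only its diagonal so that each variance is a scalar reciprocal. The names `diagonal_posterior_variance`, `phi`, `alpha`, and `beta` are hypothetical.

```python
def diagonal_posterior_variance(phi, alpha, beta):
    """Approximate per-weight posterior variances without a matrix inverse.

    phi   : list of N rows, each a list of M feature values (design matrix)
    alpha : list of M prior precisions, one per weight
    beta  : noise precision (scalar)

    Assumption: only the diagonal of alpha + beta * Phi^T Phi is kept, so
    storage is O(M) and the "inversion" is M scalar reciprocals.
    """
    m = len(alpha)
    col_sq = [0.0] * m  # running sum of squares of each feature column
    for row in phi:
        for j, value in enumerate(row):
            col_sq[j] += value * value
    return [1.0 / (alpha[j] + beta * col_sq[j]) for j in range(m)]


variances = diagonal_posterior_variance(
    phi=[[1.0, 0.0], [1.0, 2.0]], alpha=[1.0, 1.0], beta=2.0
)
print(variances)
```

Because every diagonal entry is at least alpha[j] > 0, the reciprocals are always defined, which also illustrates how a diagonal scheme sidesteps the singular-inverse issue listed in the abstract.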
