Jessica Morley

What Kind of Reasoning (if any) is an LLM actually doing? On the Stochastic Nature and Abductive Appearance of Large Language Models

Dec 10, 2025

Abstract: This article looks at how reasoning works in current Large Language Models (LLMs) that function using the token-completion method. It examines their stochastic nature and their similarity to human abductive reasoning. The argument is that these LLMs create text based on learned patterns rather than performing actual abductive reasoning. When their output seems abductive, this is largely because they are trained on human-generated texts that include reasoning structures. Examples are used to show how LLMs can produce plausible ideas, mimic commonsense reasoning, and give explanatory answers without being grounded in truth, semantics, verification, or understanding, and without performing any real abductive reasoning. This dual nature, where the models have a stochastic base but appear abductive in use, has important consequences for how LLMs are evaluated and applied. They can assist with generating ideas and supporting human thinking, but their outputs must be critically assessed because they cannot identify truth or verify their explanations. The article concludes by addressing five objections to these points, noting some limitations in the analysis, and offering an overall evaluation.

Cyber Risks to Next-Gen Brain-Computer Interfaces: Analysis and Recommendations

Aug 18, 2025

Abstract: Brain-computer interfaces (BCIs) show enormous potential for advancing personalized medicine. However, BCIs also introduce new avenues for cyber-attacks or security compromises. In this article, we analyze the problem and make recommendations for device manufacturers to better secure devices and to help regulators understand where more guidance is needed to protect patient safety and data confidentiality. Device manufacturers should implement the prior suggestions in their BCI products. These recommendations help protect BCI users from undue risks, including compromised personal health and genetic information, unintended BCI-mediated movement, and many other cybersecurity breaches. Regulators should mandate non-surgical device update methods, strong authentication and authorization schemes for BCI software modifications, encryption of data moving to and from the brain, and minimize network connectivity where possible. We also design a hypothetical, average-case threat model that identifies possible cybersecurity threats to BCI patients and predicts the likeliness of risk for each category of threat. BCIs are at less risk of physical compromise or attack, but are vulnerable to remote attack; we focus on possible threats via network paths to BCIs and suggest technical controls to limit network connections.

Agentic AI Optimisation (AAIO): what it is, how it works, why it matters, and how to deal with it

Apr 16, 2025

Abstract: The emergence of Agentic Artificial Intelligence (AAI) systems capable of independently initiating digital interactions necessitates a new optimisation paradigm designed explicitly for seamless agent-platform interactions. This article introduces Agentic AI Optimisation (AAIO) as an essential methodology for ensuring effective integration between websites and agentic AI systems. Like how Search Engine Optimisation (SEO) has shaped digital content discoverability, AAIO can define interactions between autonomous AI agents and online platforms. By examining the mutual interdependency between website optimisation and agentic AI success, the article highlights the virtuous cycle that AAIO can create. It further explores the governance, ethical, legal, and social implications (GELSI) of AAIO, emphasising the necessity of proactive regulatory frameworks to mitigate potential negative impacts. The article concludes by affirming AAIO's essential role as part of a fundamental digital infrastructure in the era of autonomous digital agents, advocating for equitable and inclusive access to its benefits.

Towards a framework for evaluating the safety, acceptability and efficacy of AI systems for health: an initial synthesis

Apr 14, 2021

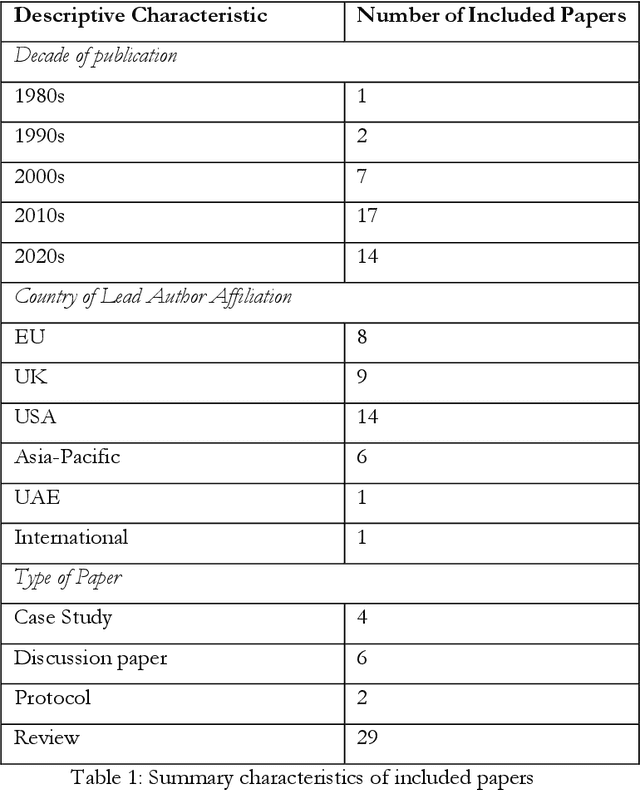

Abstract: The potential presented by Artificial Intelligence (AI) for healthcare has long been recognised by the technical community. More recently, this potential has been recognised by policymakers, resulting in considerable public and private investment in the development of AI for healthcare across the globe. Despite this, excepting limited success stories, real-world implementation of AI systems into front-line healthcare has been limited. There are numerous reasons for this, but a main contributory factor is the lack of internationally accepted, or formalised, regulatory standards to assess AI safety and impact and effectiveness. This is a well-recognised problem with numerous ongoing research and policy projects to overcome it. Our intention here is to contribute to this problem-solving effort by seeking to set out a minimally viable framework for evaluating the safety, acceptability and efficacy of AI systems for healthcare. We do this by conducting a systematic search across Scopus, PubMed and Google Scholar to identify all the relevant literature published between January 1970 and November 2020 related to the evaluation of: output performance; efficacy; and real-world use of AI systems, and synthesising the key themes according to the stages of evaluation: pre-clinical (theoretical phase); exploratory phase; definitive phase; and post-market surveillance phase (monitoring). The result is a framework to guide AI system developers, policymakers, and regulators through a sufficient evaluation of an AI system designed for use in healthcare.

From What to How. An Overview of AI Ethics Tools, Methods and Research to Translate Principles into Practices

May 15, 2019

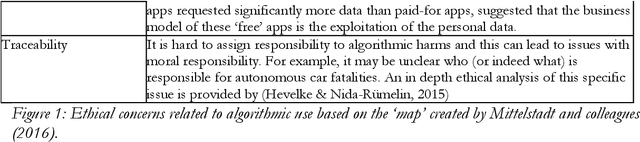

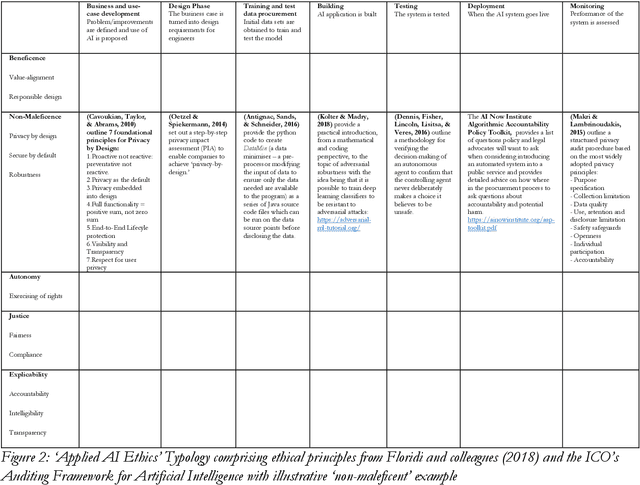

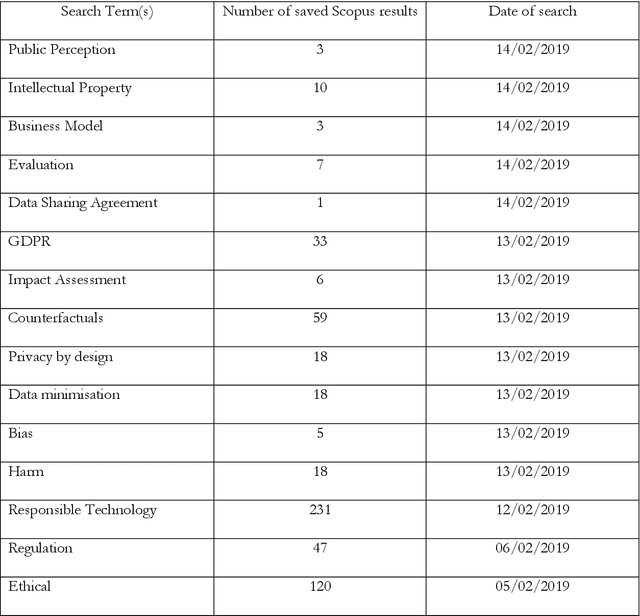

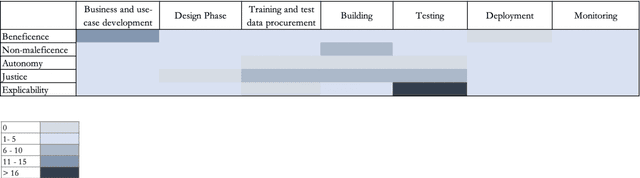

Abstract: The debate about the ethical implications of Artificial Intelligence dates from the 1960s. However, in recent years symbolic AI has been complemented and sometimes replaced by Neural Networks and Machine Learning techniques. This has vastly increased its potential utility and impact on society, with the consequence that the ethical debate has gone mainstream. Such debate has primarily focused on principles - the what of AI ethics - rather than on practices, the how. Awareness of the potential issues is increasing at a fast rate, but the AI community's ability to take action to mitigate the associated risks is still at its infancy. Therefore, our intention in presenting this research is to contribute to closing the gap between principles and practices by constructing a typology that may help practically-minded developers apply ethics at each stage of the pipeline, and to signal to researchers where further work is needed. The focus is exclusively on Machine Learning, but it is hoped that the results of this research may be easily applicable to other branches of AI. The article outlines the research method for creating this typology, the initial findings, and provides a summary of future research needs.