Jerome R. Bellegarda

Embedded Large-Scale Handwritten Chinese Character Recognition

Apr 13, 2020

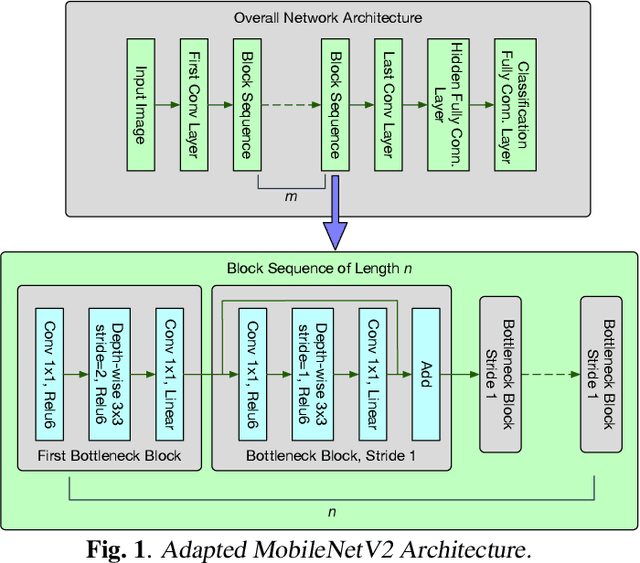

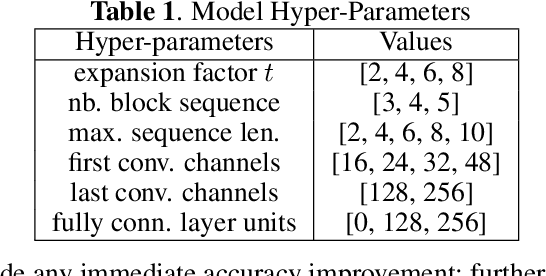

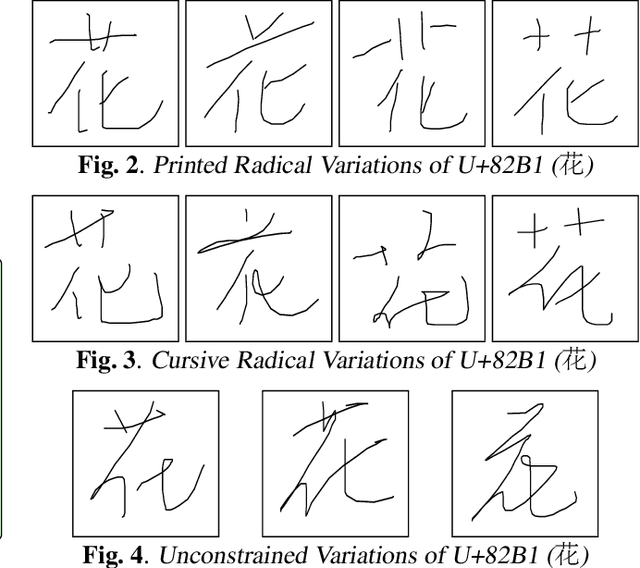

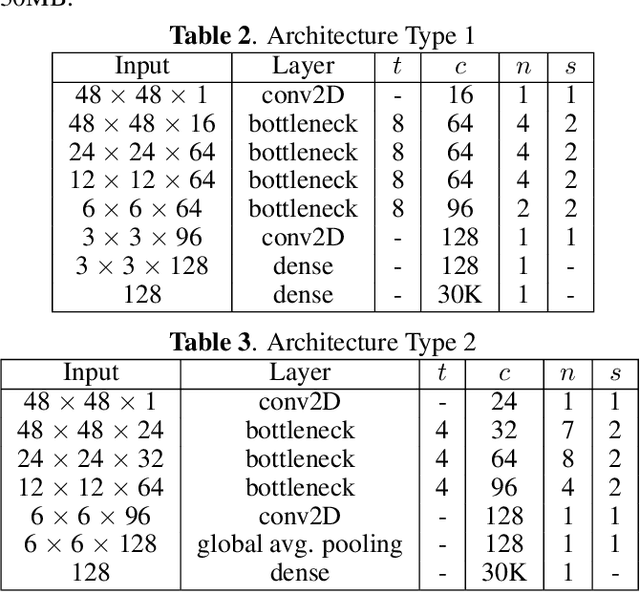

Abstract: As handwriting input becomes more prevalent, the large symbol inventory required to support Chinese handwriting recognition poses unique challenges. This paper describes how the Apple deep learning recognition system can accurately handle up to 30,000 Chinese characters while running in real time across a range of mobile devices. To achieve acceptable accuracy, we paid particular attention to data collection conditions, representativeness of writing styles, and training regimen. We found that, with proper care, even larger inventories are within reach. Our experiments show that accuracy only degrades slowly as the inventory increases, as long as we use training data of sufficient quality and in sufficient quantity.

Reverse Transfer Learning: Can Word Embeddings Trained for Different NLP Tasks Improve Neural Language Models?

Sep 09, 2019

Abstract: Natural language processing (NLP) tasks tend to suffer from a paucity of suitably annotated training data, hence the recent success of transfer learning across a wide variety of them. The typical recipe involves: (i) training a deep, possibly bidirectional, neural network with an objective related to language modeling, for which training data is plentiful; and (ii) using the trained network to derive contextual representations that are far richer than standard linear word embeddings such as word2vec, and thus result in important gains. In this work, we ask whether the reverse also holds: can contextual representations trained for different NLP tasks improve language modeling itself? Since language models (LMs) are predominantly locally optimized, other NLP tasks may help them make better predictions based on the entire semantic fabric of a document. We test the performance of several types of pre-trained embeddings in neural LMs, and we investigate whether it is possible to make the LM more aware of global semantic information through embeddings pre-trained with a domain classification model. Initial experiments suggest that as long as the proper objective criterion is used during training, pre-trained embeddings are likely to be beneficial for neural language modeling.
