Jeremie Laydevant

Self-Contrastive Forward-Forward Algorithm

Sep 17, 2024

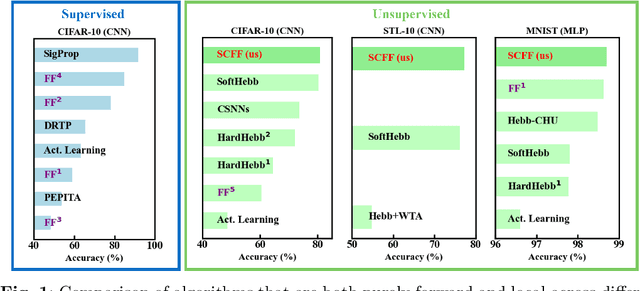

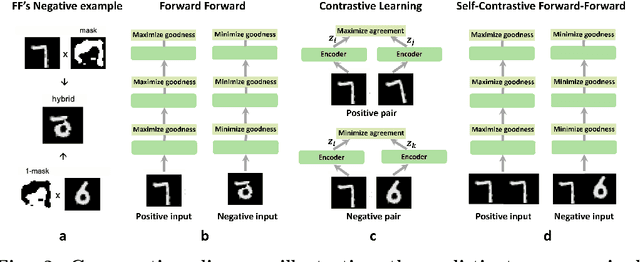

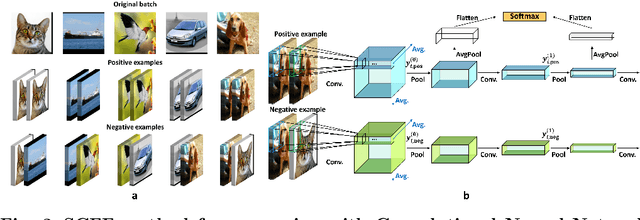

Abstract: The Forward-Forward (FF) algorithm is a recent, purely forward-mode learning method that updates weights locally and layer-wise and supports supervised as well as unsupervised learning. These features make it ideal for applications such as brain-inspired learning, low-power hardware neural networks, and distributed learning in large models. However, while FF has shown promise on written digit recognition tasks, its performance on natural images and time series remains a challenge. A key limitation is the need to generate high-quality negative examples for contrastive learning, especially in unsupervised tasks, where versatile solutions are currently lacking. To address this, we introduce the Self-Contrastive Forward-Forward (SCFF) method, inspired by self-supervised contrastive learning. SCFF generates positive and negative examples applicable across different datasets, surpassing existing local forward algorithms in unsupervised classification accuracy on MNIST (MLP: 98.7%), CIFAR-10 (CNN: 80.75%), and STL-10 (CNN: 77.3%). Additionally, SCFF is the first to enable FF training of recurrent neural networks, opening the door to more complex tasks and continuous-time video and text processing.

The hardware is the software

Oct 20, 2023

Abstract: Human brains and bodies are not hardware running software: the hardware is the software. We reason that because the microscopic physics of artificial-intelligence hardware and of human biological "hardware" is distinct, neuromorphic engineers need to be cautious (and yet also creative) in how we take inspiration from biological intelligence. We should focus primarily on principles and design ideas that respect -- and embrace -- the underlying hardware physics of non-biological intelligent systems, rather than abstracting it away. We see a major role for neuroscience in neuromorphic computing as identifying the physics-agnostic principles of biological intelligence -- that is, the principles of biological intelligence that can be gainfully adapted and applied to any physical hardware.
