Jeongkyu Lee

KLO-Net: A Dynamic K-NN Attention U-Net with CSP Encoder for Efficient Prostate Gland Segmentation from MRI

Dec 15, 2025

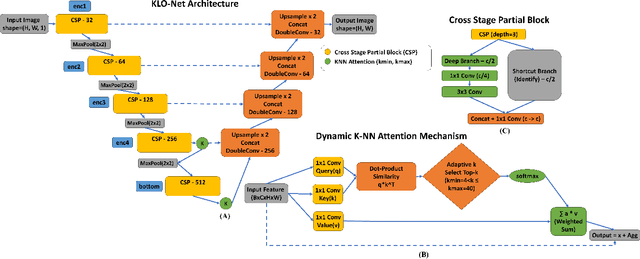

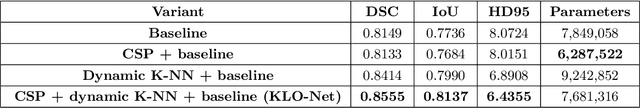

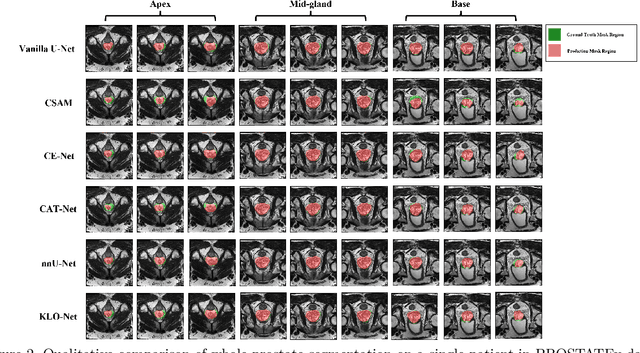

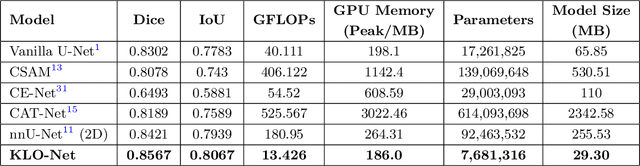

Abstract: Real-time deployment of prostate MRI segmentation on clinical workstations is often bottlenecked by computational load and memory footprint. Deep learning-based prostate gland segmentation also remains challenging due to anatomical variability. To bridge this efficiency gap while maintaining reliable segmentation accuracy, we propose KLO-Net, a dynamic K-Nearest Neighbor (K-NN) attention U-Net with a Cross Stage Partial (CSP) encoder for efficient prostate gland segmentation from MRI scans. Unlike the regular K-NN attention mechanism, the proposed dynamic K-NN attention mechanism allows the model to adaptively determine the number of attention connections for each spatial location within a slice. In addition, CSP blocks reduce the computational load and memory consumption. Comprehensive experiments and ablation studies on two public datasets, PROMISE12 and PROSTATEx, validate the proposed architecture. The detailed comparative analysis demonstrates the model's advantage in computational efficiency and segmentation quality.
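The idea of a per-location number of attention connections can be illustrated with a minimal sketch. The abstract does not say how KLO-Net chooses that number, so the rule used here (keep keys whose score is at or above the query's mean score, clamped to `k_min` and `k_max`) is purely an assumption for illustration, as are the function name and the absence of learned projections.

```python
import math

def dynamic_knn_attention(queries, keys, values, k_min=1, k_max=None):
    """Toy K-NN attention where each query picks its own k.

    ASSUMPTION: k is the count of keys scoring at or above the query's
    mean score. This rule is hypothetical, not taken from the paper.
    """
    d = len(queries[0])
    k_max = k_max or len(keys)
    outputs, ks = [], []
    for q in queries:
        # Scaled dot-product score against every key.
        scores = [sum(a * b for a, b in zip(q, key)) / math.sqrt(d) for key in keys]
        mean = sum(scores) / len(scores)
        # Per-query k: how many keys clear the (assumed) threshold.
        k = sum(1 for s in scores if s >= mean)
        k = max(k_min, min(k, k_max))
        # Keep only the k highest-scoring keys, then softmax over them.
        top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
        m = max(scores[i] for i in top)
        exps = {i: math.exp(scores[i] - m) for i in top}
        z = sum(exps.values())
        out = [sum(exps[i] / z * values[i][c] for i in top)
               for c in range(len(values[0]))]
        outputs.append(out)
        ks.append(k)
    return outputs, ks

keys = [[3.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0], [2.0], [3.0]]
outputs, ks = dynamic_knn_attention([[1.0, 0.0], [1.0, 2.0]], keys, values)
```

In this toy input the first query attends to one key and the second to two, which is the behavior a fixed-k K-NN attention cannot express.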

XAG-Net: A Cross-Slice Attention and Skip Gating Network for 2.5D Femur MRI Segmentation

Aug 08, 2025

Abstract: Accurate segmentation of femur structures from Magnetic Resonance Imaging (MRI) is critical for orthopedic diagnosis and surgical planning but remains challenging due to the limitations of existing 2D and 3D deep learning-based segmentation approaches. In this study, we propose XAG-Net, a novel 2.5D U-Net-based architecture that incorporates pixel-wise cross-slice attention (CSA) and skip attention gating (AG) mechanisms to enhance inter-slice contextual modeling and intra-slice feature refinement. Unlike previous CSA-based models, XAG-Net applies pixel-wise softmax attention across adjacent slices at each spatial location for fine-grained inter-slice modeling. Extensive evaluations demonstrate that XAG-Net surpasses baseline 2D, 2.5D, and 3D U-Net models in femur segmentation accuracy while maintaining computational efficiency. Ablation studies further validate the critical role of the CSA and AG modules, establishing XAG-Net as a promising framework for efficient and accurate femur MRI segmentation.
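Pixel-wise softmax attention across adjacent slices can be sketched as follows. This is a simplified illustration, not the XAG-Net module: it assumes identity query, key, and value projections (a trained model would presumably learn these), and the function names and nested-list layout are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_slice_attention(slices, center):
    """Fuse S adjacent slices into one feature map for the center slice.

    slices: list of S feature maps, each H x W x C as nested lists.
    ASSUMPTION: raw features serve as queries, keys, and values.
    """
    height, width = len(slices[0]), len(slices[0][0])
    channels = len(slices[0][0][0])
    scale = math.sqrt(channels)
    fused_map = []
    for y in range(height):
        row = []
        for x in range(width):
            q = slices[center][y][x]
            # One score per slice, computed at this pixel only.
            scores = [sum(a * b for a, b in zip(q, s[y][x])) / scale for s in slices]
            weights = softmax(scores)  # softmax runs across slices
            fused = [sum(w * s[y][x][c] for w, s in zip(weights, slices))
                     for c in range(channels)]
            row.append(fused)
        fused_map.append(row)
    return fused_map

# Three identical 1x1 slices with two channels: fusion returns the same features.
stack = [[[[1.0, 2.0]]] for _ in range(3)]
result = cross_slice_attention(stack, center=1)
```

Because the softmax is taken over the slice axis independently at every `(y, x)`, each pixel can weight its neighbors in adjacent slices differently.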

Performance Analysis of Deep Learning Models for Femur Segmentation in MRI Scan

Apr 05, 2025

Abstract: Convolutional neural networks like U-Net excel in medical image segmentation, while attention mechanisms and Kolmogorov-Arnold Networks (KAN) enhance feature extraction. Meta's SAM 2 uses Vision Transformers for prompt-based segmentation without fine-tuning. However, biases in these models impact generalization with limited data. In this study, we systematically evaluate and compare the performance of three CNN-based models, i.e., U-Net, Attention U-Net, and U-KAN, and one transformer-based model, i.e., SAM 2, for segmenting femur bone structures in MRI scans. The dataset comprises 11,164 MRI scans with detailed annotations of femoral regions. Performance is assessed using the Dice Similarity Coefficient, with scores ranging from 0.932 to 0.954. Attention U-Net achieves the highest overall scores, while U-KAN demonstrates superior performance in anatomical regions with a smaller region of interest, leveraging its enhanced learning capacity to improve segmentation accuracy.
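For reference, the Dice Similarity Coefficient between a predicted mask A and a ground-truth mask B is 2|A ∩ B| / (|A| + |B|). A minimal sketch for binary masks follows; the function name, the nested-list mask layout, and the convention for two empty masks are assumptions made for this example.

```python
def dice_coefficient(pred, target):
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks given as nested lists of 0/1."""
    flat_pred = [p for row in pred for p in row]
    flat_target = [t for row in target for t in row]
    intersection = sum(p * t for p, t in zip(flat_pred, flat_target))
    total = sum(flat_pred) + sum(flat_target)
    if total == 0:
        # Assumed convention: two empty masks count as perfect agreement.
        return 1.0
    return 2.0 * intersection / total

pred = [[1, 1], [0, 0]]
target = [[1, 0], [0, 0]]
score = dice_coefficient(pred, target)  # 2 * 1 / (2 + 1)
```

A score of 1.0 means the masks overlap exactly and 0.0 means they share no foreground pixels.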

Mapping Areas using Computer Vision Algorithms and Drones

Jan 01, 2019

Abstract: The goal of this paper is to implement a system, called Drone Map Creator (DMC), using Computer Vision techniques. DMC processes visual information from an HD camera mounted on a drone and automatically creates a map by stitching the captured frames together. The proposed approach employs the Speeded-Up Robust Features (SURF) method to detect the key points for each image frame; then the corresponding points between the frames are identified by maximizing the determinant of a Hessian matrix. Finally, two images are stitched together by using the identified points. Our results show that, despite some limitations from the external environment, we successfully stitched images together along video sequences.
