Jennifer J. Gago

Neural Policy Style Transfer

Feb 01, 2024

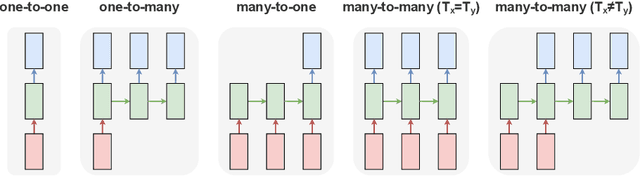

Abstract: Style Transfer has been proposed in a number of fields: fine arts, natural language processing, and fixed trajectories. We scale this concept up to control policies within a Deep Reinforcement Learning infrastructure. Each network is trained to maximize the expected reward, which typically encodes the goal of an action and can be described as the content. The expressive power of deep neural networks enables encoding a secondary task, which can be described as the style. The Neural Policy Style Transfer (NPST) algorithm is proposed to transfer the style of one policy to another while maintaining the content of the latter. Policies are defined via Deep Q-Network architectures and trained from demonstrations through Inverse Reinforcement Learning. Two sets of user demonstrations are performed, one for content and the other for style, so that different styles are encoded as defined by the user demonstrations. The generated policy is the result of feeding a content policy and a style policy to the NPST algorithm. Experiments are performed in a catch-ball game inspired by the classic Atari games of Deep Reinforcement Learning, and in a real-world painting scenario with a full-sized humanoid robot, based on the authors' previous work. Three Q-Network architectures (Shallow, Deep, and Deep Recurrent Q-Network) are implemented to encode the policies within the NPST framework, and the results obtained with each architecture are compared.

Sequence-to-Sequence Natural Language to Humanoid Robot Sign Language

Jul 09, 2019

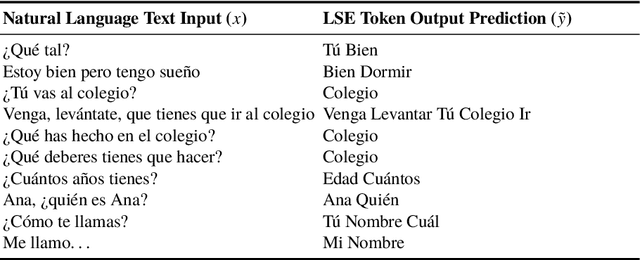

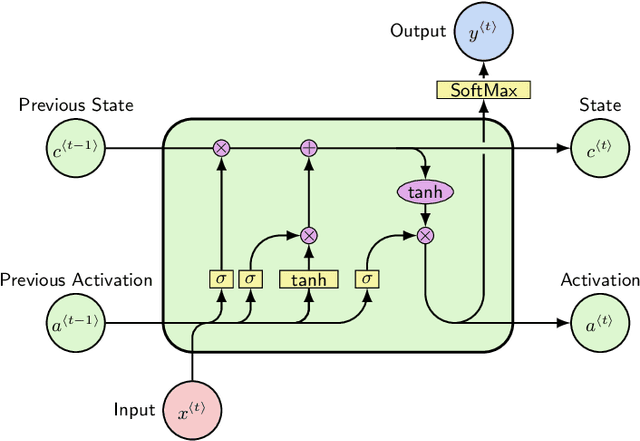

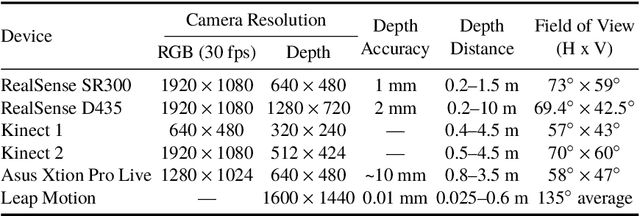

Abstract: This paper presents a study on natural language to sign language translation for human-robot interaction applications. With the presented methodology, the humanoid robot TEO is expected to represent Spanish sign language automatically by converting text into movements using neural networks. Natural language to sign language translation presents several challenges to developers, such as the mismatch between the lengths of input and output data and the use of non-manual markers. Therefore, neural networks and, consequently, sequence-to-sequence models are selected as a data-driven system to avoid traditional expert system approaches or temporal dependency limitations that lead to limited or overly complex translation systems. To achieve these objectives, human skeleton acquisition is needed to collect the signing input data. OpenPose and skeletonRetriever are proposed for this purpose, and a 3D sensor specification study is carried out to select the best acquisition hardware.

Sign Language Representation by TEO Humanoid Robot: End-User Interest, Comprehension and Satisfaction

Jan 17, 2019

Abstract: In this paper, we illustrate our work on improving the accessibility of Cyber-Physical Systems (CPS), presenting a study on human-robot interaction where the end-users are either deaf or hearing-impaired people. Current trends in robotic designs include devices with robotic arms and hands capable of performing manipulation and grasping tasks. This paper focuses on how these devices can be used for a different purpose: enabling robotic communication via sign language. For the study, several tests and questionnaires are run to measure how end-users feel about interpreting sign language represented by a humanoid robotic assistant as opposed to subtitles on a screen. Stemming from this dichotomy, dactylology, basic vocabulary representation, and end-user satisfaction are the main topics covered by a form given to participants, in which additional comments are valued and taken into consideration for further decision making regarding human-robot interaction. The experiments were performed using TEO, a household companion humanoid robot developed at the University Carlos III de Madrid (UC3M), via representations in Spanish Sign Language (LSE), with a total of 16 deaf and hearing-impaired participants.

* 21 pages, 11 figures, MDPI Electronics Journal
