Jeffrey N. Chiang

Clinical ModernBERT: An efficient and long context encoder for biomedical text

Apr 04, 2025

Abstract: We introduce Clinical ModernBERT, a transformer-based encoder pretrained on large-scale biomedical literature, clinical notes, and medical ontologies, incorporating PubMed abstracts, MIMIC-IV clinical data, and medical codes with their textual descriptions. Building on ModernBERT, the current state-of-the-art natural language text encoder featuring architectural upgrades such as rotary positional embeddings (RoPE), Flash Attention, and an extended context length of up to 8,192 tokens, our model adapts these innovations specifically for biomedical and clinical domains. Clinical ModernBERT excels at producing semantically rich representations tailored for long-context tasks. We validate this both by analyzing its pretrained weights and through empirical evaluation on a comprehensive suite of clinical NLP benchmarks.

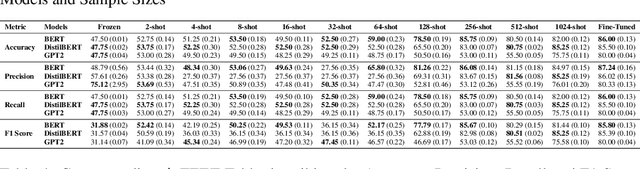

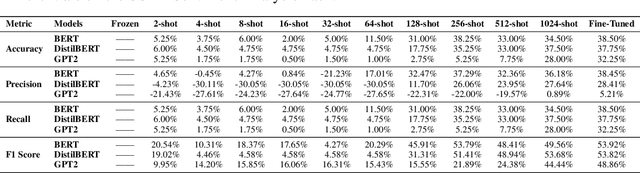

FEET: A Framework for Evaluating Embedding Techniques

Nov 02, 2024

Abstract: In this study, we introduce FEET, a standardized protocol designed to guide the development and benchmarking of foundation models. While numerous benchmark datasets exist for evaluating these models, we propose a structured evaluation protocol across three distinct scenarios to gain a comprehensive understanding of their practical performance. We define three primary use cases: frozen embeddings, few-shot embeddings, and fully fine-tuned embeddings. Each scenario is detailed and illustrated through two case studies: one in sentiment analysis and another in the medical domain, demonstrating how these evaluations provide a thorough assessment of foundation models' effectiveness in research applications. We recommend this protocol as a standard for future research aimed at advancing representation learning models.

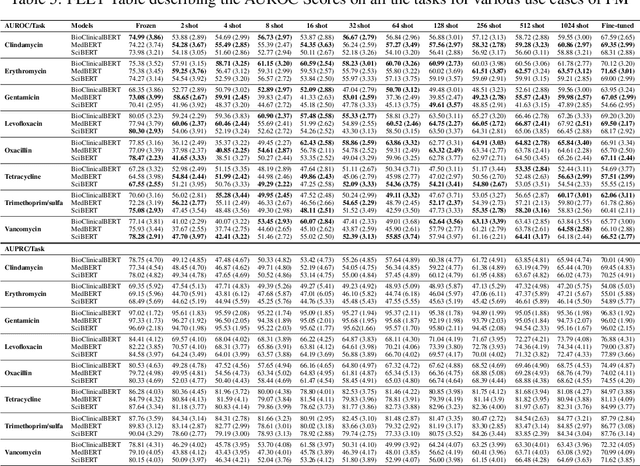

Enhancing Antibiotic Stewardship using a Natural Language Approach for Better Feature Representation

May 30, 2024

Abstract: The rapid emergence of antibiotic-resistant bacteria is recognized as a global healthcare crisis, undermining the efficacy of life-saving antibiotics. This crisis is driven by the improper use and overuse of antibiotics, which escalates bacterial resistance. In response, this study explores the use of clinical decision support systems, enhanced through the integration of electronic health records (EHRs), to improve antibiotic stewardship. However, EHR systems present numerous data-level challenges, complicating the effective synthesis and utilization of data. In this work, we transform EHR data into a serialized textual representation and employ pretrained foundation models to demonstrate how this enhanced feature representation can aid in antibiotic susceptibility predictions. Our results suggest that this text representation, combined with foundation models, provides a valuable tool to increase interpretability and support antibiotic stewardship efforts.

Multimodal Clinical Pseudo-notes for Emergency Department Prediction Tasks using Multiple Embedding Model for EHR (MEME)

Jan 31, 2024

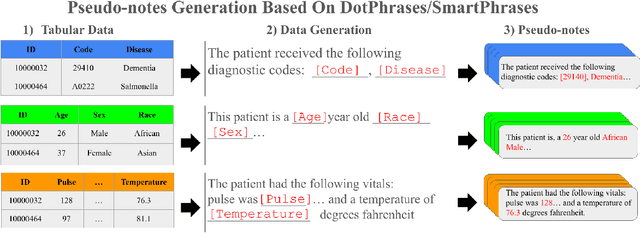

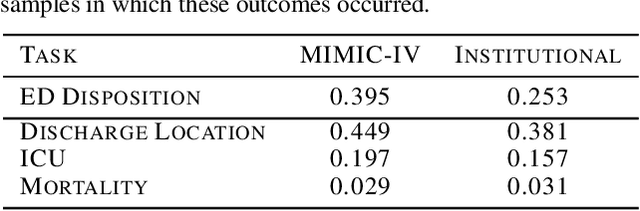

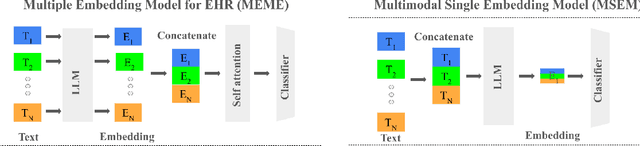

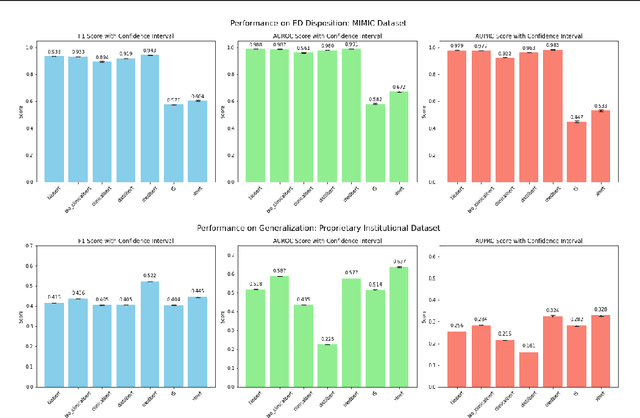

Abstract: In this work, we introduce Multiple Embedding Model for EHR (MEME), an approach that views Electronic Health Records (EHR) as multimodal data. This approach incorporates "pseudo-notes", textual representations of tabular EHR concepts such as diagnoses and medications, and allows us to effectively employ Large Language Models (LLMs) for EHR representation. This framework also adopts a multimodal approach, embedding each EHR modality separately. We demonstrate the effectiveness of MEME by applying it to several tasks within the Emergency Department across multiple hospital systems. Our findings show that MEME surpasses the performance of both single modality embedding methods and traditional machine learning approaches. However, we also observe notable limitations in generalizability across hospital institutions for all tested models.
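
As a rough illustration of the "pseudo-notes" idea, the sketch below turns tabular EHR fields into one short text note per modality. It is not the authors' implementation: the function name `make_pseudo_notes`, the modality names, and the sentence templates are all hypothetical, and the step of embedding each note separately with a text encoder is left out.

```python
from typing import Dict, List

# Hypothetical modality names and sentence templates, chosen for illustration only.
TEMPLATES: Dict[str, str] = {
    "diagnoses": "The patient has the following diagnoses: {items}.",
    "medications": "The patient is taking the following medications: {items}.",
}


def make_pseudo_notes(record: Dict[str, List[str]]) -> Dict[str, str]:
    """Turn each tabular EHR modality into one short text note."""
    notes: Dict[str, str] = {}
    for modality, values in record.items():
        if not values:
            continue  # skip modalities with no entries
        # Fall back to a generic "name: items." template for unknown modalities.
        template = TEMPLATES.get(modality, modality + ": {items}.")
        notes[modality] = template.format(items=", ".join(values))
    return notes


# Toy record with made-up values; real EHR tables would need cleaning first.
record = {
    "diagnoses": ["hypertension", "type 2 diabetes"],
    "medications": ["metformin"],
    "procedures": [],
}
notes = make_pseudo_notes(record)
for modality, text in notes.items():
    print(modality, "->", text)
```

Keeping one note per modality, rather than concatenating everything into a single string, mirrors the abstract's description of embedding each EHR modality separately.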
