Jeffrey M. Shainline

Relating Superconducting Optoelectronic Networks to Classical Neurodynamics

Sep 26, 2024

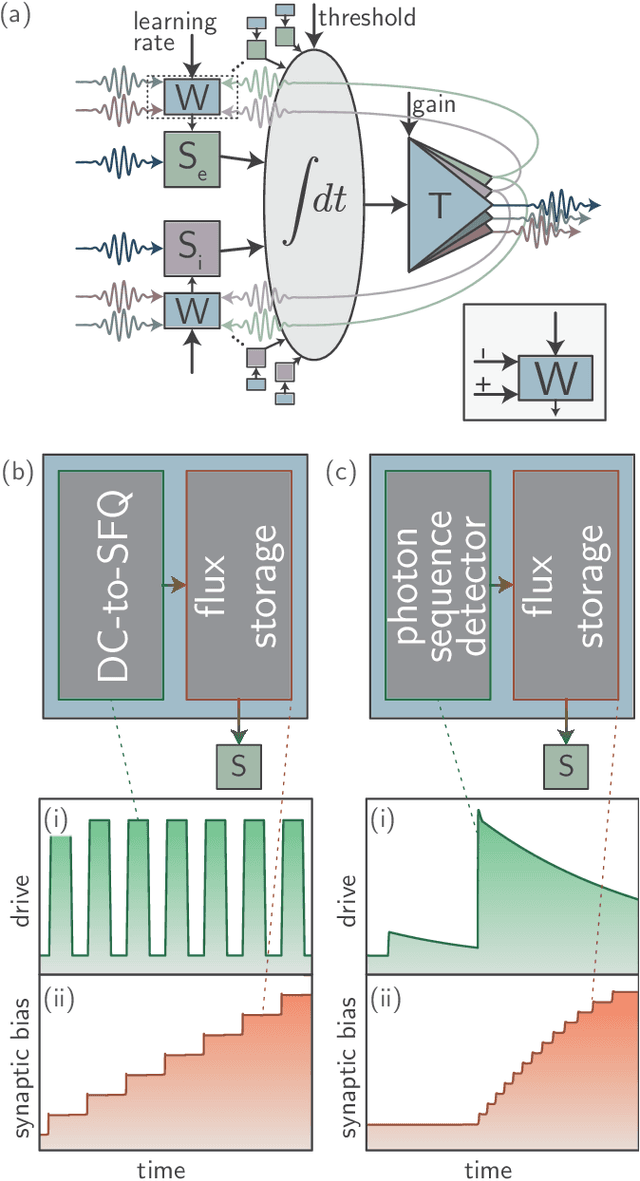

Abstract: The circuits comprising superconducting optoelectronic synapses, dendrites, and neurons are described by numerically cumbersome and formally opaque coupled differential equations. Reference 1 showed that a phenomenological model of superconducting loop neurons eliminates the need to solve the Josephson circuit equations that describe synapses and dendrites. The initial goal of the model was to decrease the time required for simulations, yet an additional benefit of the model was increased transparency of the underlying neural circuit operations and conceptual clarity regarding the connection of loop neurons to other physical systems. Whereas the original model simplified the treatment of the Josephson-junction dynamics, essentially by only considering low-pass versions of the dendritic outputs, the model resorted to an awkward treatment of spikes generated by semiconductor transmitter circuits that required explicitly checking for threshold crossings and distinct treatment of time steps wherein somatic threshold is reached. Here we extend that model to simplify the treatment of spikes coming from somas, again making use of the fact that in neural systems the downstream recipients of spike events almost always perform low-pass filtering. We provide comparisons between the first and second phenomenological models, quantifying the accuracy of the additional approximations. We identify regions of circuit parameter space in which the extended model works well and regions where it works poorly. For some circuit parameters it is possible to represent the downstream dendritic response to a single spike as well as coincidences or sequences of spikes, indicating the model is not simply a reduction to rate coding. The governing equations are shown to be nearly identical to those ubiquitous in the neuroscience literature for modeling leaky-integrator dendrites and neurons.

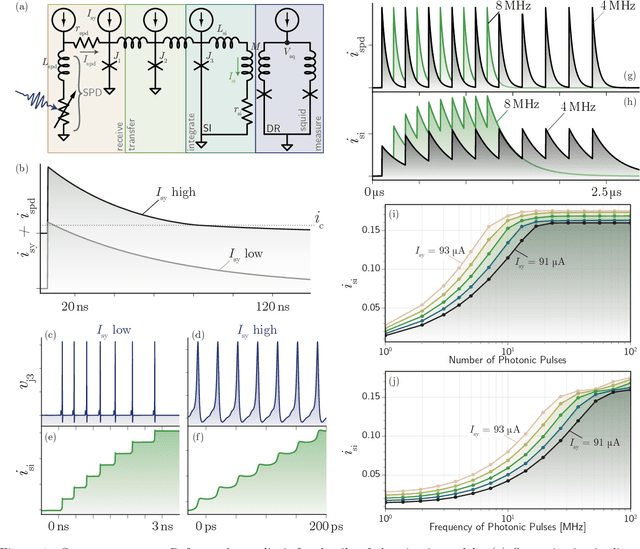

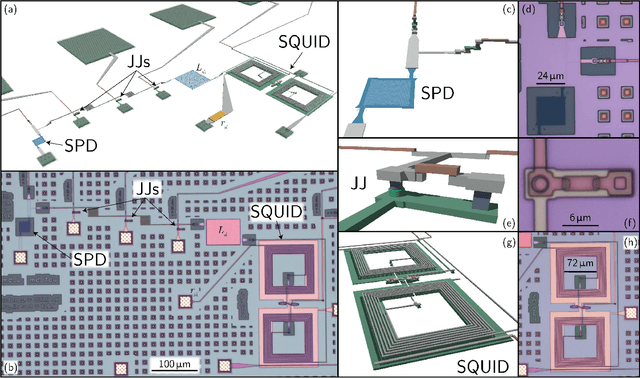

Programmable Superconducting Optoelectronic Single-Photon Synapses with Integrated Multi-State Memory

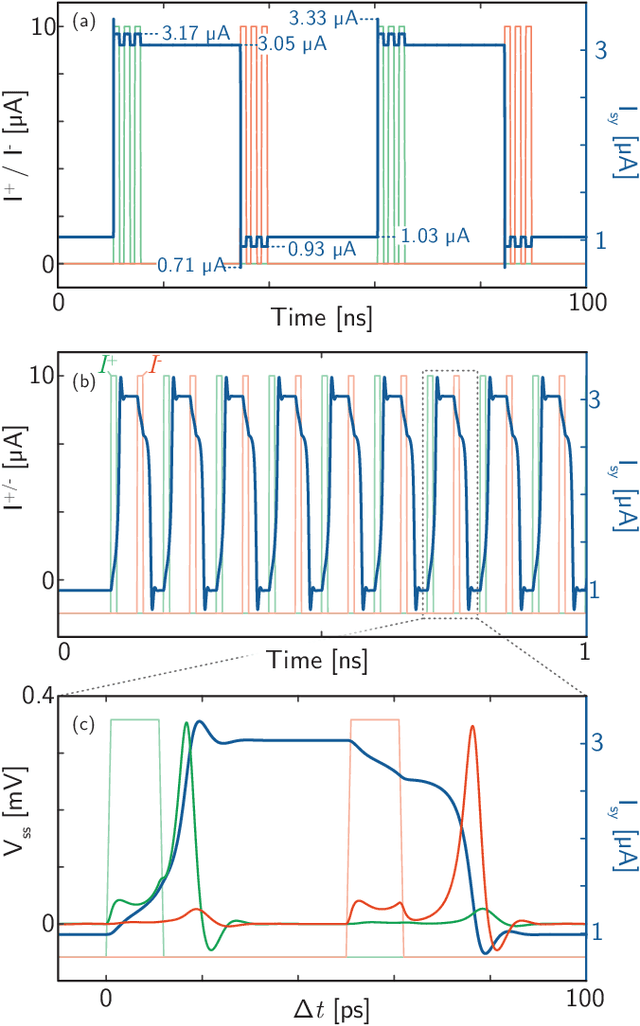

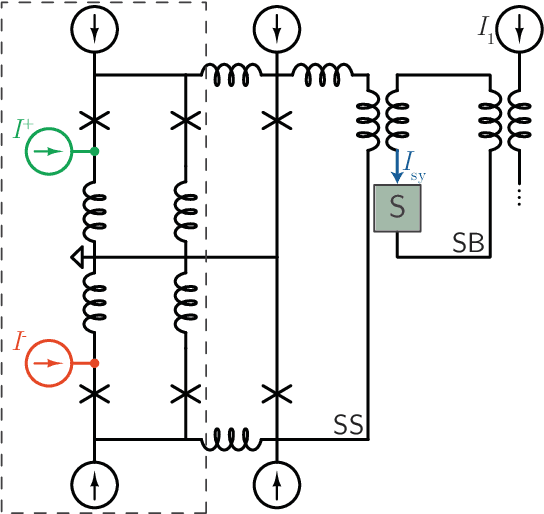

Nov 10, 2023

Abstract: The co-location of memory and processing is a core principle of neuromorphic computing. A local memory device for synaptic weight storage has long been recognized as an enabling element for large-scale, high-performance neuromorphic hardware. In this work, we demonstrate programmable superconducting synapses with integrated memories for use in superconducting optoelectronic neural systems. Superconducting nanowire single-photon detectors and Josephson junctions are combined into programmable synaptic circuits that exhibit single-photon sensitivity, memory cells with more than 400 internal states, leaky integration of input spike events, and 0.4 fJ programming energies (including cooling power). These results are attractive for implementing a variety of supervised and unsupervised learning algorithms and lay the foundation for a new hardware platform optimized for large-scale spiking network accelerators.

Phenomenological Model of Superconducting Optoelectronic Loop Neurons

Oct 18, 2022

Abstract: Superconducting optoelectronic loop neurons are a class of circuits potentially conducive to networks for large-scale artificial cognition. These circuits employ superconducting components including single-photon detectors, Josephson junctions, and transformers to achieve neuromorphic functions. To date, all simulations of loop neurons have used first-principles circuit analysis to model the behavior of synapses, dendrites, and neurons. These circuit models are computationally inefficient and leave opaque the relationship between loop neurons and other complex systems. Here we introduce a modeling framework that captures the behavior of the relevant synaptic, dendritic, and neuronal circuits at a phenomenological level without resorting to full circuit equations. Within this compact model, each dendrite is discovered to obey a single nonlinear leaky-integrator ordinary differential equation, while a neuron is modeled as a dendrite with a thresholding element and an additional feedback mechanism for establishing a refractory period. A synapse is modeled as a single-photon detector coupled to a dendrite, where the response of the single-photon detector follows a closed-form expression. We quantify the accuracy of the phenomenological model relative to circuit simulations and find that the approach reduces computational time by a factor of ten thousand while maintaining accuracy of one part in ten thousand. We demonstrate the use of the model with several basic examples. The net increase in computational efficiency enables future simulation of large networks, while the formulation provides a connection to a large body of work in applied mathematics, computational neuroscience, and physical systems such as spin glasses.
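To make the phrase "nonlinear leaky-integrator ordinary differential equation" concrete, the sketch below integrates a generic equation of the form ds/dt = gamma * r(phi, s) - s / tau with forward Euler. This is an illustration only, not the model from the paper: the source term `rate`, the threshold `phi_th`, and every parameter value are hypothetical placeholders, since the abstract does not give the functional form.

```python
def rate(phi: float, s: float, phi_th: float = 0.2) -> float:
    """Hypothetical source term (an assumption, not the paper's function):
    zero below the threshold phi_th, and saturating as the signal s approaches 1."""
    drive = max(0.0, phi - phi_th)
    return drive * max(0.0, 1.0 - s)


def integrate_dendrite(phi_of_t, tau=50.0, gamma=1.0, dt=0.1, steps=2000):
    """Forward-Euler integration of ds/dt = gamma * rate(phi, s) - s / tau."""
    s = 0.0
    trace = []
    for n in range(steps):
        phi = phi_of_t(n * dt)  # input evaluated at the start of the step
        s += dt * (gamma * rate(phi, s) - s / tau)
        trace.append(s)
    return trace


# Constant input for t < 100 (arbitrary units), then no input:
# the signal charges toward a saturated level, then leaks away with time constant tau.
trace = integrate_dendrite(lambda t: 0.5 if t < 100.0 else 0.0)
```

With these placeholder values the signal settles where the source and leak terms balance while the input is on, then decays exponentially once the input is removed. A neuron in the sense of the abstract would add a thresholding element and a refractory feedback term, neither of which is sketched here.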

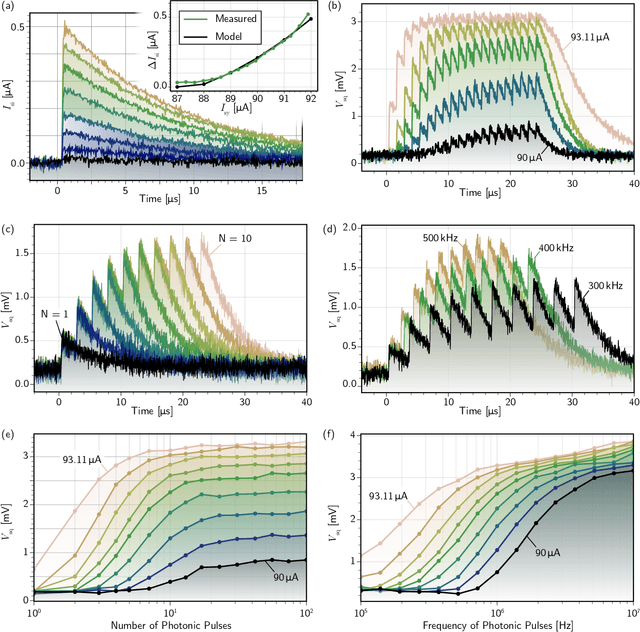

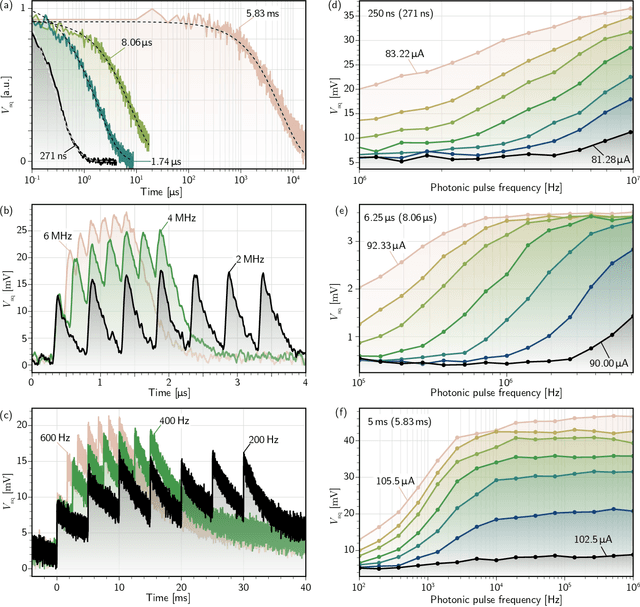

Demonstration of Superconducting Optoelectronic Single-Photon Synapses

Apr 20, 2022

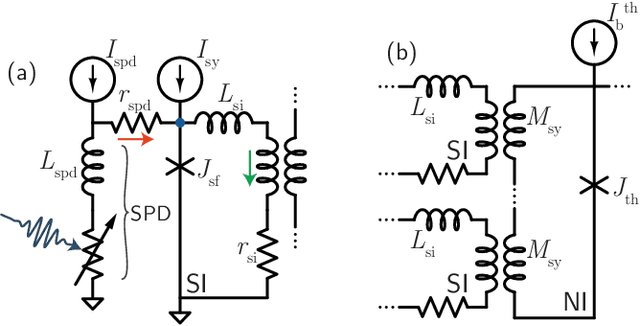

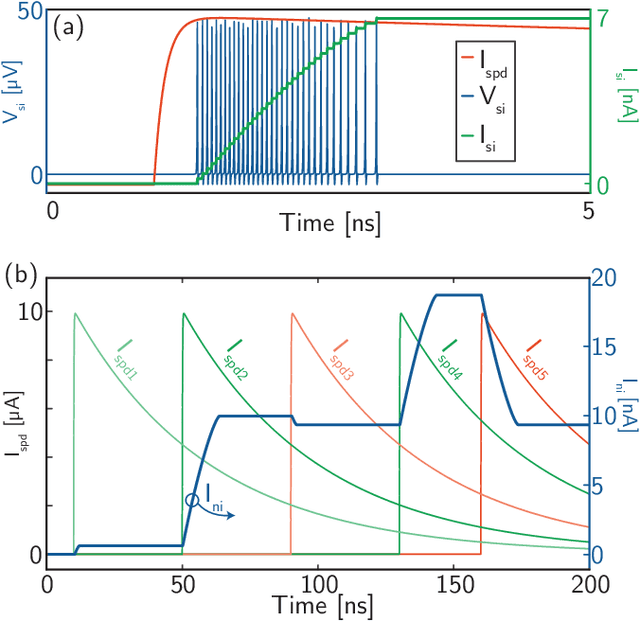

Abstract: Superconducting optoelectronic hardware is being explored as a path towards artificial spiking neural networks with unprecedented scales of complexity and computational ability. Such hardware combines integrated-photonic components for few-photon, light-speed communication with superconducting circuits for fast, energy-efficient computation. Monolithic integration of superconducting and photonic devices is necessary for the scaling of this technology. In the present work, superconducting-nanowire single-photon detectors are monolithically integrated with Josephson junctions for the first time, enabling the realization of superconducting optoelectronic synapses. We present circuits that perform analog weighting and temporal leaky integration of single-photon presynaptic signals. Synaptic weighting is implemented in the electronic domain so that binary, single-photon communication can be maintained. Records of recent synaptic activity are locally stored as current in superconducting loops. Dendritic and neuronal nonlinearities are implemented with a second stage of Josephson circuitry. The hardware presents great design flexibility, with demonstrated synaptic time constants spanning four orders of magnitude (hundreds of nanoseconds to milliseconds). The synapses are responsive to presynaptic spike rates exceeding 10 MHz and consume approximately 33 aJ of dynamic power per synapse event before accounting for cooling. In addition to neuromorphic hardware, these circuits introduce new avenues towards realizing large-scale single-photon-detector arrays for diverse imaging, sensing, and quantum communication applications.

An active dendritic tree can mitigate fan-in limitations in superconducting neurons

Jul 12, 2021

Abstract: Superconducting electronic circuits have much to offer with regard to neuromorphic hardware. Superconducting quantum interference devices (SQUIDs) can serve as an active element to perform the thresholding operation of a neuron's soma. However, a SQUID has a response function that is periodic in the applied signal. We show theoretically that if one restricts the total input to a SQUID to maintain a monotonically increasing response, a large fraction of synapses must be active to drive a neuron to threshold. We then demonstrate that an active dendritic tree (also based on SQUIDs) can significantly reduce the fraction of synapses that must be active to drive the neuron to threshold. In this context, the inclusion of a dendritic tree provides the dual benefits of enhancing the computational abilities of each neuron and allowing the neuron to spike with sparse input activity.

Optoelectronic Intelligence

Oct 17, 2020

Abstract: To design and construct hardware for general intelligence, we must consider principles of both neuroscience and very-large-scale integration. For large neural systems capable of general intelligence, the attributes of photonics for communication and electronics for computation are complementary and interdependent. Using light for communication enables high fan-out as well as low-latency signaling across large systems with no traffic-dependent bottlenecks. For computation, the inherent nonlinearities, high speed, and low power consumption of Josephson circuits are conducive to complex neural functions. Operation at 4 K enables the use of single-photon detectors and silicon light sources, two features that lead to efficiency and economical scalability. Here I sketch a concept for optoelectronic hardware, beginning with synaptic circuits, continuing through wafer-scale integration, and extending to systems interconnected with fiber-optic white matter, potentially at the scale of the human brain and beyond.

Fluxonic Processing of Photonic Synapse Events

Apr 04, 2019

Abstract: Much of the information processing performed by a neuron occurs in the dendritic tree. For neural systems using light for communication, it is advantageous to convert signals to the electronic domain at synaptic terminals so dendritic computation can be performed with electrical circuits. Here we present circuits based on Josephson junctions and mutual inductors that act as dendrites, processing signals from synapses receiving single-photon communication events with superconducting detectors. We show simulations of circuits performing basic temporal filtering, logical operations, and nonlinear transfer functions. We further show how the synaptic signal from a single photon can fan out locally in the electronic domain to enable the dendrites of the receiving neuron to process a photonic synapse event or pulse train in multiple different ways simultaneously. Such a technique makes efficient use of photons, energy, space, and information.

Circuit designs for superconducting optoelectronic loop neurons

Sep 07, 2018

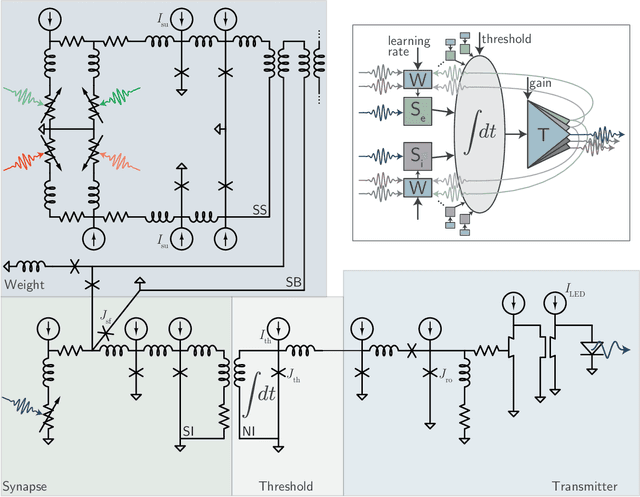

Abstract: Optical communication achieves high fan-out and short delay advantageous for information integration in neural systems. Superconducting detectors enable signaling with single photons for maximal energy efficiency. We present designs of superconducting optoelectronic neurons based on superconducting single-photon detectors, Josephson junctions, semiconductor light sources, and multi-planar dielectric waveguides. These circuits achieve complex synaptic and neuronal functions with high energy efficiency, leveraging the strengths of light for communication and superconducting electronics for computation. The neurons send few-photon signals to synaptic connections. These signals communicate neuronal firing events as well as update synaptic weights. Spike-timing-dependent plasticity is implemented with a single photon triggering each step of the process. Microscale light-emitting diodes and waveguide networks enable connectivity from a neuron to thousands of synaptic connections, and the use of light for communication enables synchronization of neurons across an area limited only by the distance light can travel within the period of a network oscillation. Experimentally, each of the requisite circuit elements has been demonstrated, yet a hardware platform combining them all has not been attempted. Compared to digital logic or quantum computing, device tolerances are relaxed. For this neural application, optical sources providing incoherent pulses with 10,000 photons produced with efficiency of $10^{-3}$ operating at 20 MHz at 4.2 K are sufficient to enable a massively scalable neural computing platform with connectivity comparable to the brain and thirty thousand times higher speed.

The largest cognitive systems will be optoelectronic

Sep 07, 2018

Abstract: Electrons and photons offer complementary strengths for information processing. Photons are excellent for communication, while electrons are superior for computation and memory. Cognition requires distributed computation to be communicated across the system for information integration. We present reasoning from neuroscience, network theory, and device physics supporting the conjecture that large-scale cognitive systems will benefit from electronic devices performing synaptic, dendritic, and neuronal information processing operating in conjunction with photonic communication. On the chip scale, integrated dielectric waveguides enable fan-out to thousands of connections. On the system scale, fiber and free-space optics can be employed. The largest cognitive systems will be limited by the distance light can travel during the period of a network oscillation. We calculate that optoelectronic networks the area of a large data center ($10^5$ m$^2$) will be capable of system-wide information integration at 1 MHz. At frequencies of cortex-wide integration in the human brain (4 Hz, theta band), optoelectronic systems could integrate information across the surface of the earth.
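The scaling argument in this abstract can be checked to order of magnitude with a few lines of arithmetic. The sketch below assumes propagation at the vacuum speed of light (light in fiber is slower, roughly by the refractive index), treats the data center as a square, and uses an approximate Earth circumference; none of these choices are stated in the abstract, so the numbers are a sanity check and not a reproduction of the paper's calculation.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def light_travel_distance(frequency_hz: float) -> float:
    """Distance light covers in vacuum during one oscillation period, in meters."""
    return C / frequency_hz


# 1 MHz network oscillation versus a 1e5 m^2 data center (assumed square).
d_1mhz = light_travel_distance(1e6)   # about 300 m
side_datacenter = math.sqrt(1e5)      # about 316 m

# 4 Hz (theta band) versus the size of the Earth.
d_4hz = light_travel_distance(4.0)    # about 7.5e7 m
earth_circumference = 4.0075e7        # m, approximate equatorial value

print(f"1 MHz: {d_1mhz:.0f} m per period, data-center side {side_datacenter:.0f} m")
print(f"4 Hz: {d_4hz:.2e} m per period, Earth circumference {earth_circumference:.2e} m")
```

Under these assumptions the distance covered in one 1 MHz period is comparable to the side length of the data center, and the distance covered in one 4 Hz period exceeds the circumference of the Earth, consistent with the scales quoted above.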

Superconducting Optoelectronic Neurons III: Synaptic Plasticity

Jul 03, 2018

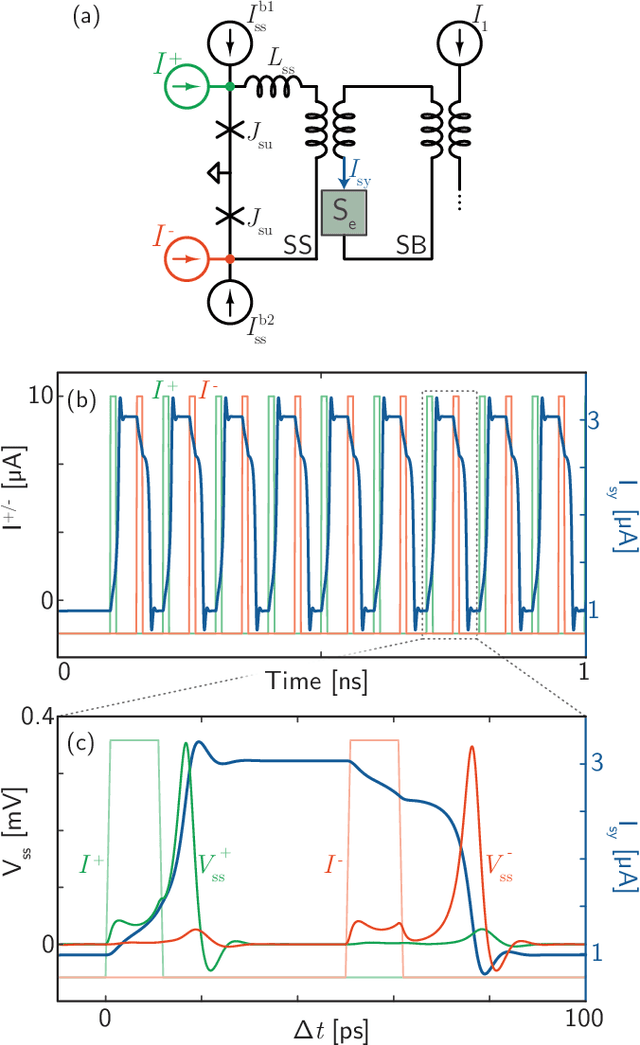

Abstract: As a means of dynamically reconfiguring the synaptic weight of a superconducting optoelectronic loop neuron, a superconducting flux storage loop is inductively coupled to the synaptic current bias of the neuron. A standard flux memory cell is used to achieve a binary synapse, and loops capable of storing many flux quanta are used to enact multi-stable synapses. Circuits are designed to implement supervised learning wherein current pulses add or remove flux from the loop to strengthen or weaken the synaptic weight. Designs are presented for circuits with hundreds of intermediate synaptic weights between minimum and maximum strengths. Circuits for implementing unsupervised learning are modeled using two photons to strengthen and two photons to weaken the synaptic weight via Hebbian and anti-Hebbian learning rules, and techniques are proposed to control the learning rate. Implementation of short-term plasticity, homeostatic plasticity, and metaplasticity in loop neurons is discussed.
