Adam N. McCaughan

Scaling of hardware-compatible perturbative training algorithms

Jan 26, 2025

Abstract: In this work, we explore the capabilities of multiplexed gradient descent (MGD), a scalable and efficient perturbative zeroth-order training method that estimates the gradient of a loss function in hardware and trains the network via stochastic gradient descent. We extend the framework to include both weight and node perturbation, and discuss the advantages and disadvantages of each approach. We investigate the time to train networks using MGD as a function of network size and task complexity. Previous research has suggested that perturbative training methods do not scale well to large problems, since in these methods the time to estimate the gradient scales linearly with the number of network parameters. However, in this work we show that the time to reach a target accuracy (that is, to actually solve the problem of interest) does not follow this undesirable linear scaling, and in fact often decreases with network size. Furthermore, we demonstrate that MGD can be used to calculate a drop-in replacement for the gradient in stochastic gradient descent, and therefore optimization accelerators such as momentum can be used alongside MGD, ensuring compatibility with existing machine learning practices. Our results indicate that MGD can efficiently train large networks on hardware, achieving accuracy comparable to backpropagation, thus presenting a practical solution for future neuromorphic computing systems.
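The idea of a perturbative, zeroth-order gradient estimate that acts as a drop-in replacement for the gradient can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the random-sign perturbation scheme, the quadratic toy loss, and all hyperparameters are assumptions chosen for illustration. All parameters are perturbed simultaneously, only the scalar change in loss is observed, and the resulting estimate is passed to an ordinary momentum update.

```python
import numpy as np

def perturbative_gradient(loss_fn, theta, eps=1e-3, n_probes=1, rng=None):
    """Zeroth-order gradient estimate from simultaneous random sign perturbations."""
    rng = np.random.default_rng() if rng is None else rng
    base_loss = loss_fn(theta)
    estimate = np.zeros_like(theta)
    for _ in range(n_probes):
        # Perturb every parameter at once by +eps or -eps (assumed scheme).
        delta = eps * rng.choice([-1.0, 1.0], size=theta.shape)
        # Only a scalar loss change is observed; no backward pass is needed.
        loss_change = loss_fn(theta + delta) - base_loss
        # Correlate the scalar change with each parameter's own perturbation.
        estimate += loss_change * delta / eps**2
    return estimate / n_probes

def train_with_momentum(loss_fn, theta, steps=4000, lr=0.005, beta=0.9, seed=0):
    """Momentum SGD in which the gradient is replaced by the perturbative estimate."""
    rng = np.random.default_rng(seed)
    velocity = np.zeros_like(theta)
    for _ in range(steps):
        grad_estimate = perturbative_gradient(loss_fn, theta, rng=rng)
        velocity = beta * velocity + grad_estimate
        theta = theta - lr * velocity
    return theta

# Toy problem (hypothetical): recover a 4-parameter target from loss values alone.
target = np.array([1.0, -2.0, 0.5, 3.0])
quadratic_loss = lambda theta: 0.5 * np.sum((theta - target) ** 2)
theta_final = train_with_momentum(quadratic_loss, np.zeros(4))
```

A single probe gives a noisy estimate whose average over many probes approaches the true gradient, which is why the optimizer can treat it like any other stochastic gradient.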

Machine Learning-powered Compact Modeling of Stochastic Electronic Devices using Mixture Density Networks

Nov 10, 2023

Abstract: The relentless pursuit of miniaturization and performance enhancement in electronic devices has led to a fundamental challenge in the field of circuit design and simulation: how to accurately account for the inherent stochastic nature of certain devices. While conventional deterministic models have served as indispensable tools for circuit designers, they fall short when it comes to capturing the subtle yet critical variability exhibited by many electronic components. In this paper, we present an innovative approach that transcends the limitations of traditional modeling techniques by harnessing the power of machine learning, specifically Mixture Density Networks (MDNs), to faithfully represent and simulate the stochastic behavior of electronic devices. We demonstrate our approach by modeling heater cryotrons, where the model is able to capture the stochastic switching dynamics observed in the experiment. Our model shows 0.82% mean absolute error for switching probability. This paper marks a significant step forward in the quest for accurate and versatile compact models, poised to drive innovation in the realm of electronic circuits.
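As a rough illustration of how a mixture density network represents a stochastic output, the sketch below treats the network's raw outputs as the parameters of a one-dimensional Gaussian mixture, evaluates the negative log-likelihood used for training, and draws a sample. The Gaussian components, the output layout (logits, means, log standard deviations), and the function names are assumptions for illustration and are not taken from the paper.

```python
import numpy as np

def _logsumexp(a, axis=-1):
    """Numerically stable log(sum(exp(a))) along one axis."""
    m = a.max(axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.exp(a - m).sum(axis=axis))

def mdn_nll(raw, y):
    """Mean negative log-likelihood of targets y under the predicted mixtures.

    raw: array of shape (batch, 3*K), laid out as [logits | means | log_sigmas]
         (an assumed layout). y: array of shape (batch,).
    """
    logits, mu, log_sigma = np.split(raw, 3, axis=-1)
    # Log of the softmax mixture weights.
    log_weights = logits - _logsumexp(logits)[:, None]
    # Log-density of y under each Gaussian component.
    z = (y[:, None] - mu) * np.exp(-log_sigma)
    log_gauss = -0.5 * z**2 - log_sigma - 0.5 * np.log(2.0 * np.pi)
    return -_logsumexp(log_weights + log_gauss).mean()

def mdn_sample(raw_row, rng):
    """Draw one sample from the mixture described by a single output row."""
    logits, mu, log_sigma = np.split(raw_row, 3)
    weights = np.exp(logits - _logsumexp(logits))
    k = rng.choice(len(weights), p=weights)  # pick a component
    return rng.normal(mu[k], np.exp(log_sigma[k]))

# Two identical unit-variance components centred at zero (hypothetical values).
raw_outputs = np.zeros((1, 6))
nll = mdn_nll(raw_outputs, np.zeros(1))
sample = mdn_sample(raw_outputs[0], np.random.default_rng(0))
```

Because the model outputs a full distribution rather than a point value, repeated sampling reproduces device-to-device or event-to-event variability in simulation.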

Multiplexed gradient descent: Fast online training of modern datasets on hardware neural networks without backpropagation

Mar 05, 2023

Abstract: We present multiplexed gradient descent (MGD), a gradient descent framework designed to easily train analog or digital neural networks in hardware. MGD utilizes zero-order optimization techniques for online training of hardware neural networks. We demonstrate its ability to train neural networks on modern machine learning datasets, including CIFAR-10 and Fashion-MNIST, and compare its performance to backpropagation. Assuming realistic timescales and hardware parameters, our results indicate that these optimization techniques can train a network on emerging hardware platforms orders of magnitude faster than the wall-clock time of training via backpropagation on a standard GPU, even in the presence of imperfect weight updates or device-to-device variations in the hardware. We additionally describe how MGD can be applied to existing hardware as part of chip-in-the-loop training, or integrated directly at the hardware level. Crucially, the MGD framework is highly flexible, and its gradient descent process can be optimized to compensate for specific hardware limitations such as slow parameter-update speeds or limited input bandwidth.

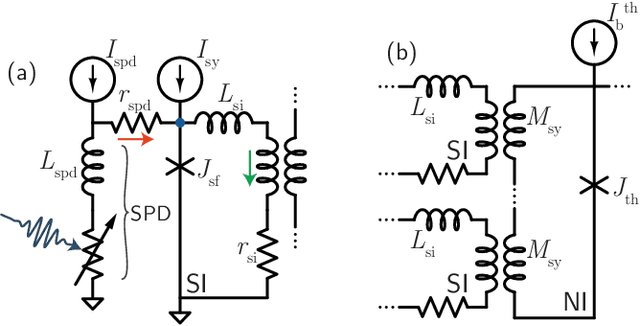

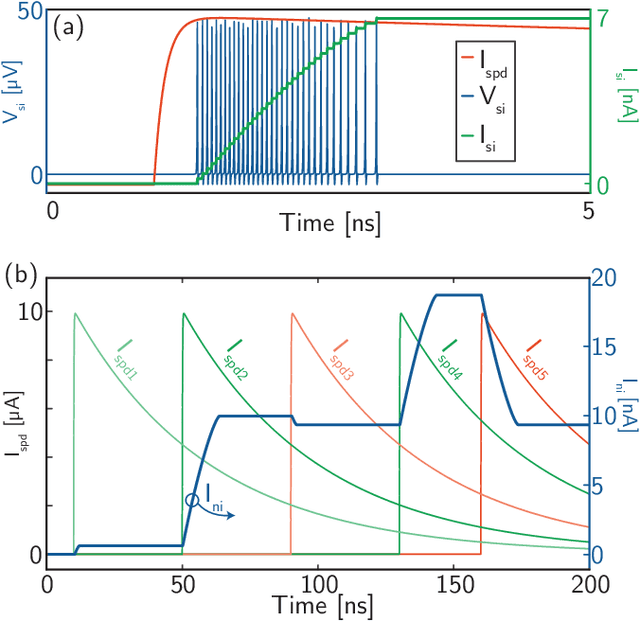

Demonstration of Superconducting Optoelectronic Single-Photon Synapses

Apr 20, 2022

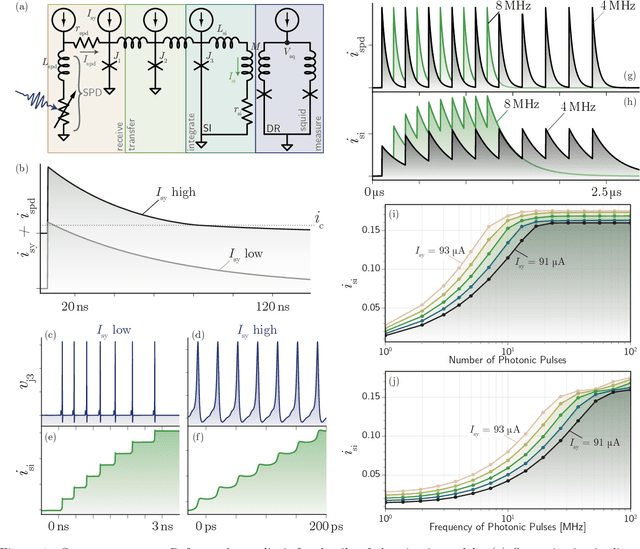

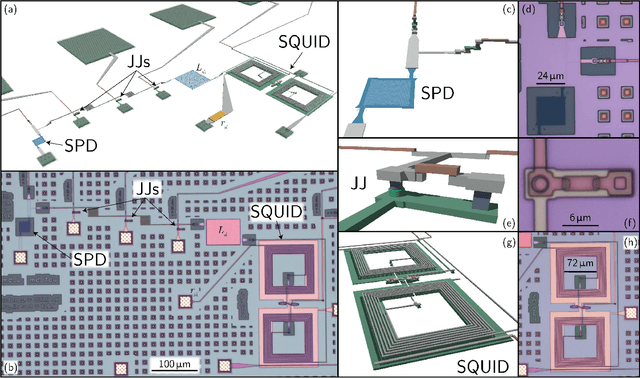

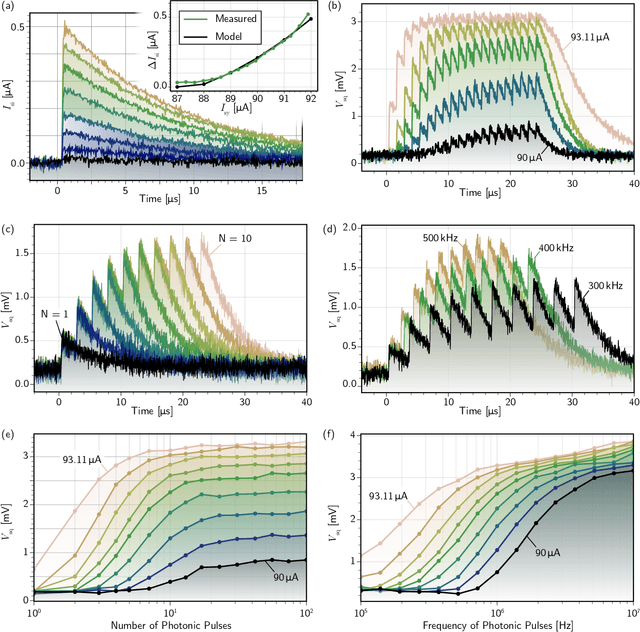

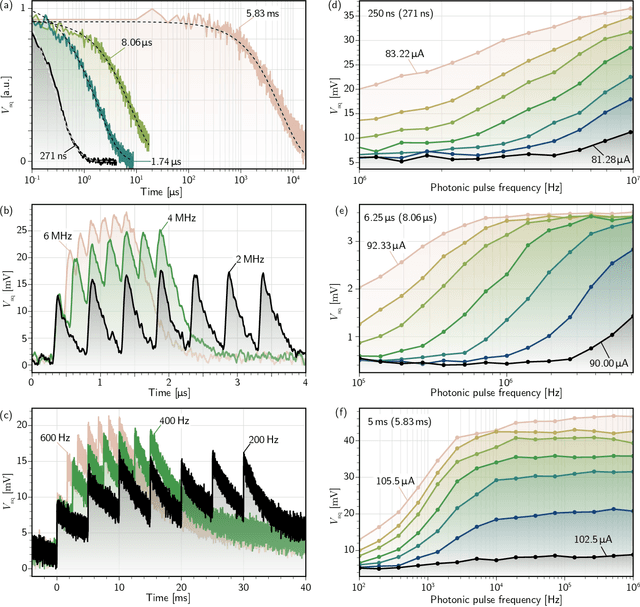

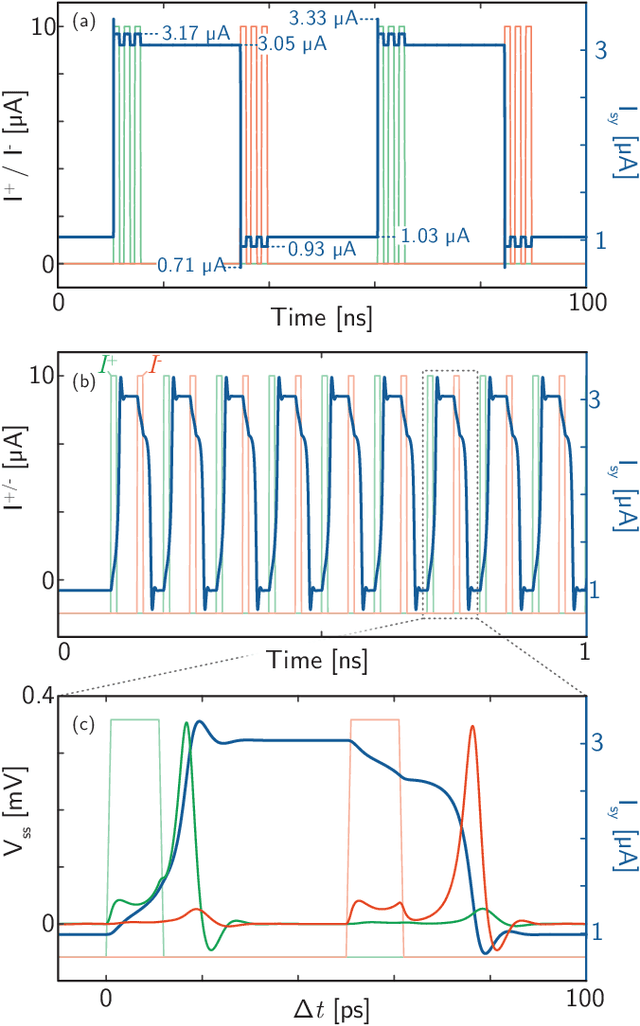

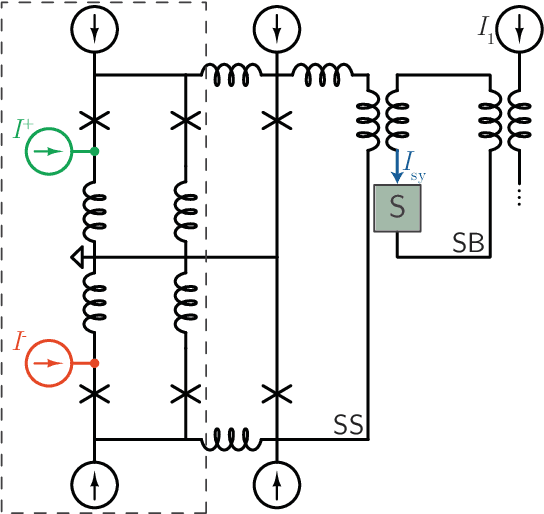

Abstract: Superconducting optoelectronic hardware is being explored as a path towards artificial spiking neural networks with unprecedented scales of complexity and computational ability. Such hardware combines integrated-photonic components for few-photon, light-speed communication with superconducting circuits for fast, energy-efficient computation. Monolithic integration of superconducting and photonic devices is necessary for the scaling of this technology. In the present work, superconducting-nanowire single-photon detectors are monolithically integrated with Josephson junctions for the first time, enabling the realization of superconducting optoelectronic synapses. We present circuits that perform analog weighting and temporal leaky integration of single-photon presynaptic signals. Synaptic weighting is implemented in the electronic domain so that binary, single-photon communication can be maintained. Records of recent synaptic activity are locally stored as current in superconducting loops. Dendritic and neuronal nonlinearities are implemented with a second stage of Josephson circuitry. The hardware presents great design flexibility, with demonstrated synaptic time constants spanning four orders of magnitude (hundreds of nanoseconds to milliseconds). The synapses are responsive to presynaptic spike rates exceeding 10 MHz and consume approximately 33 aJ of dynamic energy per synapse event before accounting for cooling. In addition to neuromorphic hardware, these circuits introduce new avenues towards realizing large-scale single-photon-detector arrays for diverse imaging, sensing, and quantum communication applications.
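The combination of analog weighting and temporal leaky integration can be pictured with an idealized model in which each detected photon adds a fixed increment to a stored signal that then decays exponentially. The exponential kernel, the function name, and the example values below are assumptions for illustration and do not describe the measured circuit response.

```python
import numpy as np

def synaptic_signal(event_times, t_eval, weight=1.0, tau=1e-6):
    """Idealized leaky integrator: sum of weighted, exponentially decaying events.

    event_times: photon-detection times in seconds.
    t_eval:      times at which to evaluate the stored signal, in seconds.
    weight:      increment added per detected photon (the synaptic weight).
    tau:         decay time constant in seconds (assumed example value).
    """
    event_times = np.asarray(event_times, dtype=float)
    t_eval = np.asarray(t_eval, dtype=float)
    # Time elapsed since each event, for every evaluation time.
    elapsed = t_eval[:, None] - event_times[None, :]
    # Events that have not happened yet contribute nothing.
    causal = elapsed >= 0.0
    decay = np.exp(-np.where(causal, elapsed, 0.0) / tau)
    return weight * (decay * causal).sum(axis=1)

# Three presynaptic events 100 ns apart, evaluated over 5 microseconds.
times = np.linspace(0.0, 5e-6, 501)
signal = synaptic_signal([0.0, 1e-7, 2e-7], times, weight=0.5, tau=1e-6)
```

In this picture a longer time constant lets closely spaced events accumulate, while a shorter one makes the synapse respond mainly to coincident events.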

Circuit designs for superconducting optoelectronic loop neurons

Sep 07, 2018

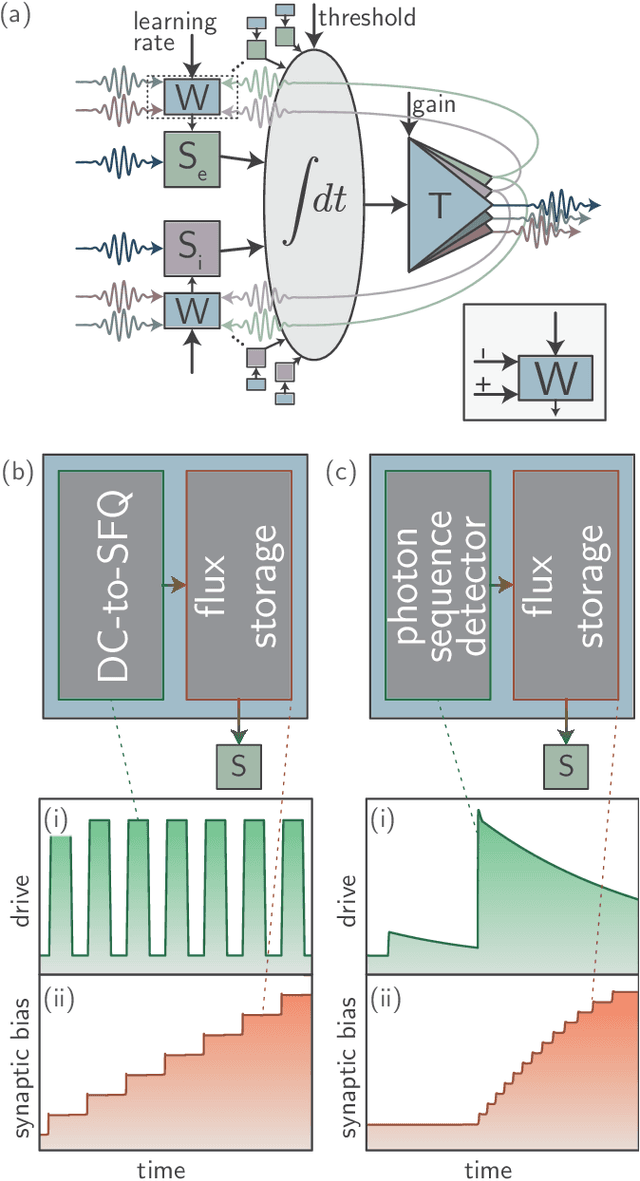

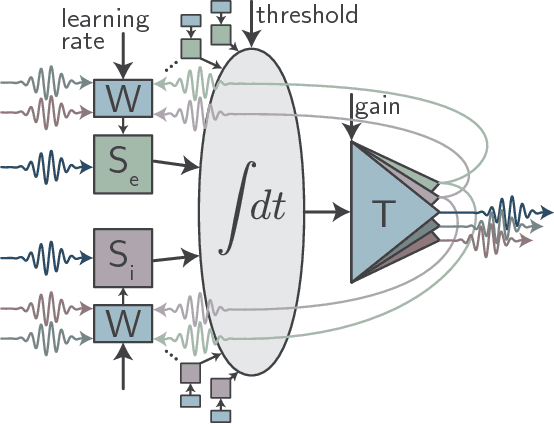

Abstract: Optical communication achieves high fanout and short delay advantageous for information integration in neural systems. Superconducting detectors enable signaling with single photons for maximal energy efficiency. We present designs of superconducting optoelectronic neurons based on superconducting single-photon detectors, Josephson junctions, semiconductor light sources, and multi-planar dielectric waveguides. These circuits achieve complex synaptic and neuronal functions with high energy efficiency, leveraging the strengths of light for communication and superconducting electronics for computation. The neurons send few-photon signals to synaptic connections. These signals communicate neuronal firing events as well as update synaptic weights. Spike-timing-dependent plasticity is implemented with a single photon triggering each step of the process. Microscale light-emitting diodes and waveguide networks enable connectivity from a neuron to thousands of synaptic connections, and the use of light for communication enables synchronization of neurons across an area limited only by the distance light can travel within the period of a network oscillation. Experimentally, each of the requisite circuit elements has been demonstrated, yet a hardware platform combining them all has not been attempted. Compared to digital logic or quantum computing, device tolerances are relaxed. For this neural application, optical sources providing incoherent pulses with 10,000 photons produced with efficiency of $10^{-3}$ operating at 20 MHz at 4.2 K are sufficient to enable a massively scalable neural computing platform with connectivity comparable to the brain and thirty thousand times higher speed.

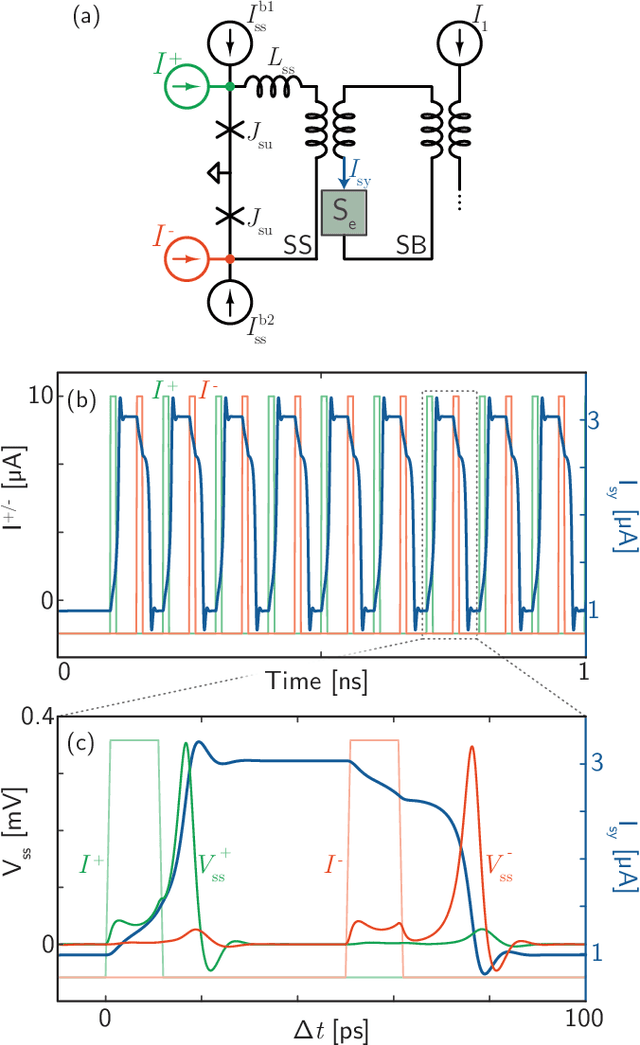

Superconducting Optoelectronic Neurons III: Synaptic Plasticity

Jul 03, 2018

Abstract: As a means of dynamically reconfiguring the synaptic weight of a superconducting optoelectronic loop neuron, a superconducting flux storage loop is inductively coupled to the synaptic current bias of the neuron. A standard flux memory cell is used to achieve a binary synapse, and loops capable of storing many flux quanta are used to enact multi-stable synapses. Circuits are designed to implement supervised learning wherein current pulses add or remove flux from the loop to strengthen or weaken the synaptic weight. Designs are presented for circuits with hundreds of intermediate synaptic weights between minimum and maximum strengths. Circuits for implementing unsupervised learning are modeled using two photons to strengthen and two photons to weaken the synaptic weight via Hebbian and anti-Hebbian learning rules, and techniques are proposed to control the learning rate. Implementation of short-term plasticity, homeostatic plasticity, and metaplasticity in loop neurons is discussed.

Superconducting Optoelectronic Neurons I: General Principles

May 24, 2018

Abstract: The design of neural hardware is informed by the prominence of differentiated processing and information integration in cognitive systems. The central role of communication leads to the principal assumption of the hardware platform: signals between neurons should be optical to enable fanout and communication with minimal delay. The requirement of energy efficiency leads to the utilization of superconducting detectors to receive single-photon signals. We discuss the potential of superconducting optoelectronic hardware to achieve the spatial and temporal information integration advantageous for cognitive processing, and we consider physical scaling limits based on light-speed communication. We introduce the superconducting optoelectronic neurons and networks that are the subject of the subsequent papers in this series.

Superconducting Optoelectronic Neurons V: Networks and Scaling

May 15, 2018

Abstract: Networks of superconducting optoelectronic neurons are investigated for their near-term technological potential and long-term physical limitations. Networks with short average path length, high clustering coefficient, and power-law degree distribution are designed using a growth model that assigns connections between new and existing nodes based on spatial distance as well as degree of existing nodes. The network construction algorithm is scalable to arbitrary levels of network hierarchy and achieves systems with fractal spatial properties and efficient wiring. By modeling the physical size of superconducting optoelectronic neurons, we calculate the area of these networks. A system with 8100 neurons and 330,430 total synapses will fit on a 1 cm $\times$ 1 cm die. Systems of millions of neurons with hundreds of millions of synapses will fit on a 300 mm wafer. For multi-wafer assemblies, communication at light speed enables a neuronal pool the size of a large data center comprising 100 trillion neurons with coherent oscillations at 1 MHz. Assuming a power law frequency distribution, as is necessary for self-organized criticality, we calculate the power consumption of the networks. We find the use of single photons for communication and superconducting circuits for computation leads to power density low enough to be cooled by liquid $^4$He for networks of any scale.
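Two of the figures quoted in this abstract lend themselves to a quick back-of-the-envelope check: the average number of synapses per neuron on the 1 cm die, and the distance light covers during one period of a 1 MHz oscillation, which gives a rough upper bound on the extent of a synchronized pool. The helper names and the refractive index used for a guided-wave estimate are assumptions for illustration; the abstract does not state how its own limits were derived.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact by definition of the metre

def synapses_per_neuron(n_synapses, n_neurons):
    """Average number of synapses per neuron."""
    return n_synapses / n_neurons

def light_travel_distance_m(frequency_hz, refractive_index=1.0):
    """Distance light travels during one oscillation period, in metres."""
    return SPEED_OF_LIGHT_M_PER_S / (refractive_index * frequency_hz)

# Die-scale system quoted in the abstract: 8100 neurons, 330,430 synapses.
fanout = synapses_per_neuron(330_430, 8_100)       # about 40.8

# One period of a 1 MHz oscillation, in vacuum.
reach_vacuum_m = light_travel_distance_m(1e6)      # about 299.8 m

# Same estimate in a guiding medium; index 1.5 is an assumed, fiber-like value.
reach_guided_m = light_travel_distance_m(1e6, refractive_index=1.5)
```

A reach of a few hundred metres per period is consistent in scale with the abstract's picture of a neuronal pool the size of a large data center.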

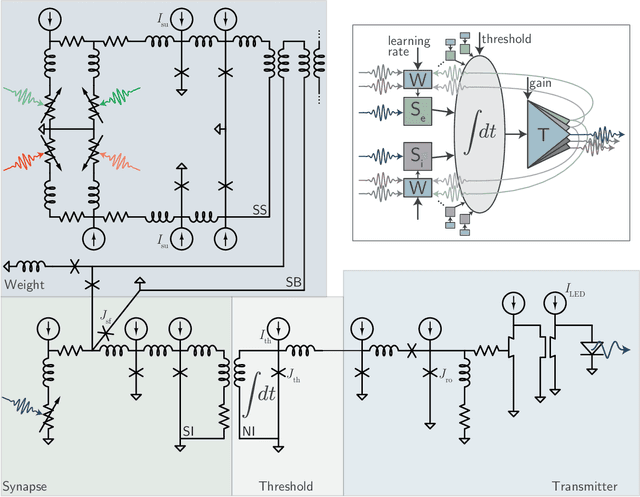

Superconducting Optoelectronic Neurons II: Receiver Circuits

May 15, 2018

Abstract: Circuits using superconducting single-photon detectors and Josephson junctions to perform signal reception, synaptic weighting, and integration are investigated. The circuits convert photon-detection events into flux quanta, the number of which is determined by the synaptic weight. The current from many synaptic connections is inductively coupled to a superconducting loop that implements the neuronal threshold operation. Designs are presented for synapses and neurons that perform integration as well as detect coincidence events for temporal coding. Both excitatory and inhibitory connections are demonstrated. It is shown that a neuron with a single integration loop can receive input from 1000 such synaptic connections, and neurons of similar design could employ many loops for dendritic processing.

Superconducting Optoelectronic Neurons IV: Transmitter Circuits

May 08, 2018

Abstract: A superconducting optoelectronic neuron will produce a small current pulse upon reaching threshold. We present an amplifier chain that converts this small current pulse to a voltage pulse sufficient to produce light from a semiconductor diode. This light is the signal used to communicate between neurons in the network. The amplifier chain comprises a thresholding Josephson junction, a relaxation oscillator Josephson junction, a superconducting thin-film current-gated current amplifier, and a superconducting thin-film current-gated voltage amplifier. We analyze the performance of the elements in the amplifier chain in the time domain to calculate the energy consumption per photon created for several values of light-emitting diode capacitance and efficiency. The speed of the amplification sequence allows neuronal firing up to at least 20 MHz with power density low enough to be cooled easily with standard $^4$He cryogenic systems operating at 4.2 K.
