Jeffrey A. Ruffolo

Conditional Enzyme Generation Using Protein Language Models with Adapters

Oct 04, 2024

Abstract: The conditional generation of proteins with desired functions and/or properties is a key goal for generative models. Existing methods based on prompting of language models can generate proteins conditioned on a target functionality, such as a desired enzyme family. However, these methods are limited to simple, tokenized conditioning and have not been shown to generalize to unseen functions. In this study, we propose ProCALM (Protein Conditionally Adapted Language Model), an approach for the conditional generation of proteins using adapters to protein language models. Our specific implementation of ProCALM involves finetuning ProGen2 to incorporate conditioning representations of enzyme function and taxonomy. ProCALM matches existing methods at conditionally generating sequences from target enzyme families. Impressively, it can also generate within the joint distribution of enzymatic function and taxonomy, and it can generalize to rare and unseen enzyme families and taxonomies. Overall, ProCALM is a flexible and computationally efficient approach, and we expect that it can be extended to a wide range of generative language models.

Deciphering antibody affinity maturation with language models and weakly supervised learning

Dec 14, 2021

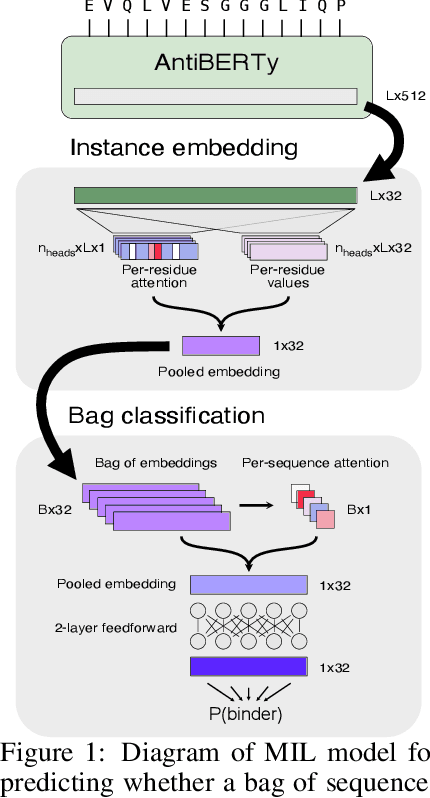

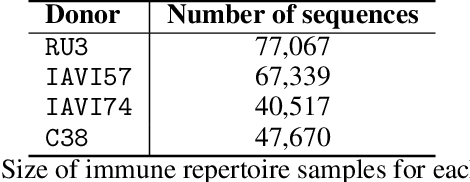

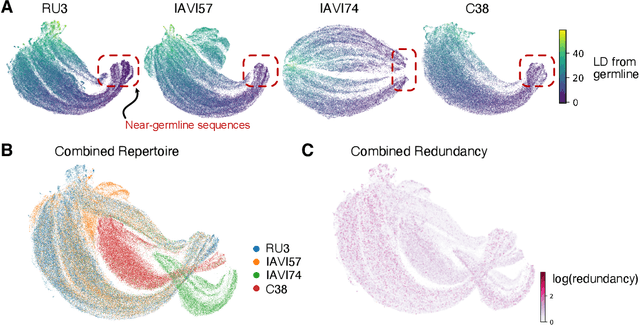

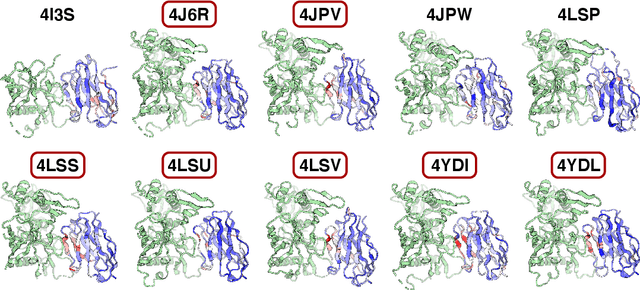

Abstract: In response to pathogens, the adaptive immune system generates specific antibodies that bind and neutralize foreign antigens. Understanding the composition of an individual's immune repertoire can provide insights into this process and reveal potential therapeutic antibodies. In this work, we explore the application of antibody-specific language models to aid understanding of immune repertoires. We introduce AntiBERTy, a language model trained on 558M natural antibody sequences. We find that within repertoires, our model clusters antibodies into trajectories resembling affinity maturation. Importantly, we show that models trained to predict highly redundant sequences under a multiple instance learning framework identify key binding residues in the process. With further development, the methods presented here will provide new insights into antigen binding from repertoire sequences alone.
