Jedrzej Kozerawski

Taming the Long Tail of Deep Probabilistic Forecasting

Mar 02, 2022

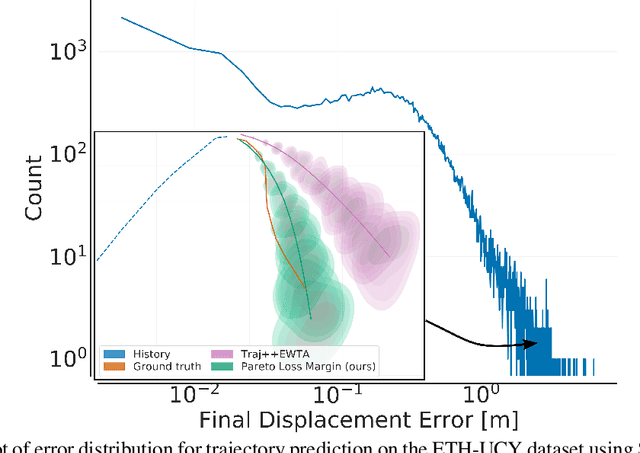

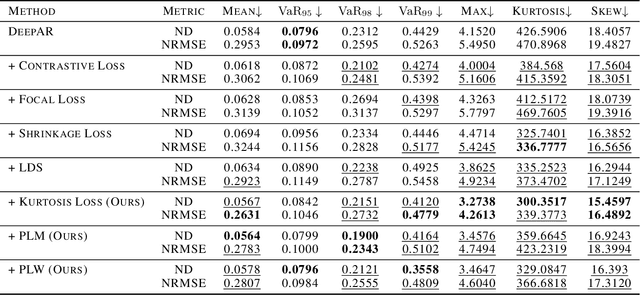

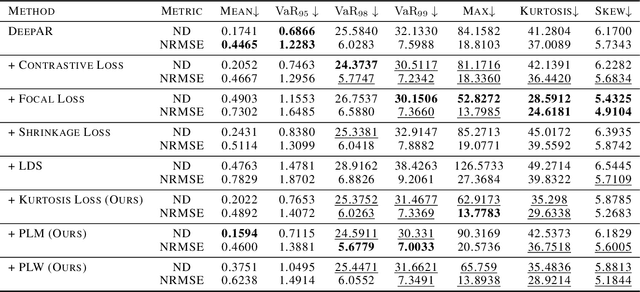

Abstract: Deep probabilistic forecasting is gaining attention in numerous applications, ranging from weather prognosis, through electricity consumption estimation, to autonomous vehicle trajectory prediction. However, existing approaches focus on improving the most common scenarios without addressing performance on rare and difficult cases. In this work, we identify long-tail behavior in the performance of state-of-the-art deep learning methods on probabilistic forecasting. We present two moment-based tailedness measurement concepts to improve performance on the difficult tail examples: Pareto Loss and Kurtosis Loss. Kurtosis Loss is a symmetric measure based on the fourth moment about the mean of the loss distribution. Pareto Loss is an asymmetric measure of right-tailedness that models the loss with a generalized Pareto distribution (GPD). We demonstrate the performance of our approach on several real-world datasets, including time series and spatiotemporal trajectories, achieving significant improvements on the tail examples.
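To make the idea of a kurtosis-based tailedness measure concrete, here is a minimal sketch in plain Python. It is not the paper's implementation: the function names, the standardization by the squared variance, the additive combination with the mean loss, and the `weight` parameter are all assumptions made for illustration.

```python
from statistics import fmean


def kurtosis(losses):
    """Fourth moment about the mean of per-example losses, divided by the
    squared variance (standardization is an assumption of this sketch)."""
    mu = fmean(losses)
    var = fmean([(x - mu) ** 2 for x in losses])
    if var == 0.0:
        return 0.0  # all losses identical: no tail to measure
    fourth = fmean([(x - mu) ** 4 for x in losses])
    return fourth / var ** 2


def tail_regularized_loss(losses, weight=0.1):
    """Hypothetical objective: mean loss plus a weighted kurtosis penalty.
    Both the additive form and `weight` are illustrative assumptions."""
    return fmean(losses) + weight * kurtosis(losses)


# A batch with one very hard example has a heavier tail than an evenly
# spread batch, so it receives a larger kurtosis penalty.
heavy_tail = [1.0] * 9 + [10.0]
even_spread = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
print(kurtosis(heavy_tail) > kurtosis(even_spread))
```

Because the fourth moment treats deviations on both sides of the mean alike, this measure is symmetric, which matches the abstract's contrast with the right-tail-only Pareto Loss.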

One-Class Meta-Learning: Towards Generalizable Few-Shot Open-Set Classification

Sep 14, 2021

Abstract: Real-world classification tasks are frequently required to work in an open-set setting. This is especially challenging for few-shot learning problems due to the small sample size for each known category, which prevents existing open-set methods from working effectively; however, most multiclass few-shot methods are limited to closed-set scenarios. In this work, we address the problem of few-shot open-set classification by first proposing methods for few-shot one-class classification and then extending them to few-shot multiclass open-set classification. We introduce two independent few-shot one-class classification methods: Meta Binary Cross-Entropy (Meta-BCE), which learns a separate feature representation for one-class classification, and One-Class Meta-Learning (OCML), which learns to generate one-class classifiers given a standard multiclass feature representation. Both methods can augment any existing few-shot learning method, without retraining, so that it works in a few-shot multiclass open-set setting without degrading its closed-set performance. We demonstrate the benefits and drawbacks of both methods in different problem settings and evaluate them on three standard benchmark datasets, miniImageNet, tieredImageNet, and Caltech-UCSD-Birds-200-2011, where they surpass the state-of-the-art methods in the few-shot multiclass open-set and few-shot one-class tasks.
