Jean-Pierre Wigneron

SERA-H: Beyond Native Sentinel Spatial Limits for High-Resolution Canopy Height Mapping

Dec 19, 2025

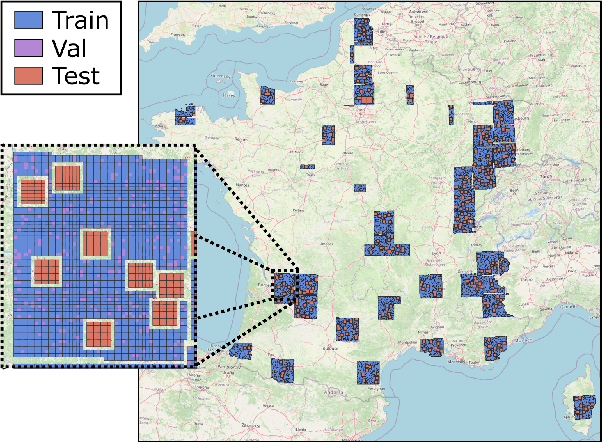

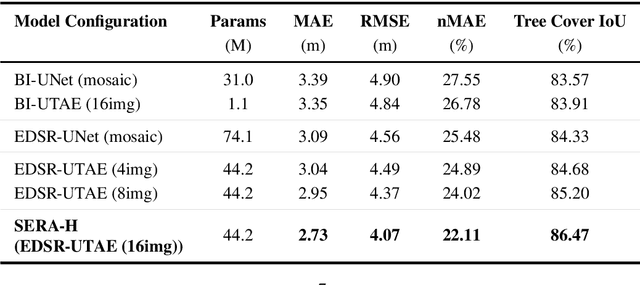

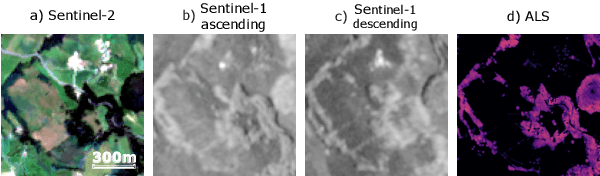

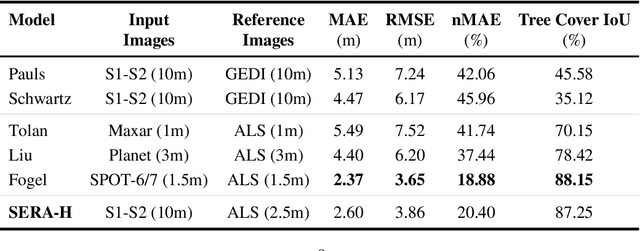

Abstract: High-resolution mapping of canopy height is essential for forest management and biodiversity monitoring. Although recent studies have led to the advent of deep learning methods using satellite imagery to predict height maps, these approaches often face a trade-off between data accessibility and spatial resolution. To overcome these limitations, we present SERA-H, an end-to-end model combining a super-resolution module (EDSR) and temporal attention encoding (UTAE). Trained under the supervision of high-density LiDAR data (ALS), our model generates 2.5 m resolution height maps from freely available Sentinel-1 and Sentinel-2 (10 m) time series data. Evaluated on an open-source benchmark dataset in France, SERA-H, with a MAE of 2.6 m and a coefficient of determination of 0.82, not only outperforms standard Sentinel-1/2 baselines but also achieves performance comparable to or better than methods relying on commercial very high-resolution imagery (SPOT-6/7, PlanetScope, Maxar). These results demonstrate that combining high-resolution supervision with the spatiotemporal information embedded in time series enables the reconstruction of details beyond the input sensors' native resolution. SERA-H opens the possibility of freely mapping forests with high revisit frequency, achieving accuracy comparable to that of costly commercial imagery. The source code is available at https://github.com/ThomasBoudras/SERA-H
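The two scores quoted in this abstract, mean absolute error (MAE) and the coefficient of determination (R²), can be illustrated with a minimal sketch. This is not the authors' evaluation code: the function names and the five sample heights below are hypothetical, chosen only to show how the two metrics are computed from paired reference and predicted heights.

```python
def mean_absolute_error(reference, predicted):
    """Average absolute difference between reference and predicted heights (m)."""
    return sum(abs(r - p) for r, p in zip(reference, predicted)) / len(reference)


def r_squared(reference, predicted):
    """Coefficient of determination: 1 - residual sum of squares / total sum of squares."""
    mean_ref = sum(reference) / len(reference)
    ss_res = sum((r - p) ** 2 for r, p in zip(reference, predicted))
    ss_tot = sum((r - mean_ref) ** 2 for r in reference)
    return 1.0 - ss_res / ss_tot


# Hypothetical canopy heights in metres (illustrative values, not from the paper).
reference_heights = [0.0, 5.0, 10.0, 20.0, 30.0]
predicted_heights = [1.0, 4.0, 12.0, 18.0, 29.0]

mae_value = mean_absolute_error(reference_heights, predicted_heights)
r2_value = r_squared(reference_heights, predicted_heights)
print(f"MAE = {mae_value:.2f} m, R2 = {r2_value:.3f}")
```

In practice both metrics would be computed over every valid pixel of the predicted and LiDAR-derived height maps rather than over a short list.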

Vision Transformers, a new approach for high-resolution and large-scale mapping of canopy heights

Apr 22, 2023

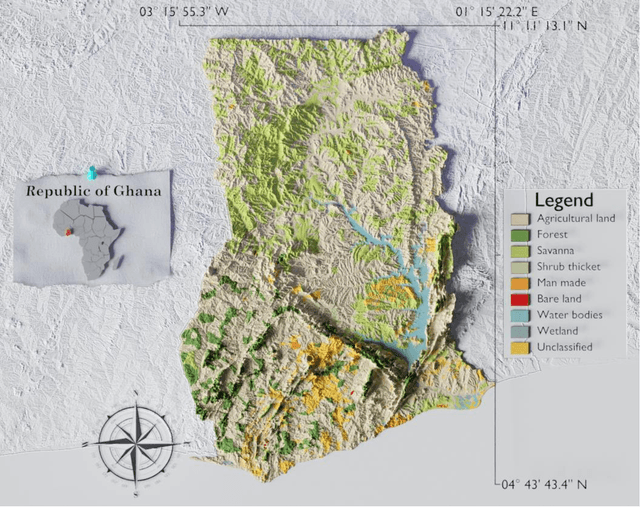

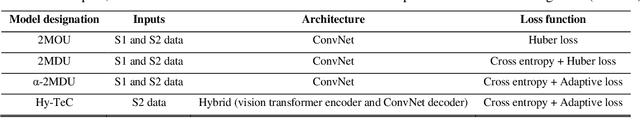

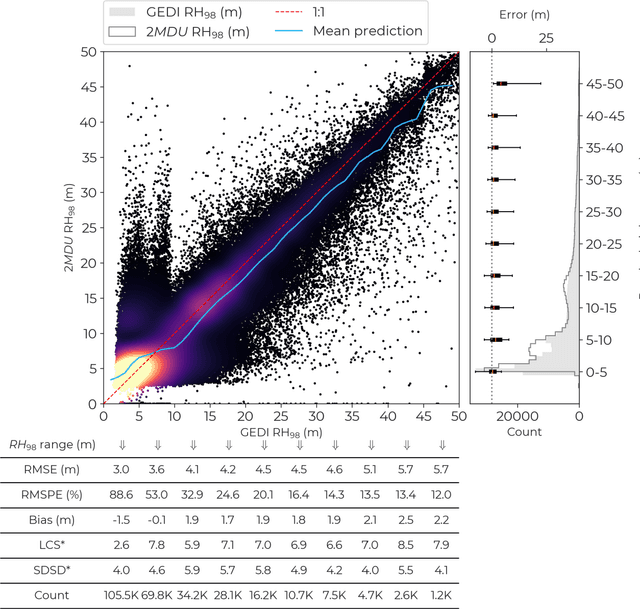

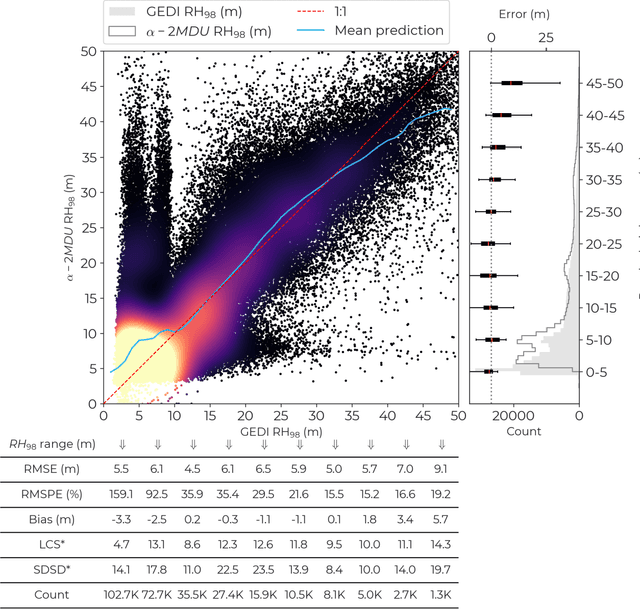

Abstract: Accurate and timely monitoring of forest canopy heights is critical for assessing forest dynamics, biodiversity, and carbon sequestration, as well as forest degradation and deforestation. Recent advances in deep learning techniques, coupled with the vast amount of spaceborne remote sensing data, offer an unprecedented opportunity to map canopy height at high spatial and temporal resolutions. Current techniques for wall-to-wall canopy height mapping correlate remotely sensed 2D information from optical and radar sensors to the vertical structure of trees using LiDAR measurements. While studies using deep learning algorithms have shown promising performance for the accurate mapping of canopy heights, they have limitations due to the type of architectures and loss functions employed. Moreover, mapping canopy heights over tropical forests remains poorly studied, and the accurate height estimation of tall canopies is a challenge due to signal saturation from optical and radar sensors, persistent cloud cover, and sometimes the limited penetration capabilities of LiDARs. Here, we map heights at 10 m resolution across the diverse landscape of Ghana with a new vision transformer (ViT) model optimized concurrently with a classification (discrete) and a regression (continuous) loss function. This model achieves better accuracy than previously used convolution-based approaches (ConvNets) optimized with only a continuous loss function. The ViT model results show that our proposed discrete/continuous loss significantly increases the sensitivity for very tall trees (i.e., > 35 m), for which other approaches show saturation effects. The height maps generated by the ViT also have better ground sampling distance and better sensitivity to sparse vegetation in comparison to a convolutional model. Our ViT model has an RMSE of 3.12 m in comparison to a reference dataset, while the ConvNet model has an RMSE of 4.3 m.
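The idea of optimizing a classification (discrete) and a regression (continuous) loss together can be sketched for a single pixel as follows. The abstract does not specify the bin width, the number of height classes, the form of either loss term, or their relative weighting, so everything here is an assumption made for illustration: 5 m bins, a softmax cross-entropy term, an L1 regression term, and a hypothetical `weight` parameter.

```python
import math


def height_to_bin(height_m, bin_width_m=5.0, n_bins=10):
    """Map a height to a class index; heights beyond the last bin are clipped (assumed binning)."""
    index = int(height_m // bin_width_m)
    return max(0, min(index, n_bins - 1))


def cross_entropy(logits, target_bin):
    """Softmax cross-entropy for one pixel, using the log-sum-exp trick for stability."""
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(sum(math.exp(z - max_logit) for z in logits))
    return log_sum_exp - logits[target_bin]


def joint_loss(logits, predicted_height_m, true_height_m, bin_width_m=5.0, weight=1.0):
    """Discrete term (cross-entropy on height bins) plus weighted continuous term (L1)."""
    target_bin = height_to_bin(true_height_m, bin_width_m, len(logits))
    classification_term = cross_entropy(logits, target_bin)
    regression_term = abs(predicted_height_m - true_height_m)
    return classification_term + weight * regression_term


# One hypothetical pixel: uninformative class scores, regression head off by 2 m.
example_loss = joint_loss([0.0] * 10, predicted_height_m=38.0, true_height_m=40.0)
print(f"joint loss = {example_loss:.3f}")
```

One motivation for a discrete term of this kind is that tall-tree bins receive their own class signal, so errors on rare tall canopies are not averaged away as easily as in a purely continuous loss; whether this matches the authors' exact formulation is not stated in the abstract.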

High-resolution canopy height map in the Landes forest based on GEDI, Sentinel-1, and Sentinel-2 data with a deep learning approach

Dec 20, 2022

Abstract: In intensively managed forests in Europe, where forests are divided into stands of small size and may show heterogeneity within stands, a high spatial resolution (10-20 meters) is arguably needed to capture the differences in canopy height. In this work, we developed a deep learning model based on multi-stream remote sensing measurements to create a high-resolution canopy height map over the "Landes de Gascogne" forest in France, a large maritime pine plantation of 13,000 km$^2$ with flat terrain and intensive management. This area is characterized by even-aged and mono-specific stands, of a typical length of a few hundred meters, harvested every 35 to 50 years. Our deep learning U-Net model uses multi-band images from Sentinel-1 and Sentinel-2 with composite time averages as input to predict tree height derived from GEDI waveforms. The evaluation is performed with external validation data from forest inventory plots and a stereo 3D reconstruction model based on Skysat imagery available at specific locations. We trained seven different U-Net models based on a combination of Sentinel-1 and Sentinel-2 bands to evaluate the importance of each instrument in the dominant height retrieval. The model outputs allow us to generate a 10 m resolution canopy height map of the whole "Landes de Gascogne" forest area for 2020 with a mean absolute error of 2.02 m on the test dataset. The best predictions were obtained using all available satellite layers from Sentinel-1 and Sentinel-2, but using only one satellite source also provided good predictions. For all validation datasets in coniferous forests, our model showed better metrics than previous canopy height models available in the same region.
