Jean-Christophe Dubois

DRUID

Evidential uncertainties on rich labels for active learning

Sep 21, 2023

Abstract: Recent research in active learning, and more precisely in uncertainty sampling, has focused on the decomposition of model uncertainty into reducible and irreducible uncertainties. In this paper, we propose to simplify the computational phase and remove the dependence on observations, but more importantly to take into account the uncertainty already present in the labels, i.e. the uncertainty of the oracles. Two strategies are proposed: sampling by Klir uncertainty, which addresses the exploration-exploitation problem, and sampling by evidential epistemic uncertainty, which extends the reducible uncertainty to the evidential framework. Both rely on the theory of belief functions.
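
The abstract does not spell out how Klir uncertainty is computed. As a rough illustration only, the sketch below assumes one common formulation: non-specificity (a generalized Hartley measure) plus a discord term based on the pignistic probability, mixed by a weight `lam`. The function names, the weight `lam`, and the choice of discord measure are assumptions made for this example, not details taken from the paper.

```python
from math import log2


def pignistic(mass):
    """Pignistic probability of each singleton: BetP(x) = sum of m(A)/|A| over A containing x."""
    betp = {}
    for focal, m in mass.items():
        for x in focal:
            betp[x] = betp.get(x, 0.0) + m / len(focal)
    return betp


def non_specificity(mass):
    """Imprecision of the label: N(m) = sum of m(A) * log2(|A|)."""
    return sum(m * log2(len(focal)) for focal, m in mass.items() if m > 0)


def discord(mass):
    """Conflict inside the label (assumed form): D(m) = -sum of m(A) * log2(BetP(A))."""
    betp = pignistic(mass)
    total = 0.0
    for focal, m in mass.items():
        if m > 0:
            total -= m * log2(sum(betp[x] for x in focal))
    return total


def klir_uncertainty(mass, lam=0.5):
    """Hypothetical mix of the two terms; `lam` trades non-specificity against discord."""
    return lam * non_specificity(mass) + (1.0 - lam) * discord(mass)
```

A mass function is represented here as a dictionary from non-empty `frozenset` focal elements to masses summing to 1. Under these assumptions, a fully imprecise label on two classes, `{frozenset({"a", "b"}): 1.0}`, has non-specificity 1 and discord 0, while an even split between the two singletons has non-specificity 0 and discord 1.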

Estimation of the qualification and behavior of a contributor and aggregation of his answers in a crowdsourcing context

Mar 08, 2023

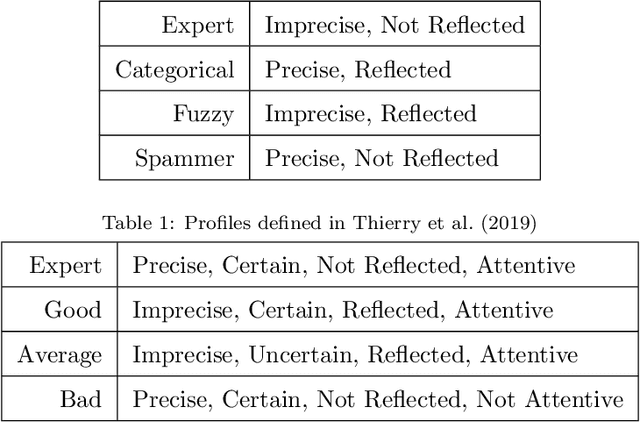

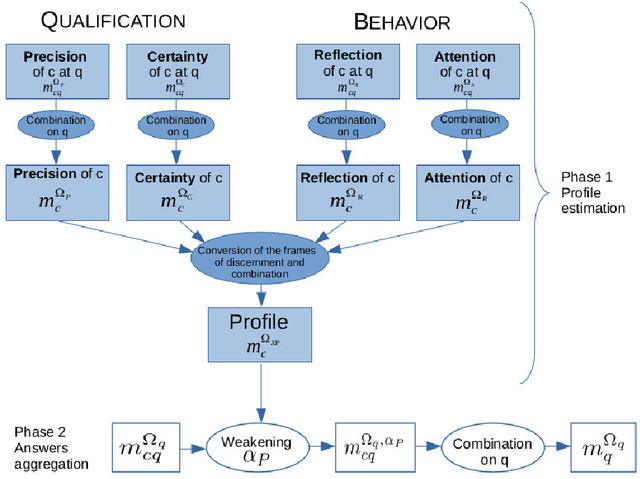

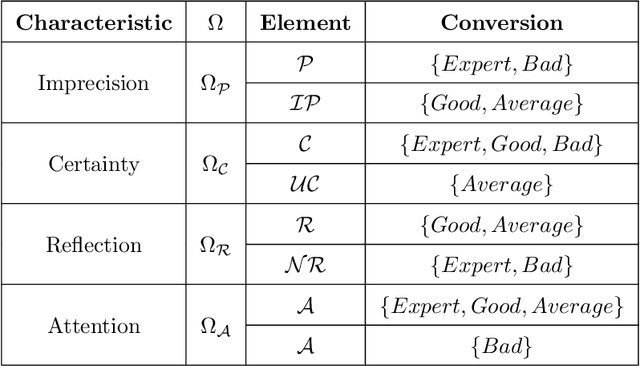

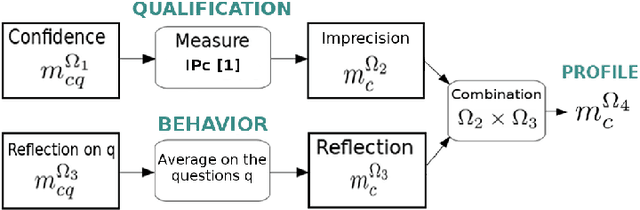

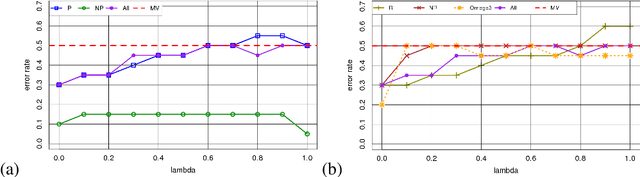

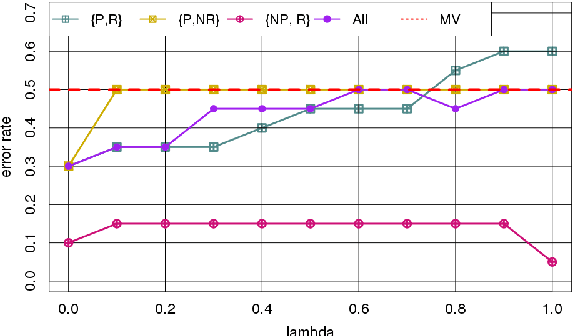

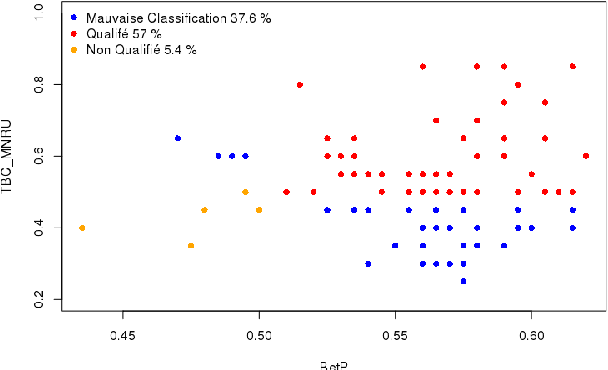

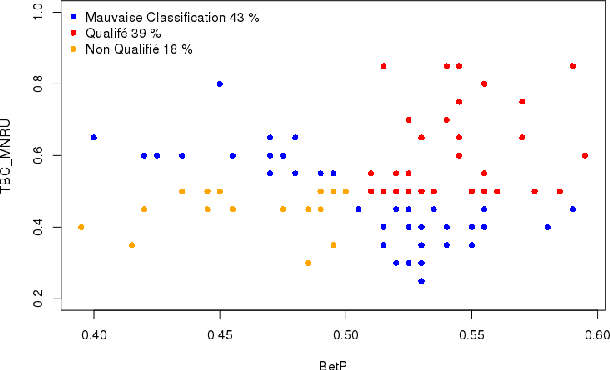

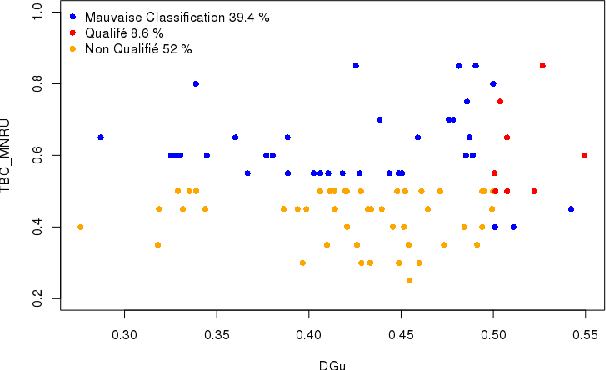

Abstract: Crowdsourcing is the outsourcing of tasks to a crowd of contributors on a dedicated platform. The crowd on these platforms is very diverse and includes various contributor profiles, which generates data of uneven quality. However, majority voting, the aggregation method commonly used on platforms, gives equal weight to each contribution. To overcome this problem, we propose a method, MONITOR, which estimates the contributor's profile and aggregates the collected data while taking into account their possible imperfections, thanks to the theory of belief functions. To do so, MONITOR starts by estimating the profile of the contributor through his qualification for the task and his behavior. Crowdsourcing campaigns have been carried out to collect the data needed to test MONITOR on real data and compare it to existing approaches. The results of the experiments show that MONITOR yields a better rate of correct answers after aggregation of the contributions than majority voting. Our contributions in this article are, first, a model that takes into account both the qualification of the contributor and his behavior when estimating his profile and, second, the weakening and aggregation of the answers according to the estimated profiles.
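
To make the contrast with majority voting concrete, here is a minimal sketch of weakening and aggregation in the theory of belief functions. It assumes classical Shafer discounting followed by Dempster's rule of combination; the abstract does not say which operators MONITOR actually uses, and the reliability values, function names, and example answers below are hypothetical.

```python
from collections import Counter
from functools import reduce
from itertools import product


def majority_vote(answers):
    """Baseline: every contribution counts equally."""
    return Counter(answers).most_common(1)[0][0]


def discount(mass, reliability, frame):
    """Shafer discounting: keep `reliability` of each mass, move the rest to the whole frame."""
    out = {focal: reliability * m for focal, m in mass.items() if focal != frame}
    out[frame] = reliability * mass.get(frame, 0.0) + (1.0 - reliability)
    return out


def dempster(m1, m2):
    """Dempster's rule: multiply masses on intersecting focal sets, then renormalize."""
    combined, conflict = {}, 0.0
    for (a, ma), (b, mb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + ma * mb
        else:
            conflict += ma * mb  # mass that would fall on the empty set
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict")
    return {focal: m / (1.0 - conflict) for focal, m in combined.items()}


def aggregate(answers, reliabilities, frame):
    """Turn each answer into a discounted mass function and combine them all."""
    masses = [
        discount({frozenset({answer}): 1.0}, reliability, frame)
        for answer, reliability in zip(answers, reliabilities)
    ]
    return reduce(dempster, masses)


# Hypothetical example: two weakly reliable contributors against one reliable one.
frame = frozenset({"cat", "dog"})
answers = ["dog", "dog", "cat"]
reliabilities = [0.3, 0.3, 0.9]  # assumed values standing in for estimated profiles
fused = aggregate(answers, reliabilities, frame)
```

With these assumed reliabilities, `majority_vote(answers)` returns `"dog"`, whereas the fused mass function gives more support to `{"cat"}` than to `{"dog"}`, because the two low-reliability answers are largely transferred to the whole frame before combination.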

Modélisation de l'incertitude et de l'imprécision de données de crowdsourcing : MONITOR (Modeling the uncertainty and imprecision of crowdsourcing data: MONITOR)

Feb 26, 2020

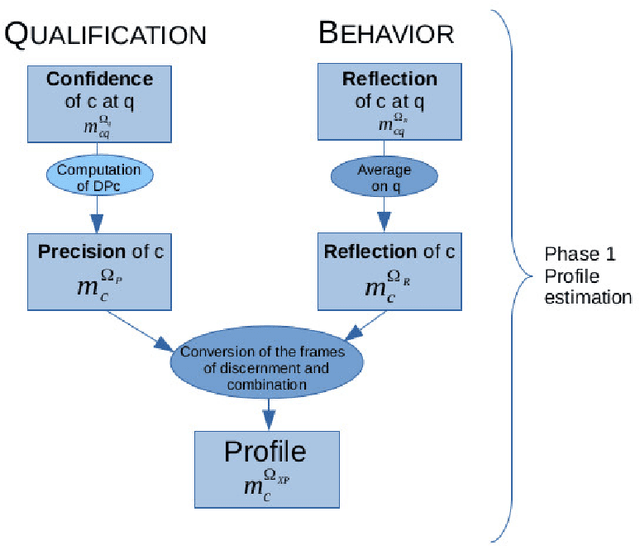

Abstract: Crowdsourcing is defined as the outsourcing of tasks to a crowd of contributors. The crowd on these platforms is very diverse and includes malicious contributors who are attracted by the remuneration and do not perform the tasks conscientiously. It is essential to identify these contributors so that their responses can be disregarded. As not all contributors have the same aptitude for a task, it seems appropriate to weight their answers according to their qualifications. This paper, published at the ICTAI 2019 conference, proposes a method, MONITOR, for estimating the profile of the contributor and aggregating the responses using belief function theory.

Contributors profile modelization in crowdsourcing platforms

Nov 19, 2018

Abstract: Crowdsourcing consists in outsourcing tasks to a crowd of people who are paid to carry them out. The crowd, usually diverse, can include users who lack the qualification and/or motivation for the tasks. In this paper, we introduce a new method for modeling user expertise on crowdsourcing platforms, based on the theory of belief functions, in order to identify serious and qualified users.

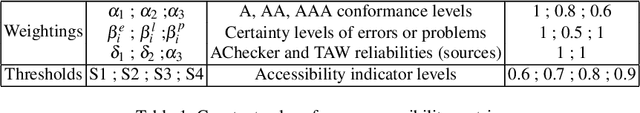

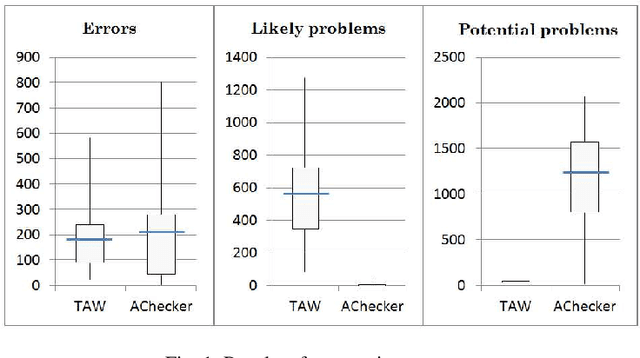

Designing a Belief Function-Based Accessibility Indicator to Improve Web Browsing for Disabled People

Jan 20, 2015

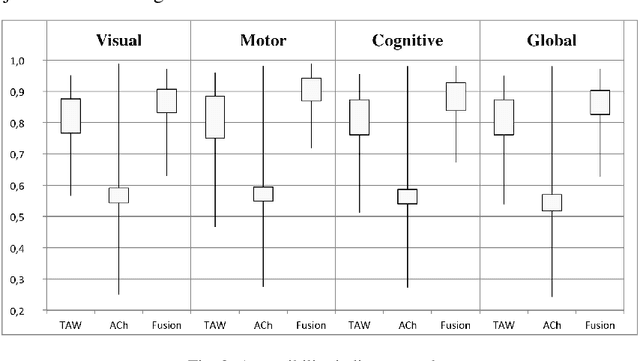

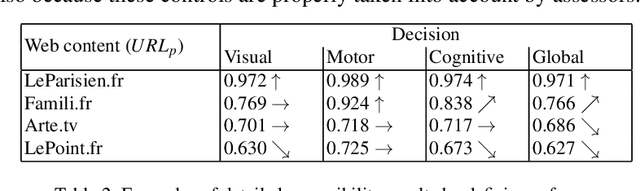

Abstract: The purpose of this study is to provide an accessibility measure for web pages, in order to direct disabled users to the pages that have been designed to be accessible to them. Our approach is based on the theory of belief functions and uses data supplied by reports from automatic web content assessors, which test the validity of criteria defined by the WCAG 2.0 guidelines proposed by the World Wide Web Consortium (W3C). These tools detect errors with gradual degrees of certainty, and their results do not always converge. For these reasons, to fuse the information coming from the reports, we choose an information fusion framework that can take into account the uncertainty and imprecision of information as well as divergences between sources. Our accessibility indicator covers four categories of deficiencies. To validate the theoretical approach in this context, we propose an evaluation carried out on a corpus of 100 of the most visited French news websites, using 2 evaluation tools. The results obtained illustrate the interest of our accessibility indicator.
