Jayden Hong

Using Implicit Behavior Cloning and Dynamic Movement Primitive to Facilitate Reinforcement Learning for Robot Motion Planning

Jul 29, 2023

Abstract: Reinforcement learning (RL) for motion planning of multi-degree-of-freedom robots still suffers from low efficiency, namely slow training and poor generalizability. In this paper, we propose a novel RL-based robot motion planning framework that uses implicit behavior cloning (IBC) and dynamic movement primitives (DMP) to improve the training speed and generalizability of an off-policy RL agent. IBC uses human demonstration data to accelerate RL training, and DMP serves as a heuristic model that transfers motion planning into a simpler planning space. To support this, we also create a human demonstration dataset from a pick-and-place experiment that can be used for similar studies. Comparison studies in simulation show that the proposed method trains faster and achieves higher scores than conventional RL agents. A real-robot experiment indicates the applicability of the proposed method to a simple assembly task. Our work provides a novel perspective on using motion primitives and human demonstration to improve the performance of RL for robot applications.

Human-Robot Skill Transfer with Enhanced Compliance via Dynamic Movement Primitives

Apr 12, 2023

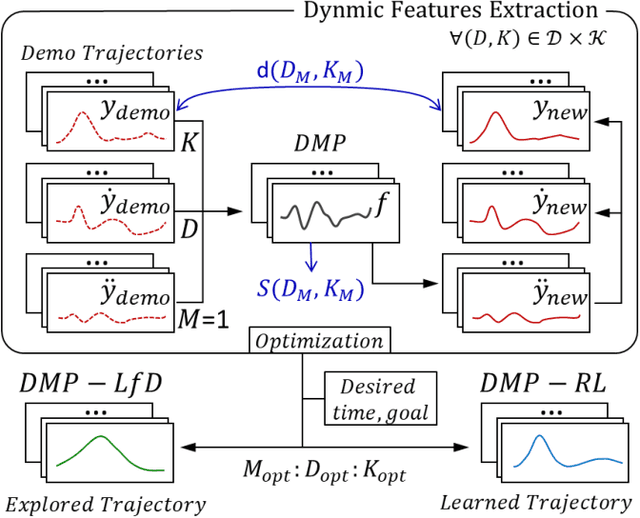

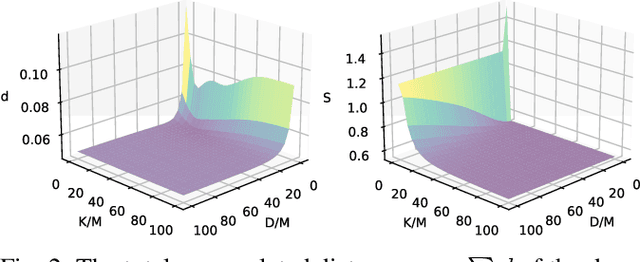

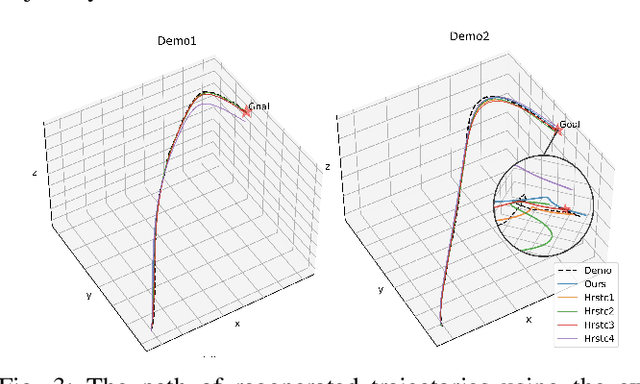

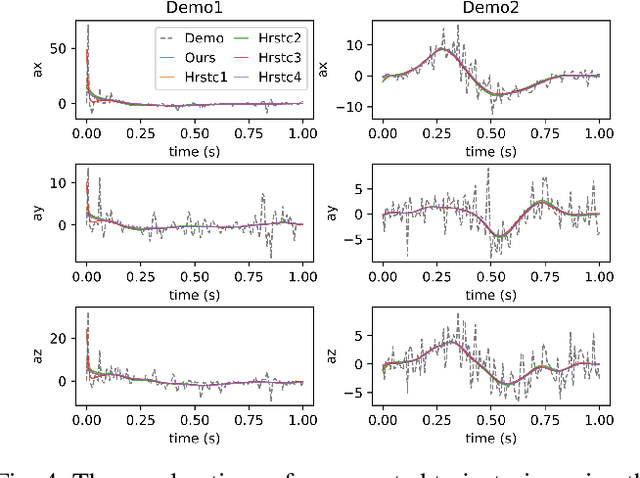

Abstract: Finding an efficient way to adapt robot trajectories is a priority for improving the overall performance of robots. One approach to trajectory planning is to transfer human-like skills to robots through Learning from Demonstrations (LfD), in which the human demonstration is treated as the target motion to mimic. However, human motion is typically optimal for the human embodiment but not for robots, because human biomechanics differ from robot dynamics. The Dynamic Movement Primitives (DMP) framework is a viable solution to this limitation of LfD, but it requires tuning the second-order dynamics in its formulation. Our contribution is a systematic method that extracts the dynamic features from human demonstrations to auto-tune the parameters of the DMP framework. In addition to its use with LfD, the proposed method can readily be used in conjunction with Reinforcement Learning (RL) for robot training. In this way, the extracted features facilitate the transfer of human skills by allowing the robot to explore the possible trajectories more efficiently and by significantly increasing robot compliance. We introduce a methodology that extracts the dynamic features from multiple trajectories by optimizing human-likeness and similarity in the parametric space. Our method was implemented on an actual human-robot setup to extract human dynamic features and was used to regenerate the robot trajectories following both LfD and RL with DMP. It resulted in stable robot performance and maintained a high degree of human-likeness, with an accumulated distance error as good as that of the best heuristic tuning.
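
To make the "second-order dynamics" mentioned in the abstract concrete, here is a minimal sketch of a one-dimensional discrete DMP rollout. It assumes the commonly used spring-damper formulation with a phase-driven forcing term; it is not the formulation or the tuning method of the paper. The function name `rollout_dmp`, the default gains, and the basis-width heuristic are illustrative assumptions. The gains `alpha_z` and `beta_z` are the kind of parameters that would normally be hand-tuned.

```python
import numpy as np


def rollout_dmp(y0, goal, weights, alpha_z=25.0, beta_z=None,
                alpha_x=3.0, tau=1.0, dt=0.001, duration=2.0):
    """Integrate a 1-D discrete DMP with explicit Euler steps.

    Assumed (textbook-style) dynamics, not taken from the paper:
        tau * dz/dt = alpha_z * (beta_z * (goal - y) - z) + f(x)
        tau * dy/dt = z
        tau * dx/dt = -alpha_x * x
    """
    if beta_z is None:
        beta_z = alpha_z / 4.0  # critical damping of the spring-damper

    weights = np.asarray(weights, dtype=float)
    n_basis = weights.size
    # Basis centers spread along the exponentially decaying phase x.
    centers = np.exp(-alpha_x * np.linspace(0.0, 1.0, n_basis))
    # Heuristic widths (an assumption): narrower where centers are denser.
    widths = n_basis ** 1.5 / centers / alpha_x

    n_steps = int(round(duration / dt))
    y, z, x = float(y0), 0.0, 1.0
    trajectory = np.empty(n_steps + 1)
    trajectory[0] = y

    for k in range(n_steps):
        psi = np.exp(-widths * (x - centers) ** 2)
        # Forcing term: normalized weighted basis, gated by the phase x
        # so that it vanishes as the movement ends.
        f = (psi @ weights) / (psi.sum() + 1e-10) * x * (goal - y0)
        dz = (alpha_z * (beta_z * (goal - y) - z) + f) / tau
        dy = z / tau
        dx = -alpha_x * x / tau
        z += dz * dt
        y += dy * dt
        x += dx * dt
        trajectory[k + 1] = y

    return trajectory


# Example: zero weights give a plain critically damped reach to the goal.
plain = rollout_dmp(y0=0.0, goal=1.0, weights=np.zeros(10))
# Nonzero weights reshape the path but the endpoint still approaches the goal.
shaped = rollout_dmp(y0=0.0, goal=1.0, weights=[50.0, -30.0, 20.0])
```

In this sketch the weights shape the path while `alpha_z` and `beta_z` set how stiffly the system is pulled toward the goal, which is why the choice of those gains affects both tracking and compliance.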
