Jaume Anguera Peris

Restless Multi-Process Multi-Armed Bandits with Applications to Self-Driving Microscopies

Dec 16, 2025

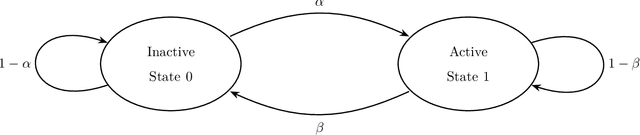

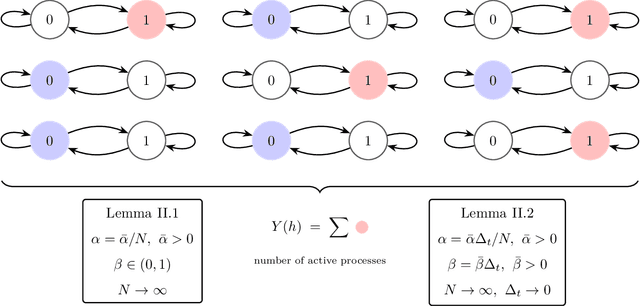

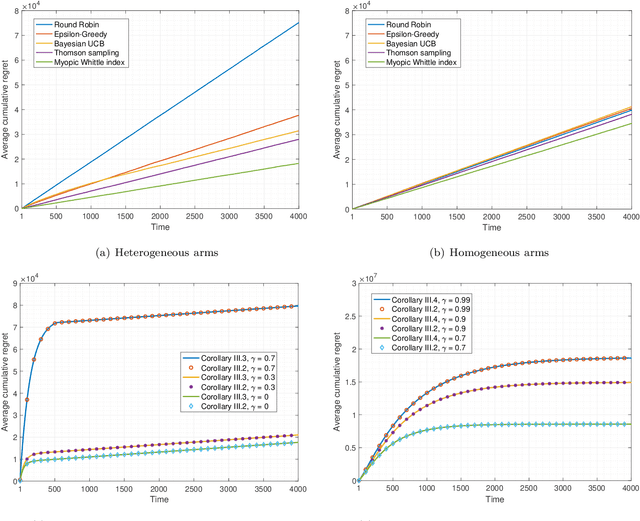

Abstract: High-content screening microscopy generates large amounts of live-cell imaging data, yet its potential remains constrained by the inability to determine when and where to image most effectively. Optimally balancing acquisition time, computational capacity, and photobleaching budgets across thousands of dynamically evolving regions of interest remains an open challenge, further complicated by limited field-of-view adjustments and sensor sensitivity. Existing approaches rely either on static sampling or on heuristics that neglect the dynamic evolution of biological processes, leading to inefficiencies and missed events. Here, we introduce the restless multi-process multi-armed bandit (RMPMAB), a new decision-theoretic framework in which each experimental region is modeled not as a single process but as an ensemble of Markov chains, thereby capturing the inherent heterogeneity of biological systems such as asynchronous cell cycles and heterogeneous drug responses. Building upon this foundation, we derive closed-form expressions for the transient and asymptotic behaviors of aggregated processes, and design scalable Whittle index policies with sub-linear complexity in the number of imaging regions. Through both simulations and a real biological live-cell imaging dataset, we show that our approach achieves substantial improvements in throughput under resource constraints. Notably, our algorithm outperforms Thompson Sampling, Bayesian UCB, epsilon-Greedy, and Round Robin by reducing cumulative regret by more than 37% in simulations and capturing 93% more biologically relevant events in live imaging experiments, underscoring its potential for transformative smart microscopy. Beyond improving experimental efficiency, the RMPMAB framework unifies stochastic decision theory with optimal autonomous microscopy control, offering a principled approach to accelerate discovery across multidisciplinary sciences.
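To make the idea of a region modeled as an ensemble of Markov chains more concrete, the following is a minimal sketch, not the paper's model. It assumes each chain has only two states (0 = inactive, 1 = active) with hypothetical transition probabilities `p` (0 to 1) and `q` (1 to 0), and uses the standard closed form for a two-state chain, P(X_t = 1) = π + (x0 − π)(1 − p − q)^t with π = p / (p + q). The function names and the example parameters are illustrative assumptions.

```python
import random

def transient_prob(p, q, x0, t):
    """P(X_t = 1) for a two-state chain with P(0->1) = p, P(1->0) = q,
    started with P(X_0 = 1) = x0 (standard textbook closed form)."""
    pi = p / (p + q)  # stationary probability of state 1
    return pi + (x0 - pi) * (1.0 - p - q) ** t

def ensemble_mean(chains, t):
    """Expected fraction of chains in state 1 at time t.
    `chains` is a list of (p, q, x0) tuples, one per chain (assumed independent)."""
    return sum(transient_prob(p, q, x0, t) for p, q, x0 in chains) / len(chains)

def simulate_fraction(chains, t, runs=2000, seed=0):
    """Monte Carlo estimate of the same quantity, used only as a sanity check."""
    rng = random.Random(seed)
    total = 0
    for _ in range(runs):
        for p, q, x0 in chains:
            state = 1 if rng.random() < x0 else 0
            for _ in range(t):
                if state == 0:
                    state = 1 if rng.random() < p else 0
                else:
                    state = 0 if rng.random() < q else 1
            total += state
    return total / (runs * len(chains))

# Hypothetical heterogeneous ensemble: three chains with different dynamics.
chains = [(0.1, 0.3, 0.0), (0.4, 0.2, 0.0), (0.05, 0.05, 1.0)]
closed_form = ensemble_mean(chains, t=5)
estimate = simulate_fraction(chains, t=5)
```

Under these assumptions, `closed_form` and `estimate` should agree up to Monte Carlo noise, and `ensemble_mean` approaches the average of the per-chain stationary probabilities as `t` grows. An index policy would then rank regions by a quantity derived from such aggregated dynamics; that step is not shown here.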

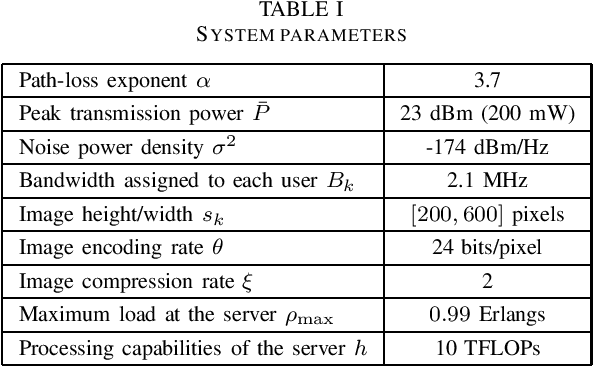

Resource Dimensioning for Single-Cell Edge Video Analytics

May 09, 2023

Abstract: Edge intelligence is an emerging technology where the base stations located at the edge of the network are equipped with computing units that provide machine learning services to the end users. To provide high-quality services in a cost-efficient way, the wireless and computing resources need to be dimensioned carefully. In this paper, we address the problem of resource dimensioning in a single-cell system that supports edge video analytics under latency and accuracy constraints. We show that the resource-dimensioning problem can be transformed into a convex optimization problem, and we provide numerical results that give insights into the trade-offs between the wireless and computing resources for varying cell sizes and for varying intensity of incoming tasks. Overall, we observe that the wireless and computing resources exhibit opposite trends: the wireless resources benefit from smaller cells, where high attenuation losses are avoided, and the computing resources benefit from larger cells, where statistical multiplexing allows for computing more tasks. We also show that small cells with low loads have high per-request costs, even when the wireless resources are increased to compensate for the low multiplexing gain at the servers.

Blind Asynchronous Over-the-Air Federated Edge Learning

Oct 31, 2022

Abstract: Federated Edge Learning (FEEL) is a distributed machine learning technique in which each device contributes to training a global inference model by independently performing local computations on its own data. More recently, FEEL has been merged with over-the-air computation (OAC), where the global model is calculated over the air by leveraging the superposition of analog signals. However, implementing FEEL with OAC raises the challenge of how to precode the analog signals to overcome any time misalignment at the receiver. In this work, we propose a novel synchronization-free method to recover the parameters of the global model over the air without requiring any prior information about the time misalignments. To that end, we formulate a norm-minimization problem and recover the global model directly by solving a convex semi-definite program. The performance of the proposed method is evaluated in terms of accuracy and convergence via numerical experiments. We show that our proposed algorithm performs within $10\%$ of the ideal synchronized scenario and $4\times$ better than the simple case where no recovery method is used.
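The role of time alignment in over-the-air aggregation can be illustrated with a toy example. The sketch below is not the paper's recovery method: it assumes a noiseless channel, real-valued samples, and misalignments that are whole-sample delays, and it only shows that the superposed signal equals the model average when devices are aligned and is distorted when they are not. All names (`superpose`, `average_from_received`, `local_models`) are hypothetical.

```python
def superpose(signals, delays):
    """Sample-wise sum of the signals after delaying each one by an
    integer number of samples (zero-padded), mimicking analog superposition."""
    length = max(len(s) + d for s, d in zip(signals, delays))
    received = [0.0] * length
    for s, d in zip(signals, delays):
        for i, value in enumerate(s):
            received[i + d] += value
    return received

def average_from_received(received, num_devices, model_size):
    """Naive server-side estimate: scale the first model_size samples by 1/K."""
    return [value / num_devices for value in received[:model_size]]

# Three devices, each holding a hypothetical 3-parameter local model.
local_models = [[1.0, 2.0, 3.0], [3.0, 2.0, 1.0], [2.0, 2.0, 2.0]]

# Perfect alignment: the channel itself computes the sum of the models.
aligned = average_from_received(superpose(local_models, [0, 0, 0]), 3, 3)

# The second device arrives one sample late: the naive estimate is distorted.
misaligned = average_from_received(superpose(local_models, [0, 1, 0]), 3, 3)
```

Here `aligned` equals the true average `[2.0, 2.0, 2.0]`, while `misaligned` does not, which is the gap a synchronization-free recovery method aims to close.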

Modelling multi-cell edge video analytics

Feb 16, 2022

Abstract: Edge intelligence is a scalable solution for analyzing distributed data, but it cannot provide reliable services in large-scale cellular networks unless the inherent aspects of fading and interference are also taken into consideration. In this paper, we present the first mathematical framework for modelling edge video analytics in multi-cell cellular systems. We derive the expressions for the coverage probability, the ergodic capacity, the probability of successfully completing the video analytics within a target delay requirement, and the effective frame rate. We also analyze the effect of the system parameters on the accuracy of the detection algorithm, the supported frame rate at the edge server, and the system fairness.
