Modelling multi-cell edge video analytics


Feb 16, 2022

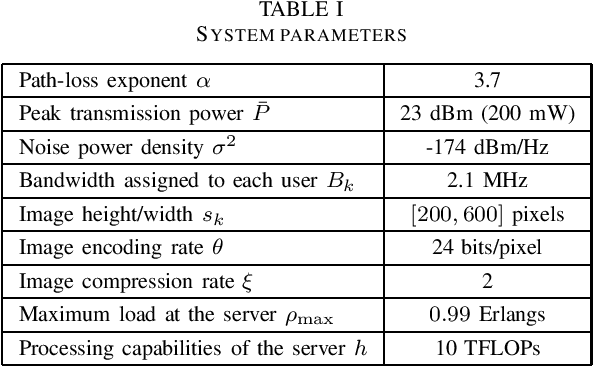

Edge intelligence is a scalable solution for analyzing distributed data, but it cannot provide reliable services in large-scale cellular networks unless the inherent effects of fading and interference are also taken into account. In this paper, we present the first mathematical framework for modelling edge video analytics in multi-cell cellular systems. We derive expressions for the coverage probability, the ergodic capacity, the probability of successfully completing the video analytics within a target delay requirement, and the effective frame rate. We also analyze the effect of the system parameters on the accuracy of the detection algorithm, the supported frame rate at the edge server, and the system fairness.
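The abstract names the coverage probability, the ergodic capacity and the probability of meeting a delay target, but does not spell out the network model. One common way to build intuition for such quantities is a Monte Carlo simulation of a stochastic-geometry network. The sketch that follows is only that, under assumptions that are ours and not necessarily the paper's: nodes form a homogeneous Poisson point process (PPP) in a disk, the typical node at the origin is served by its nearest node while all others interfere, fading is Rayleigh, and all transmit powers are equal. The delay model (a frame of `frame_bits` bits uploaded at the Shannon rate, plus a fixed processing time `compute_s`, compared against `delay_target_s`) and every parameter value are hypothetical, as are the helper names `ppp_distances`, `simulate` and `coverage_closed_form_alpha4`. The paper's actual model (for example uplink transmission or power control) may differ.

```python
import math
import random


def ppp_distances(density, radius, rng):
    """Sorted distances from the origin to a homogeneous PPP in a disk.

    The values density * pi * r_k**2 of the k-th nearest point form a
    unit-rate 1-D Poisson process, so summing Exp(1) gaps yields the
    distances in increasing order.
    """
    limit = density * math.pi * radius ** 2
    dists = []
    t = rng.expovariate(1.0)
    while t <= limit:
        dists.append(math.sqrt(t / (density * math.pi)))
        t += rng.expovariate(1.0)
    return dists


def simulate(density=1e-5, radius=3000.0, alpha=4.0, sinr_threshold_db=0.0,
             noise=0.0, bandwidth_hz=1e6, frame_bits=2e5, compute_s=0.02,
             delay_target_s=0.1, trials=2000, seed=1):
    """Monte Carlo estimates for the typical node at the origin.

    All parameters are illustrative assumptions, not values from the paper.
    noise=0.0 gives the interference-limited case.
    """
    rng = random.Random(seed)
    threshold = 10 ** (sinr_threshold_db / 10)
    slack = delay_target_s - compute_s  # time budget left for the upload
    covered = on_time = 0
    capacity_sum = 0.0
    for _ in range(trials):
        dists = ppp_distances(density, radius, rng)
        if not dists:
            continue  # no node to associate with: outage, rate 0
        # Rayleigh fading: unit-mean exponential power gains times path loss.
        rx = [rng.expovariate(1.0) * d ** (-alpha) for d in dists]
        signal, interference = rx[0], sum(rx[1:])  # nearest node serves
        denom = noise + interference
        sinr = signal / denom if denom > 0 else math.inf
        if sinr > threshold:
            covered += 1
        spectral_eff = math.log2(1 + sinr)  # Shannon bound, bits/s/Hz
        capacity_sum += spectral_eff
        # Hypothetical delay model: upload time plus fixed compute time.
        rate = bandwidth_hz * spectral_eff
        if slack > 0 and rate > 0 and frame_bits / rate <= slack:
            on_time += 1
    return {
        "coverage": covered / trials,
        "ergodic_capacity": capacity_sum / trials,
        "p_within_delay": on_time / trials,
    }


def coverage_closed_form_alpha4(sinr_threshold_db):
    """Known noise-free PPP/Rayleigh coverage for alpha = 4 (infinite plane)."""
    t = 10 ** (sinr_threshold_db / 10)
    rho = math.sqrt(t) * (math.pi / 2 - math.atan(1 / math.sqrt(t)))
    return 1 / (1 + rho)


if __name__ == "__main__":
    print(simulate())
    print("closed form, 0 dB:", coverage_closed_form_alpha4(0.0))
```

For this particular setup (noise-free, Rayleigh fading, path-loss exponent 4) a closed form is known, so the simulated coverage at 0 dB should land close to `coverage_closed_form_alpha4(0.0)`, roughly 0.56. Under the hypothetical delay model, meeting the deadline is equivalent to exceeding the SINR threshold 2^(frame_bits / (bandwidth_hz * (delay_target_s - compute_s))) - 1, so the delay-success probability is itself a coverage probability at a stricter threshold.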
