Jatan Loya

SAGE-RT: Synthetic Alignment data Generation for Safety Evaluation and Red Teaming

Aug 14, 2024

Abstract: We introduce Synthetic Alignment data Generation for Safety Evaluation and Red Teaming (SAGE-RT or SAGE), a novel pipeline for generating synthetic alignment and red-teaming data. Existing methods fall short in creating nuanced and diverse datasets and in providing the necessary control over the data generation and validation processes, or they require large amounts of manually generated seed data. SAGE addresses these limitations by using a detailed taxonomy to produce safety-alignment and red-teaming data across a wide range of topics. We generated 51,000 diverse and in-depth prompt-response pairs, encompassing over 1,500 topics of harmfulness and covering variations of the most frequent types of jailbreaking prompts faced by large language models (LLMs). We show that the red-teaming data generated through SAGE jailbreaks state-of-the-art LLMs in more than 27 out of 32 sub-categories, and in more than 58 out of 279 leaf-categories (sub-sub-categories). The attack success rate for GPT-4o and GPT-3.5-turbo is 100% over the sub-categories of harmfulness. Our approach avoids the pitfalls of synthetic safety-training data generation, such as mode collapse and lack of nuance in the generation pipeline, by ensuring detailed coverage of harmful topics through iterative expansion of the topics and by conditioning the outputs on the generated raw text. This method can be used to generate red-teaming and alignment data for LLM safety completely synthetically, either to make LLMs safer or to red-team the models over a diverse range of topics.
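The abstract reports category-level figures but does not define the metric. One plausible reading, and it is only an assumption, is that a sub-category counts as jailbroken when at least one prompt in it elicits a harmful response. The sketch below computes that coverage-style rate; the function name `category_attack_success_rate` and the `(sub_category, jailbroken)` record shape are hypothetical and not taken from the paper.

```python
from collections import defaultdict


def category_attack_success_rate(results):
    """Fraction of sub-categories with at least one successful jailbreak.

    `results` is an iterable of (sub_category, jailbroken) pairs, one per
    red-teaming prompt. This definition is an assumption, not the paper's.
    """
    broken = defaultdict(bool)
    for category, jailbroken in results:
        # A category stays "broken" once any prompt in it succeeds.
        broken[category] = broken[category] or bool(jailbroken)
    if not broken:
        return 0.0, set()
    hit = {category for category, ok in broken.items() if ok}
    return len(hit) / len(broken), hit


# Illustrative, made-up records: two prompts in one category, one in another.
sample = [("category_a", False), ("category_a", True), ("category_b", False)]
rate, jailbroken_categories = category_attack_success_rate(sample)
```

Under this reading, a rate of 100% means every sub-category contained at least one successful attack, not that every individual prompt succeeded.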

ViT-Inception-GAN for Image Colourising

Jun 11, 2021

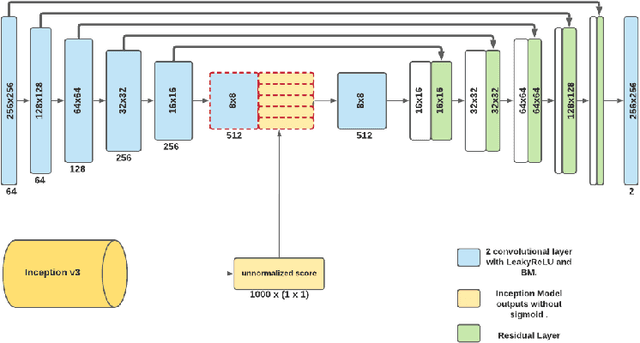

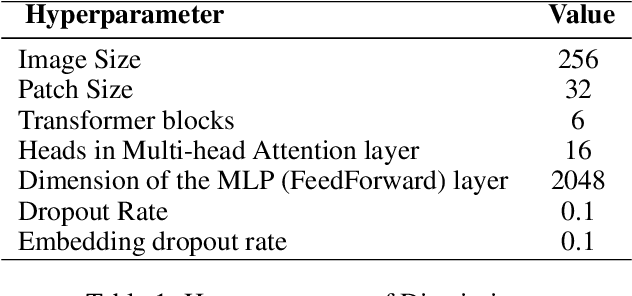

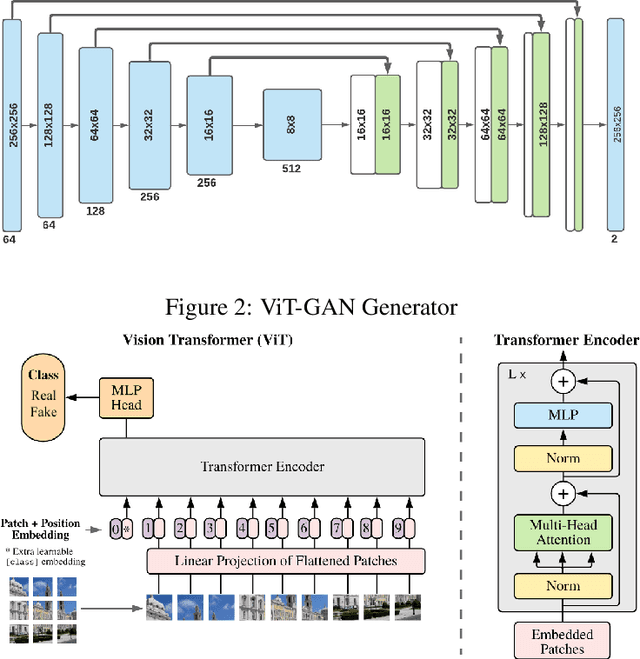

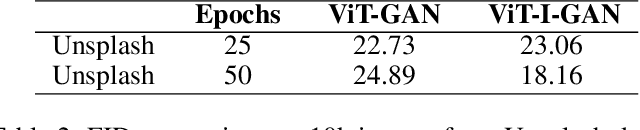

Abstract: Studies involving colourising images have been garnering researchers' keen attention over time, assisted by significant advances in various Machine Learning techniques and compute power availability. Traditionally, colourising images has been an intricate task that allows a substantial degree of freedom in the assignment of chromatic information. In our proposed method, we attempt to colourise images using a Vision Transformer - Inception - Generative Adversarial Network (ViT-I-GAN), which has an Inception-v3 fusion embedding in the generator. For a stable and robust network, we have used a Vision Transformer (ViT) as the discriminator. We trained the model on the Unsplash and COCO datasets to demonstrate the improvement made by the Inception-v3 embedding. We have compared the results of ViT-GANs with and without the Inception-v3 embedding.
