Jasper Brown

Manipulating UAV Imagery for Satellite Model Training, Calibration and Testing

Mar 22, 2022

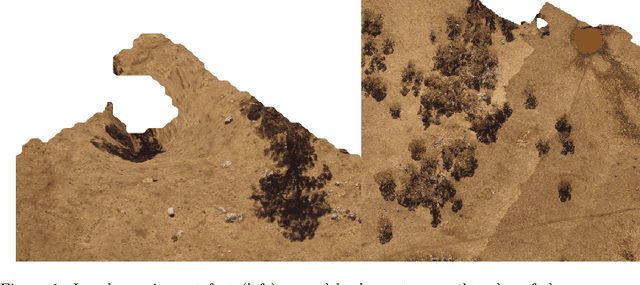

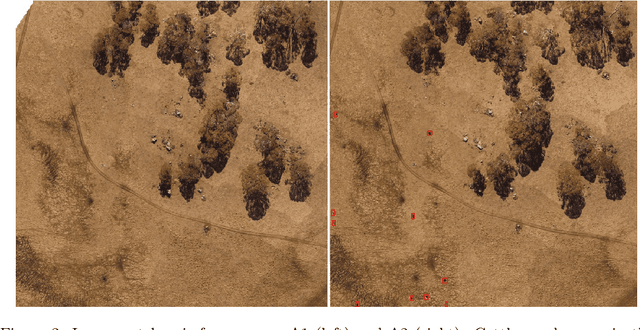

Abstract: Modern livestock farming is increasingly data driven and frequently relies on efficient remote sensing to gather data over wide areas. High resolution satellite imagery is one such data source, which is becoming more accessible for farmers as coverage increases and cost falls. Such images can be used to detect and track animals, monitor pasture changes, and understand land use. Many of the data driven models being applied to these tasks require ground truthing at resolutions higher than satellites can provide. Simultaneously, there is a lack of available aerial imagery focused on farmland changes that occur over days or weeks, such as herd movement. To address these gaps, we present a new multi-temporal dataset of high resolution UAV imagery which is artificially degraded to match satellite data quality. An empirical blurring metric is used to calibrate the degradation process against actual satellite imagery of the area. UAV surveys were flown repeatedly over several weeks for specific farm locations. This 5 cm/pixel data is of sufficiently high resolution to accurately ground truth cattle locations and other factors such as grass cover. From 33 wide area UAV surveys, 1869 patches were extracted and artificially degraded using an accurate satellite optical model to simulate satellite data. Geographic patches from multiple time periods are aligned and presented as sets, providing a multi-temporal dataset that can be used for detecting changes on farms. The geo-referenced images and 27,853 manually annotated cattle labels are made publicly available.
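
The calibration idea in the abstract above (tune the degradation until a blur metric on the degraded UAV data matches the same metric on real satellite data) can be sketched roughly as follows. This is only an illustration under stated assumptions: the abstract does not say which metric or optical model was used, so the variance-of-Laplacian metric, the Gaussian blur, and the names `sharpness`, `gaussian_blur` and `calibrate_sigma` are all hypothetical stand-ins. Both patches are assumed to be grayscale arrays already resampled to the same resolution.

```python
import numpy as np

def sharpness(image):
    """Stand-in blur metric (an assumption): variance of a 4-neighbour Laplacian."""
    lap = (-4.0 * image
           + np.roll(image, 1, 0) + np.roll(image, -1, 0)
           + np.roll(image, 1, 1) + np.roll(image, -1, 1))
    return lap.var()

def gaussian_blur(image, sigma):
    """Circular Gaussian blur applied in the frequency domain (stand-in optical model)."""
    if sigma <= 0:
        return image.astype(float)
    fy = np.fft.fftfreq(image.shape[0])[:, None]
    fx = np.fft.fftfreq(image.shape[1])[None, :]
    # Fourier transform of a Gaussian with standard deviation sigma (in pixels).
    transfer = np.exp(-2.0 * (np.pi * sigma) ** 2 * (fx ** 2 + fy ** 2))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * transfer))

def calibrate_sigma(uav_patch, satellite_patch, sigmas):
    """Return the candidate sigma whose blurred UAV patch best matches the satellite sharpness."""
    target = sharpness(satellite_patch)
    errors = [abs(sharpness(gaussian_blur(uav_patch, s)) - target) for s in sigmas]
    return sigmas[int(np.argmin(errors))]

# Synthetic check: a "satellite" patch made by blurring the UAV patch with sigma = 2.
rng = np.random.default_rng(0)
uav = rng.random((64, 64))
satellite = gaussian_blur(uav, 2.0)
best = calibrate_sigma(uav, satellite, [0.5, 1.0, 2.0, 4.0])
```

A grid search is used here for simplicity; any one-dimensional optimiser would serve, since the metric falls monotonically as the blur grows.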

Automated Aerial Animal Detection When Spatial Resolution Conditions Are Varied

Oct 04, 2021

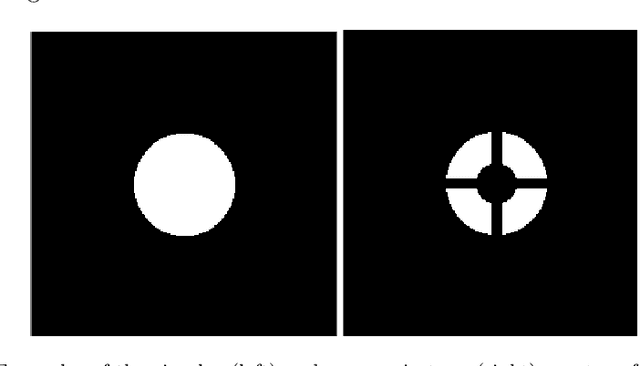

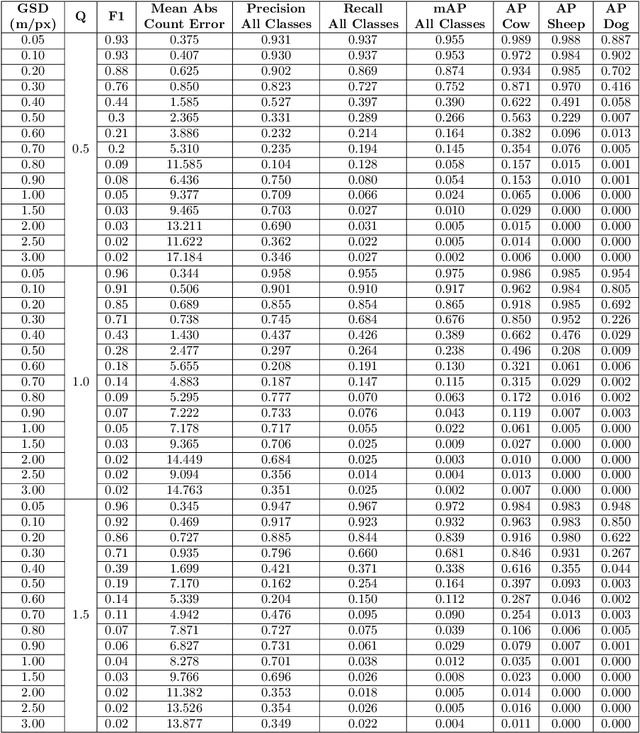

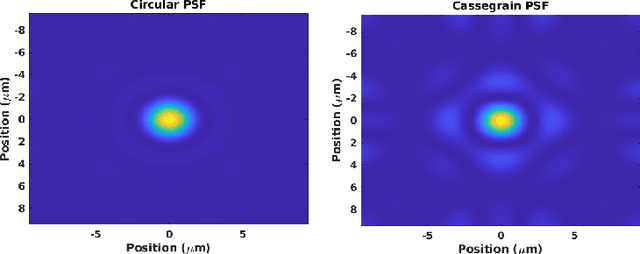

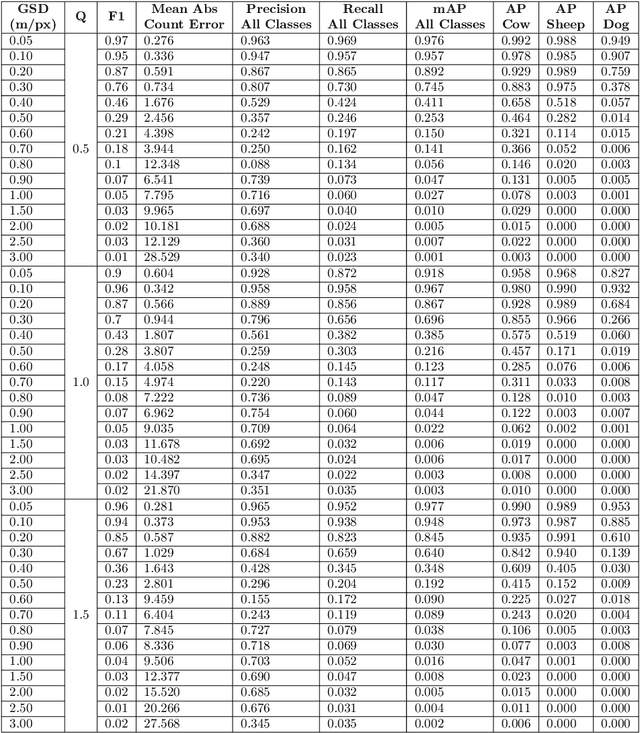

Abstract: Knowing where livestock are located enables optimized management and mustering. However, Australian farms are large, meaning that many of Australia's livestock are unmonitored, which impacts farm profit, animal welfare and the environment. Effective animal localisation and counting by analysing satellite imagery overcomes this management hurdle; however, high resolution satellite imagery is expensive. Thus, to minimise cost, the lowest spatial resolution data that enables accurate livestock detection should be selected. In our work, we determine the association between object detector performance and spatial degradation for cattle, sheep and dogs. Accurate ground truth was established using high resolution drone images which were then downsampled to various ground sample distances (GSDs). Both circular and Cassegrain aperture optics were simulated to generate point spread functions (PSFs) corresponding to various optical qualities. By simulating the PSF, rather than approximating it as a Gaussian, the images were accurately degraded to match the spatial resolution and blurring structure of satellite imagery. Two existing datasets were combined and used to train and test a YOLOv5 object detection network. Detector performance was found to drop steeply around a GSD of 0.5 m/px and was associated with PSF matrix structure within this GSD region. Detector mAP performance fell by 52 percent when a Cassegrain, rather than circular, aperture was used at a 0.5 m/px GSD. Overall blurring magnitude also had a small impact when matched to GSD, as did the internal network resolution. Our results here inform the selection of remote sensing data requirements for animal detection tasks, allowing farmers and ecologists to use more accessible medium resolution imagery with confidence.
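
To make the aperture-based degradation described above more concrete, here is a minimal sketch, not the authors' implementation. It assumes a binary pupil, monochromatic incoherent imaging (so the PSF is the squared magnitude of the pupil's Fourier transform), and a Cassegrain aperture modelled as a disc with a central obstruction. The function names, the pupil radius, the 0.35 obstruction ratio and the 0.05 m/px native GSD are illustrative assumptions, not values from the abstract.

```python
import numpy as np

def aperture_mask(n, outer_radius, obstruction_ratio=0.0):
    """Binary pupil on an n x n grid: a disc, optionally with a central obstruction."""
    y, x = np.indices((n, n)) - n // 2
    r = np.hypot(x, y)
    return ((r <= outer_radius) & (r >= obstruction_ratio * outer_radius)).astype(float)

def psf_from_aperture(pupil):
    """Incoherent PSF as |FFT(pupil)|^2, centred and normalised to unit sum."""
    field = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(pupil)))
    psf = np.abs(field) ** 2
    return psf / psf.sum()

def degrade(image, psf, factor):
    """Blur with the PSF (circular FFT convolution), then block-average by `factor`.

    The PSF must have the same shape as the image.
    """
    kernel = np.fft.ifftshift(psf)  # move the PSF centre to index [0, 0]
    blurred = np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(kernel)))
    h, w = (s - s % factor for s in blurred.shape)  # crop to a multiple of factor
    blocks = blurred[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))

# Assumed GSDs: 0.05 m/px native drone imagery degraded to 0.5 m/px.
native_gsd, target_gsd = 0.05, 0.5
factor = round(target_gsd / native_gsd)

circular_psf = psf_from_aperture(aperture_mask(64, 8))
cassegrain_psf = psf_from_aperture(aperture_mask(64, 8, obstruction_ratio=0.35))

rng = np.random.default_rng(0)
drone_patch = rng.random((64, 64))  # placeholder for a grayscale drone image patch
low_res_circular = degrade(drone_patch, circular_psf, factor)
low_res_cassegrain = degrade(drone_patch, cassegrain_psf, factor)
```

With the same outer radius, the obstructed pupil pushes more energy out of the central peak and into the surrounding rings, which is the kind of structural difference a Gaussian approximation cannot represent.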

Dataset and Performance Comparison of Deep Learning Architectures for Plum Detection and Robotic Harvesting

May 09, 2021

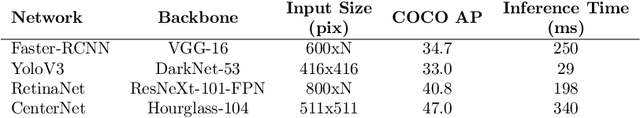

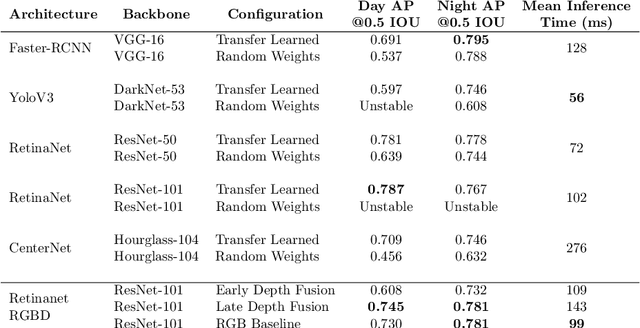

Abstract: Many automated operations in agriculture, such as weeding and plant counting, require robust and accurate object detectors. Robotic fruit harvesting is one of these, and is an important technology to address the increasing labour shortages and uncertainty suffered by tree crop growers. An eye-in-hand sensing setup is commonly used in harvesting systems and provides benefits to sensing accuracy and flexibility. However, as the hand and camera move from viewing the entire trellis to picking a specific fruit, large changes in lighting, colour, obscuration and exposure occur. Object detection algorithms used in harvesting should be robust to these challenges, but few datasets for assessing this currently exist. In this work, two new datasets are gathered during day and night operation of an actual robotic plum harvesting system. A range of current generation deep learning object detectors are benchmarked against these. Additionally, two methods for fusing depth and image information are tested for their impact on detector performance. Significant differences between the day and night accuracy of different detectors are found, transfer learning is identified as essential in all cases, and depth information fusion is assessed as only marginally effective. The dataset and benchmark models are made available online.

Design and Evaluation of a Modular Robotic Plum Harvesting System Utilising Soft Components

Jul 13, 2020

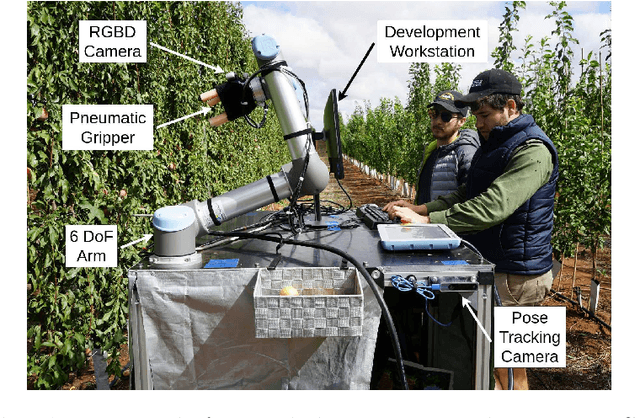

Abstract: The human labour required for tree crop harvesting is a major cost component in fruit production and is increasing. To address this, many existing research works have sought to demonstrate commercially viable robotic harvesting for tree crops, though successful commercial products resulting from these have been few and far between. Systems developed for specific crops such as sweet peppers or apples have shown promise, but the vast majority of cultivar types remain unaddressed and developing a specific system for each one is inefficient. In this work, a flexible and modular development platform is presented, which can be used to test specific design choices on different fruit and growing conditions. The system is evaluated in a commercial plum orchard, with no crop modifications. Some existing techniques are found to be counterproductive for plums, while soft robotics and persistent target tracking significantly improve performance. A harvest success rate of 42% was observed, with lower than expected effectiveness based on prior testing with apples. The plum type and growing style pose unique challenges which are examined in the context of system module design choices.
