Jason Tang

Analyzing Customer-Facing Vendor Experiences with Time Series Forecasting and Monte Carlo Techniques

Jul 30, 2024

Abstract: eBay partners with external vendors, which allows customers to freely select a vendor to complete their eBay experiences. However, vendor outages can hinder customer experiences. Consequently, eBay can disable a problematic vendor to prevent customer loss. Disabling the vendor too late risks losing customers willing to switch to other vendors, while disabling it too early risks losing those unwilling to switch. In this paper, we propose a data-driven solution to answer whether eBay should disable a problematic vendor and when to disable it. Our solution involves forecasting customer behavior. First, we use a multiplicative seasonality model to represent behavior if all vendors are fully functioning. Next, we use a Monte Carlo simulation to represent behavior if the problematic vendor remains enabled. Finally, we use a linear model to represent behavior if the vendor is disabled. By comparing these forecasts, we determine the optimal time for eBay to disable the problematic vendor.
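The three forecasts and the final comparison described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the paper's implementation. The function names, the hourly period, the per-customer failure and recovery probabilities, and the slope of the linear "disabled" model are all hypothetical. The seasonality model also omits any trend term.

```python
import random
from statistics import mean


def fit_multiplicative_seasonality(history, period):
    """Fit a level and one multiplicative factor per seasonal phase (no trend, by assumption)."""
    level = mean(history)
    factors = [mean(history[phase::period]) / level for phase in range(period)]
    return level, factors


def baseline_forecast(level, factors, start, horizon):
    """'All vendors healthy' forecast: level * factor[phase] for each future step."""
    period = len(factors)
    return [level * factors[(start + h) % period] for h in range(horizon)]


def simulate_enabled(baseline, vendor_share, failure_rates, recover_prob, runs=200, seed=0):
    """Monte Carlo estimate of completions per step if the failing vendor stays enabled.

    Hypothetical behavior model: each customer picks the problematic vendor with
    probability `vendor_share`, that attempt fails with probability `failure_rates[t]`,
    and a failed customer still completes (e.g. by retrying elsewhere) with
    probability `recover_prob`.
    """
    rng = random.Random(seed)
    totals = [0.0] * len(baseline)
    for _ in range(runs):
        for t, expected in enumerate(baseline):
            completed = 0
            for _ in range(round(expected)):
                failed = rng.random() < vendor_share and rng.random() < failure_rates[t]
                if not failed or rng.random() < recover_prob:
                    completed += 1
            totals[t] += completed
    return [total / runs for total in totals]


def disabled_forecast(baseline, vendor_share, switch_prob):
    """Linear 'vendor disabled' forecast: completions = slope * baseline.

    Hypothetical slope: the vendor's customers switch with probability
    `switch_prob` and are lost otherwise.
    """
    slope = 1.0 - vendor_share * (1.0 - switch_prob)
    return [slope * b for b in baseline]


def best_disable_step(enabled, disabled):
    """Return the step k (and total) that maximizes completions if disabled at k.

    k == len(enabled) means keeping the vendor enabled for the whole horizon.
    """
    horizon = len(enabled)
    best_step, best_total = horizon, sum(enabled)
    for k in range(horizon):
        total = sum(enabled[:k]) + sum(disabled[k:])
        if total > best_total:
            best_step, best_total = k, total
    return best_step, best_total


if __name__ == "__main__":
    period = 24
    # Hypothetical hourly history: one week with busier daytime hours.
    history = [150 if 9 <= h % period <= 20 else 100 for h in range(7 * period)]
    level, factors = fit_multiplicative_seasonality(history, period)
    baseline = baseline_forecast(level, factors, len(history), period)
    # Hypothetical outage that worsens over the next 24 hours.
    failure_rates = [min(1.0, 0.05 * h) for h in range(period)]
    enabled = simulate_enabled(baseline, 0.3, failure_rates, recover_prob=0.2)
    disabled = disabled_forecast(baseline, 0.3, switch_prob=0.6)
    step, total = best_disable_step(enabled, disabled)
    print(f"disable at hour {step}, expected completions {total:.0f}")
```

Because disabling at step k yields the enabled forecast before k and the disabled forecast from k onward, the best k is roughly the first step where the simulated "enabled" completions drop below the linear "disabled" forecast. With a constant failure rate the answer would collapse to "disable now" or "never", so the sketch uses a worsening outage to make the timing question meaningful.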

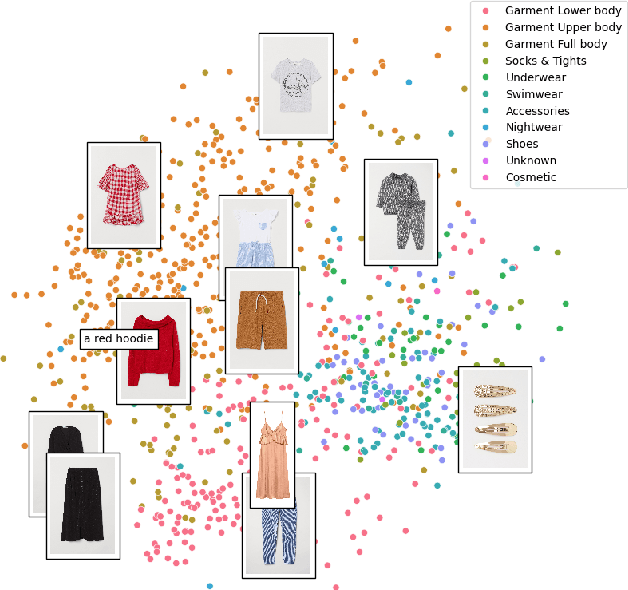

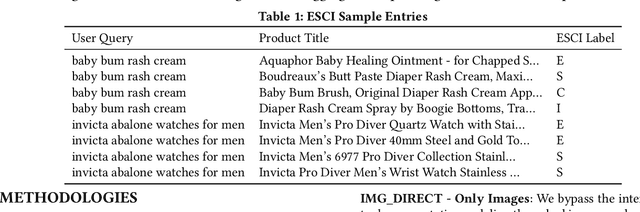

Shopping Queries Image Dataset (SQID): An Image-Enriched ESCI Dataset for Exploring Multimodal Learning in Product Search

May 24, 2024

Abstract: Recent advances in the fields of Information Retrieval and Machine Learning have focused on improving the performance of search engines to enhance the user experience, especially in the world of online shopping. The focus has thus been on leveraging cutting-edge learning techniques and relying on large enriched datasets. This paper introduces the Shopping Queries Image Dataset (SQID), an extension of the Amazon Shopping Queries Dataset enriched with image information associated with 190,000 products. By integrating visual information, SQID facilitates research around multimodal learning techniques that can take into account both textual and visual information for improving product search and ranking. We also provide experimental results leveraging SQID and pretrained models, showing the value of using multimodal data for search and ranking. SQID is available at: https://github.com/Crossing-Minds/shopping-queries-image-dataset.

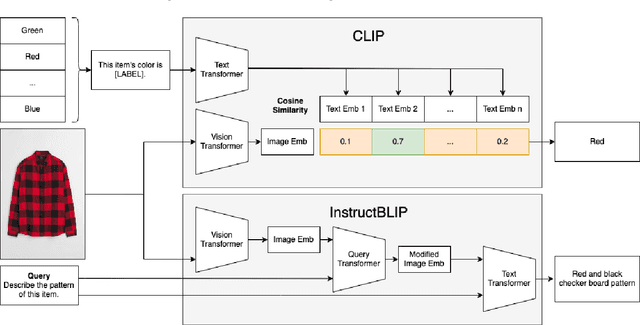

Captions Are Worth a Thousand Words: Enhancing Product Retrieval with Pretrained Image-to-Text Models

Feb 13, 2024

Abstract: This paper explores the use of multimodal image-to-text models to enhance text-based item retrieval. We propose using pretrained image captioning and tagging models, such as instructBLIP and CLIP, to generate text-based product descriptions, which are combined with existing text descriptions. Our work is particularly impactful for smaller eCommerce businesses that are unable to maintain the high-quality text descriptions necessary to effectively perform item retrieval for search and recommendation use cases. We evaluate the searchability of ground-truth text, image-generated text, and combinations of both texts on several subsets of Amazon's publicly available ESCI dataset. The results demonstrate the dual capability of our proposed models to enhance the retrieval of existing text and to generate highly searchable standalone descriptions.
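To illustrate why appending image-generated text to a weak product description can help retrieval, here is a minimal Python sketch. The product texts are invented, the captions are hard-coded stand-ins for what a captioning model such as instructBLIP might produce (no model is called), and TF-IDF cosine scoring is a swapped-in stand-in for whatever retrieval method the paper actually evaluates.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_tfidf(docs):
    """Return one sparse TF-IDF vector (dict) per document, plus the IDF table."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log((1 + n) / (1 + count)) + 1.0 for term, count in df.items()}
    vectors = [{t: c * idf[t] for t, c in Counter(tokens).items()} for tokens in tokenized]
    return vectors, idf


def cosine(a, b):
    """Cosine similarity between two sparse vectors; 0.0 if either is empty."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def search(query, docs):
    """Rank document indices by TF-IDF cosine similarity to the query."""
    vectors, idf = build_tfidf(docs)
    q = {t: c * idf[t] for t, c in Counter(tokenize(query)).items() if t in idf}
    scores = [cosine(q, v) for v in vectors]
    ranking = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return ranking, scores


if __name__ == "__main__":
    # Hypothetical seller-written descriptions; the third one is uninformative.
    original = [
        "Stainless steel water bottle, 750 ml",
        "Cotton t-shirt, size M",
        "Item 4471, great gift",
    ]
    # Hypothetical captions standing in for image-to-text model output.
    captions = [
        "a silver metal water bottle",
        "a white t-shirt folded on a bed",
        "a red ceramic coffee mug on a wooden table",
    ]
    combined = [f"{o} {c}" for o, c in zip(original, captions)]
    for name, docs in [("original", original), ("original + caption", combined)]:
        ranking, scores = search("red coffee mug", docs)
        print(name, ranking, [round(s, 3) for s in scores])
```

Against the original descriptions the query "red coffee mug" matches nothing, because the mug's listing never says what the item is; once the caption is appended, the mug becomes the only item with a nonzero score.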
