Janghyeon Kim

LoL-PIM: Long-Context LLM Decoding with Scalable DRAM-PIM System

Dec 28, 2024

Abstract: The expansion of large language models (LLMs) to hundreds of billions of parameters poses significant challenges for computational resources, particularly data movement and memory bandwidth. Long-context LLMs, which process sequences of tens of thousands of tokens, further increase the demand on the memory system, because both the computational cost of the attention layers and the size of the key-value (KV) cache grow in proportion to the context length. Processing-in-Memory (PIM) maximizes memory bandwidth by moving compute to the data and can therefore address these bandwidth challenges; however, PIM does not readily scale to accelerate long-context LLMs because of limited per-module memory capacity, the inflexibility of fixed-function-unit PIM architectures, and static memory management. In this work, we propose LoL-PIM, a multi-node PIM architecture that accelerates long-context LLMs through hardware-software co-design. In particular, we show how pipeline parallelism can be exploited across multiple PIM modules, and we propose a direct PIM access (DPA) controller (a DMA engine for PIM) that enables dynamic PIM memory management and yields efficient PIM utilization across a diverse range of context lengths. We developed an MLIR-based compiler for LoL-PIM by extending a commercial PIM-based compiler; the software modifications were implemented and evaluated in this compiler, while the hardware changes were modeled in a simulator. Our evaluations demonstrate that LoL-PIM significantly improves throughput and reduces latency for long-context LLM inference, outperforming both multi-GPU and GPU-PIM systems (up to 8.54x and 16.0x speedup, respectively), thereby enabling more efficient deployment of LLMs in real-world applications.
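To make the claim that the KV cache grows in proportion to the context length concrete, the sketch below computes the cache size of a generic decoder-only transformer. The function name `kv_cache_bytes` and the model configuration are hypothetical and chosen only for illustration; they are not taken from the LoL-PIM paper, and real models (for example, those using grouped-query attention) may store fewer KV heads.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   context_len: int, batch_size: int = 1,
                   bytes_per_elem: int = 2) -> int:
    """Size of the key-value cache in bytes for a decoder-only transformer.

    The leading factor of 2 accounts for storing both keys and values;
    bytes_per_elem=2 corresponds to FP16/BF16 storage.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * context_len * batch_size * bytes_per_elem)


# Hypothetical 7B-class configuration chosen for illustration only;
# it is not taken from the LoL-PIM paper.
CONFIG = {"num_layers": 32, "num_kv_heads": 32, "head_dim": 128}

if __name__ == "__main__":
    for ctx in (4_096, 16_384, 32_768):
        gib = kv_cache_bytes(context_len=ctx, **CONFIG) / 2**30
        print(f"context={ctx:>6} tokens -> KV cache {gib:.1f} GiB per sequence")
```

Under this assumed configuration each token adds 0.5 MiB of cache, so a single 32K-token sequence needs 16 GiB for the KV cache alone, before counting model weights. This linear growth is why per-module memory capacity becomes a limiting factor for PIM at long context lengths.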

Human-Robot Interface to Operate Robotic Systems via Muscle Synergy-Based Kinodynamic Information Transfer

May 11, 2022

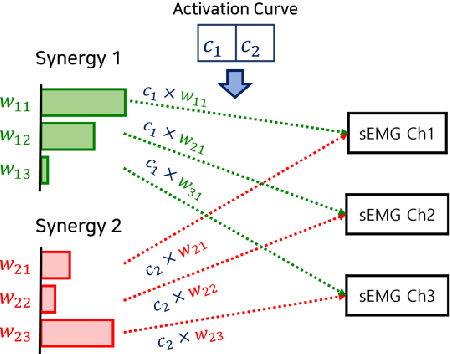

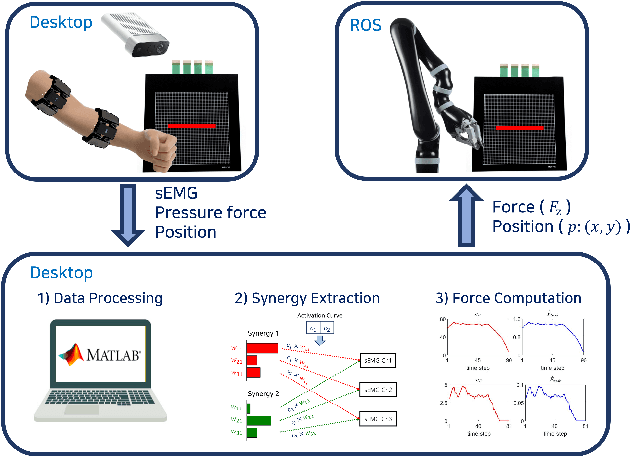

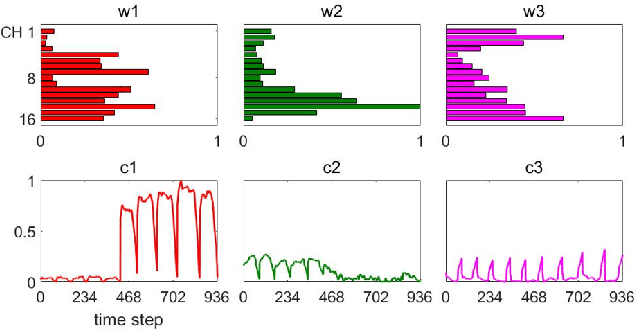

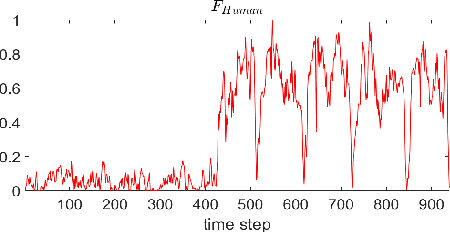

Abstract: When a human performs a specific task, the central nervous system is known to control modularized groups of muscles, called muscle synergies. In human-robot interface design, muscle synergies can therefore be used to reduce the dimensionality of the control signal as well as the complexity of classifying human posture and motion. In this paper, we propose an approach to designing a human-robot interface that enables a human operator to transfer kinodynamic control commands to robotic systems. A key feature of the proposed approach is that the muscle synergies and their corresponding activation curves are used to calculate the force exerted by a tool at the robot end effector. To verify the proposed approach, we built an experimental test bed consisting of two armband-type surface electromyography (sEMG) sensors, an RGB-D camera, and a Kinova Gen2 robotic manipulator. The results showed that both force and position commands could be successfully transferred to the robotic manipulator via our muscle synergy-based kinodynamic interface.
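The dimensionality-reduction idea behind muscle synergies can be illustrated with non-negative matrix factorization (NMF), a method commonly used in the synergy literature to factor multi-channel EMG envelopes V into synergy vectors W and activation curves H (V ≈ WH). The abstract does not state which extraction method the authors use, so this is a minimal sketch under that assumption, using Lee-Seung multiplicative updates in plain Python. The function names and the synthetic two-synergy data are hypothetical.

```python
import random

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def nmf(v: Matrix, rank: int, iters: int = 1000, seed: int = 0,
        eps: float = 1e-9) -> tuple[Matrix, Matrix]:
    """Factor non-negative V (muscles x samples) as W @ H.

    Columns of W are synergy vectors (muscle weightings); rows of H are
    their activation coefficients over time. Lee-Seung multiplicative
    updates for the Frobenius-norm objective keep W and H non-negative.
    """
    rng = random.Random(seed)
    m, n = len(v), len(v[0])
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(m)]
    h = [[rng.random() + 0.1 for _ in range(n)] for _ in range(rank)]
    for _ in range(iters):
        # H <- H * (W^T V) / (W^T W H)
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(n)]
             for i in range(rank)]
        # W <- W * (V H^T) / (W H H^T)
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)]
             for i in range(m)]
    return w, h


def relative_error(v: Matrix, w: Matrix, h: Matrix) -> float:
    """Frobenius-norm reconstruction error ||V - WH|| / ||V||."""
    approx = matmul(w, h)
    resid = sum((x - y) ** 2 for rv, ra in zip(v, approx) for x, y in zip(rv, ra))
    total = sum(x * x for row in v for x in row)
    return (resid / total) ** 0.5


def synthetic_emg(num_muscles: int = 8, num_samples: int = 40,
                  seed: int = 1) -> Matrix:
    """Hypothetical rectified-EMG envelopes generated from two known synergies."""
    rng = random.Random(seed)
    half = num_muscles // 2
    w_true = [[1.0, 0.1] if i < half else [0.1, 1.0] for i in range(num_muscles)]
    h_true = [[rng.random() for _ in range(num_samples)] for _ in range(2)]
    return matmul(w_true, h_true)


if __name__ == "__main__":
    emg = synthetic_emg()
    synergies, activations = nmf(emg, rank=2)
    err = relative_error(emg, synergies, activations)
    print(f"relative reconstruction error: {err:.4f}")
```

In this toy setting, eight EMG channels are summarized by two activation curves, which is the kind of reduced control signal the abstract refers to. How those activation curves are then mapped to an end-effector force is specific to the paper and is not modeled here.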