Jamison McGinley

Semantic Sensing and Planning for Human-Robot Collaboration in Uncertain Environments

Oct 20, 2021

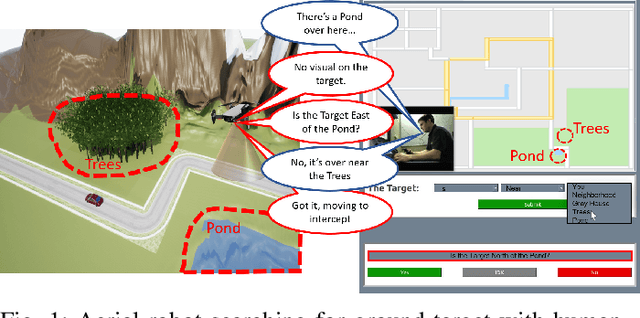

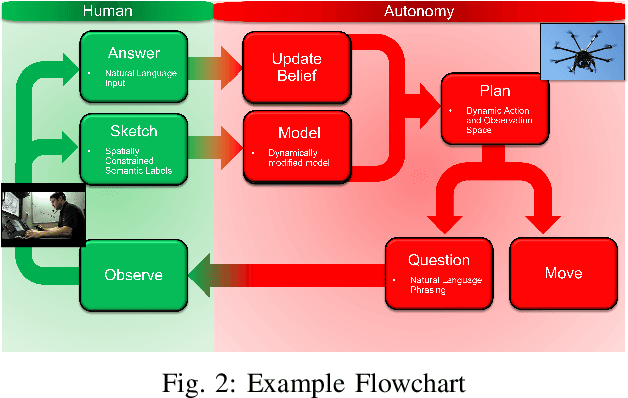

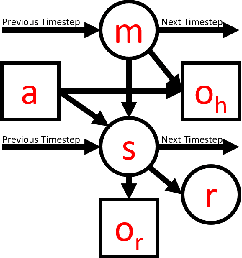

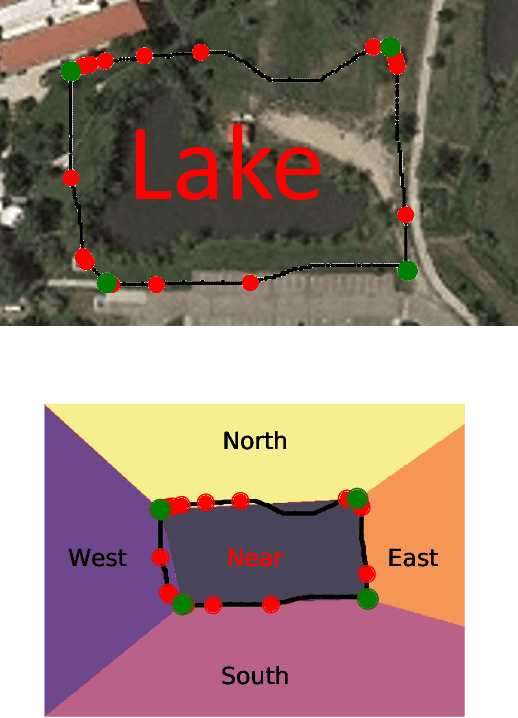

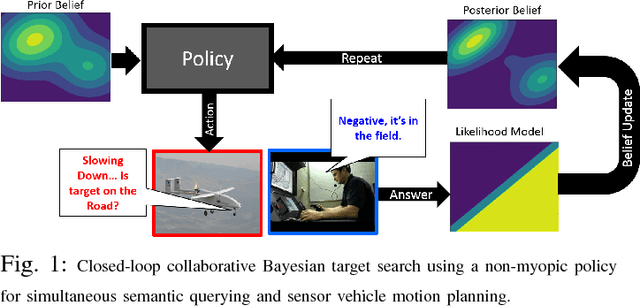

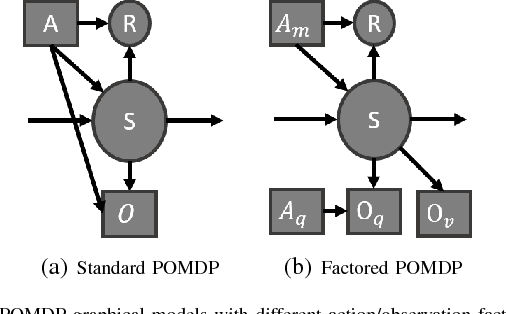

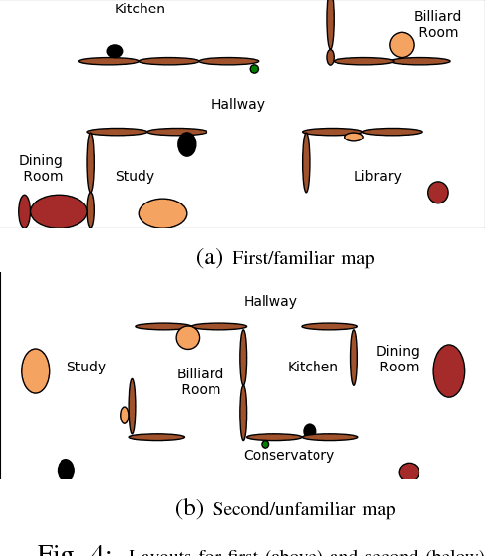

Abstract: Autonomous robots can benefit greatly from human-provided semantic characterizations of uncertain task environments and states. However, the development of integrated strategies which let robots model, communicate, and act on such soft data remains challenging. Here, a framework is presented for active semantic sensing and planning in human-robot teams which addresses these gaps by formally combining the benefits of online sampling-based POMDP policies, multi-modal semantic interaction, and Bayesian data fusion. This approach lets humans opportunistically impose model structure and extend the range of semantic soft data in uncertain environments by sketching and labeling arbitrary landmarks across the environment. Dynamic updating of the environment while searching for a mobile target allows robotic agents to actively query humans for novel and relevant semantic data, thereby improving beliefs of unknown environments and target states for improved online planning. Target search simulations show significant improvements in time and belief state estimates required for interception versus conventional planning based solely on robotic sensing. Human subject studies demonstrate an average doubling in dynamic target capture rate compared to the lone robot case, employing reasoning over a range of user characteristics and interaction modalities. Video of interaction can be found at https://youtu.be/Eh-82ZJ1o4I.

Closed-loop Bayesian Semantic Data Fusion for Collaborative Human-Autonomy Target Search

Jun 03, 2018

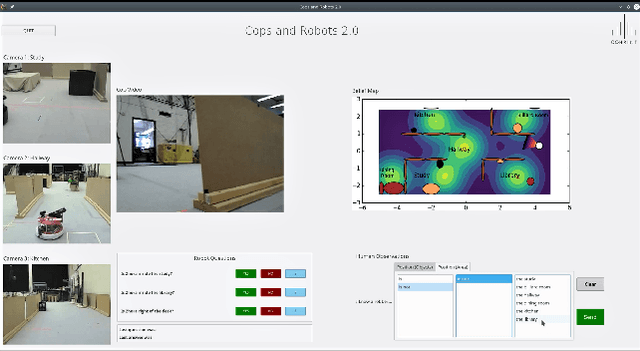

Abstract: In search applications, autonomous unmanned vehicles must be able to efficiently reacquire and localize mobile targets that can remain out of view for long periods of time in large spaces. As such, all available information sources must be actively leveraged, including imprecise but readily available semantic observations provided by humans. To achieve this, this work develops and validates a novel collaborative human-machine sensing solution for dynamic target search. Our approach uses continuous partially observable Markov decision process (CPOMDP) planning to generate vehicle trajectories that optimally exploit imperfect detection data from onboard sensors, as well as semantic natural language observations that can be specifically requested from human sensors. The key innovation is a scalable hierarchical Gaussian mixture model formulation for efficiently solving CPOMDPs with semantic observations in continuous dynamic state spaces. The approach is demonstrated and validated with a real human-robot team engaged in dynamic indoor target search and capture scenarios on a custom testbed.
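To make the idea of fusing a human's semantic observation into a target belief more concrete, here is a minimal sketch. It is not the paper's method: the abstract describes a hierarchical Gaussian mixture formulation over continuous states, whereas this example swaps in a simple discrete 1-D grid belief. The function names, the landmark cell, and the parameters `sigma`, `p_true`, and `p_false` are all hypothetical values chosen for illustration.

```python
import math

def semantic_likelihood(cell, landmark, sigma=1.5, p_true=0.9, p_false=0.1):
    """P(human reports 'target is near landmark' | target is in `cell`).

    Hypothetical soft likelihood: the report is most probable when the
    target is at the landmark and decays toward a false-report floor.
    """
    closeness = math.exp(-0.5 * ((cell - landmark) / sigma) ** 2)
    return p_false + (p_true - p_false) * closeness

def fuse(prior, landmark, said_near=True):
    """Bayes update of a discrete belief with one semantic observation."""
    likes = [semantic_likelihood(c, landmark) for c in range(len(prior))]
    if not said_near:
        # The complementary report: "target is NOT near the landmark".
        likes = [1.0 - like for like in likes]
    unnormalized = [p * like for p, like in zip(prior, likes)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]

# Uniform prior over 10 grid cells; the human says the target is near cell 7.
prior = [0.1] * 10
posterior = fuse(prior, landmark=7)
```

After the update, probability mass concentrates around the landmark cell while distant cells keep a small residual probability, reflecting that the human report is treated as imperfect rather than certain.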
