James Wingate

SAM2CLIP2SAM: Vision Language Model for Segmentation of 3D CT Scans for Covid-19 Detection

Jul 23, 2024

Abstract: This paper presents a new approach for effective segmentation of images that can be integrated into any model and methodology; the paradigm that we choose is classification of medical images (3-D chest CT scans) for Covid-19 detection. Our approach includes a combination of vision-language models that segment the CT scans, which are then fed to a deep neural architecture, named RACNet, for Covid-19 detection. In particular, a novel framework, named SAM2CLIP2SAM, is introduced for segmentation that leverages the strengths of both Segment Anything Model (SAM) and Contrastive Language-Image Pre-Training (CLIP) to accurately segment the right and left lungs in CT scans, subsequently feeding these segmented outputs into RACNet for classification of COVID-19 and non-COVID-19 cases. At first, SAM produces multiple part-based segmentation masks for each slice in the CT scan; then CLIP selects only the masks that are associated with the regions of interest (ROIs), i.e., the right and left lungs; finally SAM is given these ROIs as prompts and generates the final segmentation mask for the lungs. Experiments are presented across two Covid-19 annotated databases which illustrate the improved performance obtained when our method has been used for segmentation of the CT scans.
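The three-stage flow described in the abstract (mask proposals, language-guided selection, prompted re-segmentation) can be sketched per slice roughly as follows. This is a minimal, library-free illustration and not the authors' implementation: `propose_masks`, `score_mask`, and `refine_with_boxes` are hypothetical callables standing in for SAM's mask generation, a CLIP image-text similarity score, and SAM's prompted prediction. The use of bounding boxes as the prompt type and the `threshold` value are also assumptions, since the abstract does not specify them.

```python
from typing import Any, Callable, List, Optional, Sequence, Tuple

Mask = List[List[bool]]          # binary mask for one slice, indexed [row][col]
Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive


def mask_to_box(mask: Mask) -> Optional[Box]:
    """Return the tight bounding box of the True pixels, or None if empty."""
    coords = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        return None
    xs, ys = zip(*coords)
    return (min(xs), min(ys), max(xs), max(ys))


def select_roi_masks(
    masks: Sequence[Mask], scores: Sequence[float], threshold: float
) -> List[Mask]:
    """Keep only the masks whose ROI score reaches the threshold."""
    return [m for m, s in zip(masks, scores) if s >= threshold]


def segment_slice(
    slice_image: Any,
    propose_masks: Callable[[Any], Sequence[Mask]],    # hypothetical SAM stand-in
    score_mask: Callable[[Any, Mask], float],          # hypothetical CLIP stand-in
    refine_with_boxes: Callable[[Any, List[Box]], Any],  # hypothetical SAM stand-in
    threshold: float = 0.5,                            # assumed value
) -> Optional[Any]:
    """Run the propose -> select -> refine flow on a single CT slice."""
    # Stage 1: part-based candidate masks for the slice.
    candidates = propose_masks(slice_image)
    # Stage 2: score each candidate against the region of interest (the lungs).
    scores = [score_mask(slice_image, m) for m in candidates]
    kept = select_roi_masks(candidates, scores, threshold)
    # Stage 3: turn the kept masks into prompts and request the final mask.
    boxes = [b for b in (mask_to_box(m) for m in kept) if b is not None]
    if not boxes:
        return None  # no lung region found in this slice
    return refine_with_boxes(slice_image, boxes)
```

Passing the three stages in as callables keeps the sketch independent of any particular SAM or CLIP package; in practice each stand-in would wrap the corresponding model call, and the per-slice outputs would be stacked before classification.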

A Unified Deep Learning Approach for Prediction of Parkinson's Disease

Nov 25, 2019

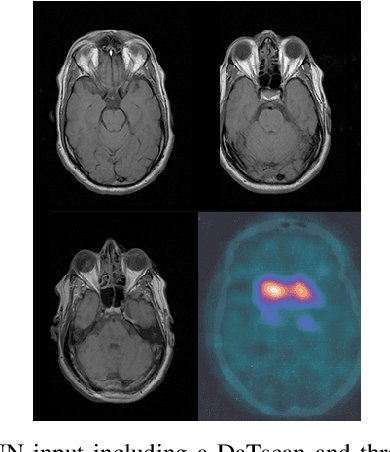

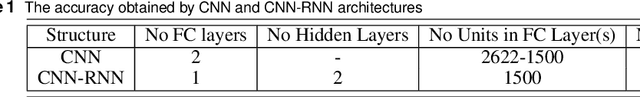

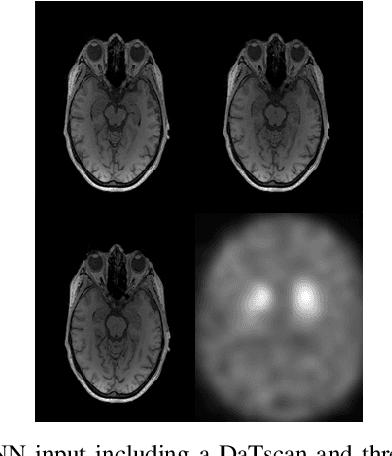

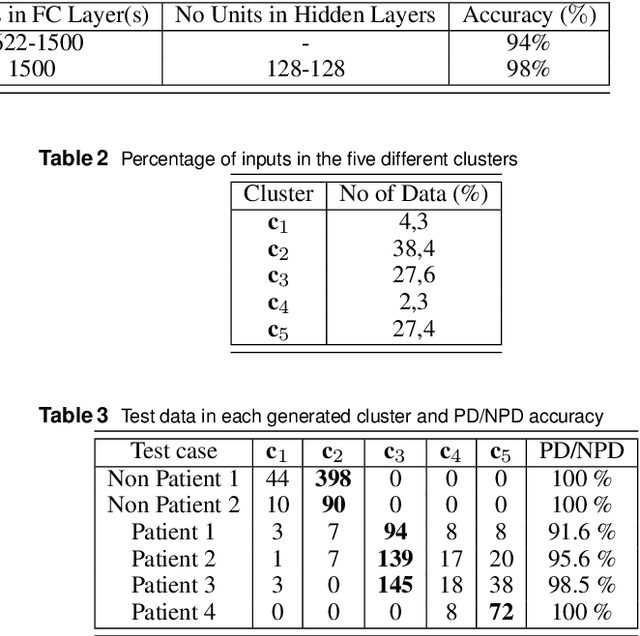

Abstract: The paper presents a novel approach, based on deep learning, for diagnosis of Parkinson's disease through medical imaging. The approach includes analysis and use of the knowledge extracted by Deep Convolutional and Recurrent Neural Networks (DNNs) when trained with medical images, such as Magnetic Resonance Images and DaTscans. Internal representations of the trained DNNs constitute the extracted knowledge which is used in a transfer learning and domain adaptation manner, so as to create a unified framework for prediction of Parkinson's across different medical environments. A large experimental study is presented illustrating the ability of the proposed approach to effectively predict Parkinson's, using different medical image sets from real environments.
