James Janopaul-Naylor

Deep Convolutional Neural Networks for Imaging Data Based Survival Analysis of Rectal Cancer

Jan 05, 2019

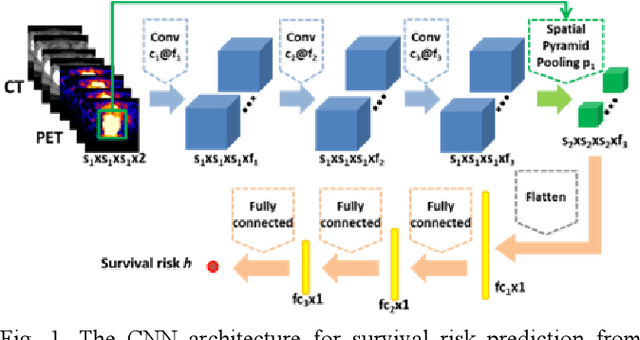

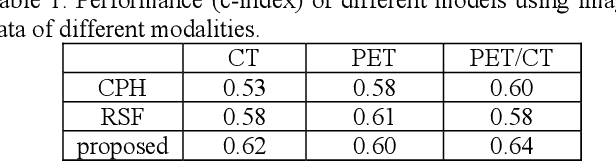

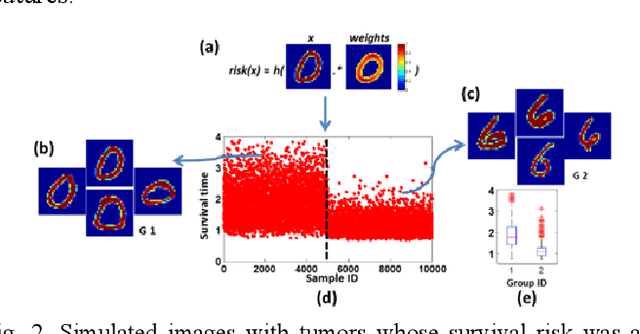

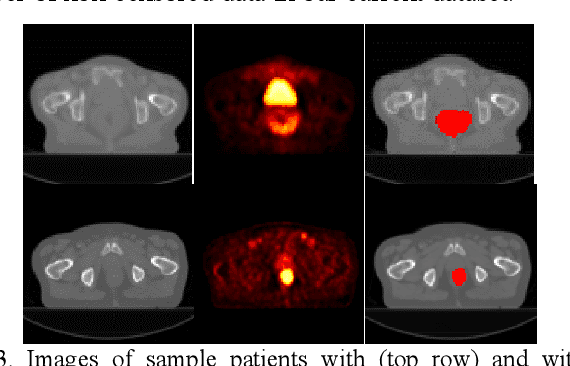

Abstract: Recent radiomic studies have shown promising performance of deep learning techniques in learning radiomic features and fusing multimodal imaging data. Most existing deep-learning-based radiomic studies build predictive models in a pattern classification setting, which is not appropriate for survival analysis studies where some data samples have incomplete observations. To improve existing survival analysis techniques whose performance hinges on imaging features, we propose a deep learning method to build survival regression models by optimizing imaging features with deep convolutional neural networks (CNNs) in a proportional hazards model. To make the CNNs applicable to tumors of varied sizes, a spatial pyramid pooling strategy is adopted. Our method has been validated on a simulated imaging dataset and an FDG-PET/CT dataset of patients treated for locally advanced rectal cancer. Compared with survival prediction models built upon hand-crafted radiomic features using the Cox proportional hazards model and random survival forests, our method achieved competitive prediction performance.
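The two ingredients named in the abstract, a proportional hazards objective that handles censored samples and spatial pyramid pooling for inputs of varied size, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the 2D `(C, H, W)` feature-map layout, the max-pooling choice, the pyramid levels `(1, 2, 4)`, and the assumption of untied event times are all illustrative assumptions.

```python
import numpy as np


def neg_cox_partial_log_likelihood(risk, time, event):
    """Negative Cox partial log-likelihood, averaged over observed events.

    risk  : predicted log-hazard score per sample (e.g. a CNN's scalar output)
    time  : observed follow-up time per sample (assumed to have no ties)
    event : 1 if the event was observed, 0 if the sample is censored
    """
    risk = np.asarray(risk, dtype=float)
    time = np.asarray(time, dtype=float)
    event = np.asarray(event, dtype=float)

    # Sort by descending time so the risk set of sample i is samples 0..i.
    order = np.argsort(-time, kind="stable")
    risk, event = risk[order], event[order]

    # Running log-sum-exp of the scores over each risk set.
    log_risk_set = np.logaddexp.accumulate(risk)

    # Censored samples add no term of their own, but they still appear in
    # the risk sets of events that happened before their censoring time.
    n_events = max(event.sum(), 1.0)
    return -np.sum((risk - log_risk_set) * event) / n_events


def spatial_pyramid_pool(feature_map, levels=(1, 2, 4)):
    """Max-pool a (C, H, W) feature map into a fixed-length vector.

    Each level n splits the map into an n x n grid of bins, so the output
    length is C * sum(n * n for n in levels) regardless of H and W
    (assuming H and W are at least max(levels)).
    """
    c, h, w = feature_map.shape
    pooled = []
    for n in levels:
        # Integer bin edges; every bin is non-empty when h, w >= n.
        rows = [(i * h) // n for i in range(n + 1)]
        cols = [(j * w) // n for j in range(n + 1)]
        for i in range(n):
            for j in range(n):
                bin_ = feature_map[:, rows[i]:rows[i + 1], cols[j]:cols[j + 1]]
                pooled.append(bin_.max(axis=(1, 2)))
    return np.concatenate(pooled)


# Two "tumors" of different spatial size map to vectors of the same length.
small = spatial_pyramid_pool(np.random.rand(3, 5, 7))
large = spatial_pyramid_pool(np.random.rand(3, 9, 4))
```

In a full model, the pooled vector would feed fully connected layers that output the scalar `risk`, and the loss above would be minimized by gradient descent in an automatic differentiation framework; that wiring is omitted here.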
