Jakeoung Koo

Bayesian Based Unrolling for Reconstruction and Super-resolution of Single-Photon Lidar Systems

Jul 24, 2023

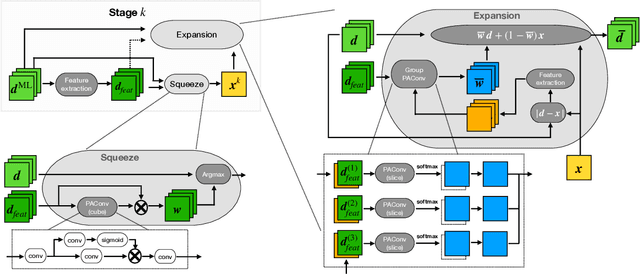

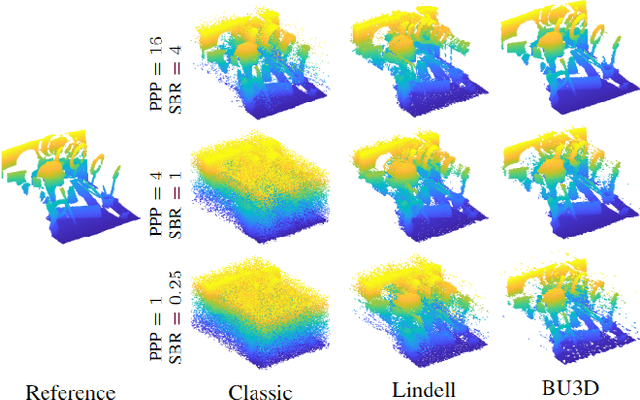

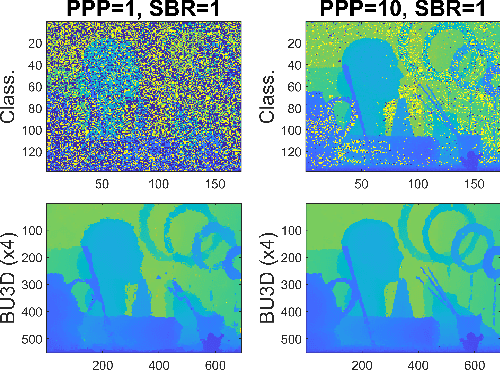

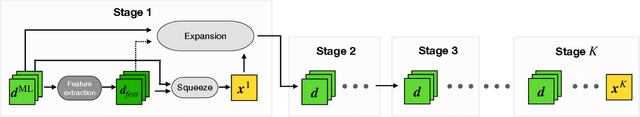

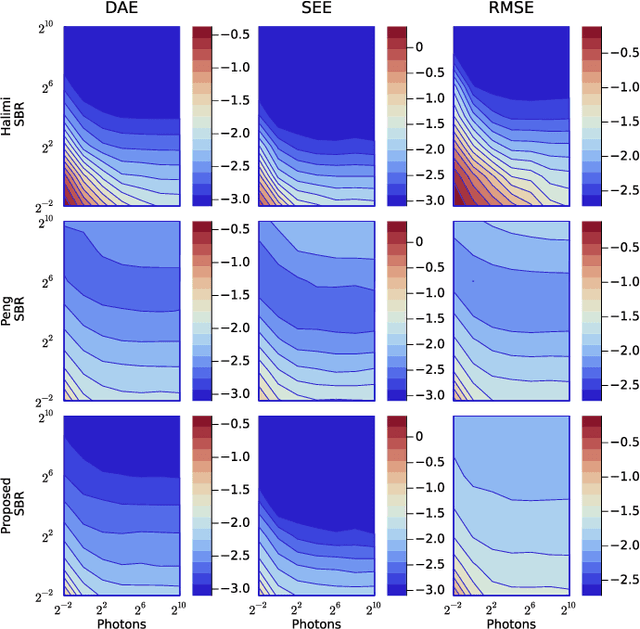

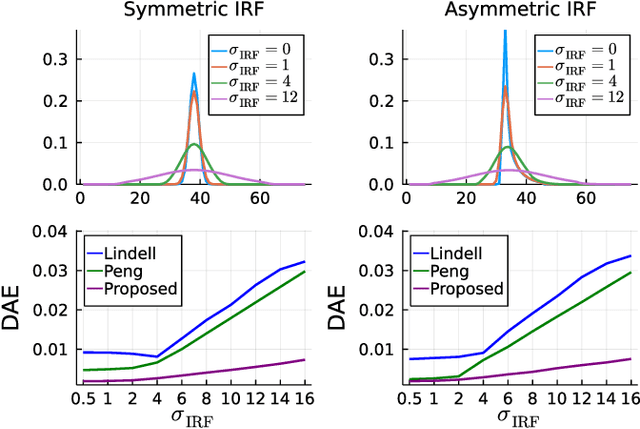

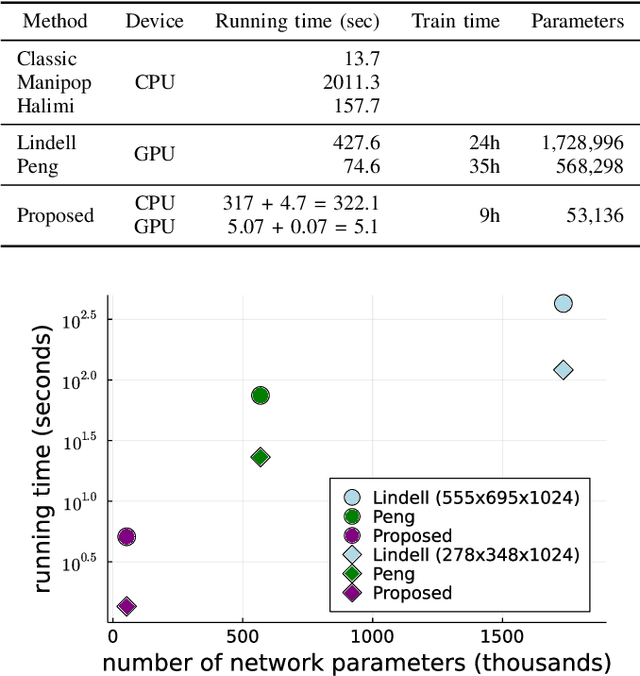

Abstract: Deploying 3D single-photon Lidar imaging in real-world applications faces several challenges, notably imaging in high-noise environments and with sensors of limited resolution. This paper presents a deep learning algorithm based on unrolling a Bayesian model for the reconstruction and super-resolution of 3D single-photon Lidar. The resulting algorithm combines the advantages of statistical and learning-based frameworks, providing best estimates with improved network interpretability. Compared to existing learning-based solutions, the proposed architecture requires fewer trainable parameters, is more robust to noise and mismodelling of the system impulse response function, and provides richer information about the estimates, including uncertainty measures. Experiments on synthetic and real data show competitive inference quality and computational complexity when compared to state-of-the-art algorithms. This short paper is based on contributions published in [1] and [2].

A Bayesian Based Deep Unrolling Algorithm for Single-Photon Lidar Systems

Jan 26, 2022

Abstract: Deploying 3D single-photon Lidar imaging in real-world applications faces multiple challenges, including imaging in high-noise environments. Several algorithms have been proposed to address these issues based on statistical or learning-based frameworks. Statistical methods provide rich information about the inferred parameters but are limited by the assumed model correlation structures, while deep learning methods show state-of-the-art performance but offer limited inference guarantees, preventing their wider use in critical applications. This paper unrolls a statistical Bayesian algorithm into a new deep learning architecture for robust image reconstruction from single-photon Lidar data, i.e., the algorithm's iterative steps are converted into neural network layers. The resulting algorithm combines the advantages of statistical and learning-based frameworks, providing best estimates with improved network interpretability. Compared to existing learning-based solutions, the proposed architecture requires fewer trainable parameters, is more robust to noise and mismodelling effects, and provides richer information about the estimates, including uncertainty measures. Experiments on synthetic and real data show competitive inference quality and computational complexity when compared to state-of-the-art algorithms.
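
The idea of converting iterative steps into network layers can be illustrated with a minimal sketch. This is not the paper's algorithm: it assumes a toy 1D denoising problem with a Gaussian likelihood and a Gaussian smoothness prior, and unrolls plain gradient descent on the resulting MAP objective. All names (`laplacian`, `map_objective`, `layer`, `unrolled_net`) and the parameter values are hypothetical. In a trained network, each layer's `(step, lam)` pair would be learned from data rather than fixed by hand.

```python
def laplacian(x):
    """Apply D^T D, where D is the first-difference operator (replicated ends)."""
    n = len(x)
    out = []
    for i in range(n):
        left = x[i - 1] if i > 0 else x[i]
        right = x[i + 1] if i < n - 1 else x[i]
        out.append(2 * x[i] - left - right)
    return out


def map_objective(x, y, lam):
    """Negative log-posterior: Gaussian data term plus Gaussian smoothness prior."""
    data = sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    smooth = sum((x[i + 1] - x[i]) ** 2 for i in range(len(x) - 1))
    return 0.5 * data + 0.5 * lam * smooth


def layer(x, y, step, lam):
    """One unrolled iteration: a single gradient step on the MAP objective."""
    lx = laplacian(x)
    return [xi - step * ((xi - yi) + lam * li) for xi, yi, li in zip(x, y, lx)]


def unrolled_net(y, params):
    """Fixed-depth network: one layer per (step, lam) pair in params."""
    x = list(y)  # initialise with the noisy observation
    for step, lam in params:
        x = layer(x, y, step, lam)
    return x


# Hand-picked noisy step signal and 8 layers with identical, untrained parameters.
y = [0.0, 0.2, -0.1, 0.1, 1.1, 0.9, 1.2, 1.0]
params = [(0.1, 2.0)] * 8
x_hat = unrolled_net(y, params)
print(map_objective(y, y, 2.0), map_objective(x_hat, y, 2.0))
```

Because the depth is fixed and each layer has only two parameters, the parameter count stays small and every layer keeps a clear meaning as one optimisation step, which is the interpretability argument made for unrolled architectures in general.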

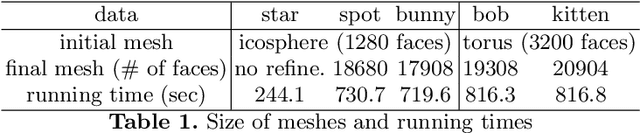

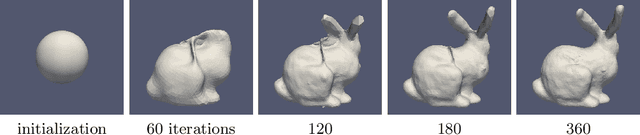

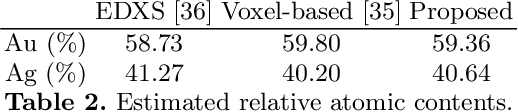

Shape from Projections via Differentiable Forward Projector

Jun 29, 2020

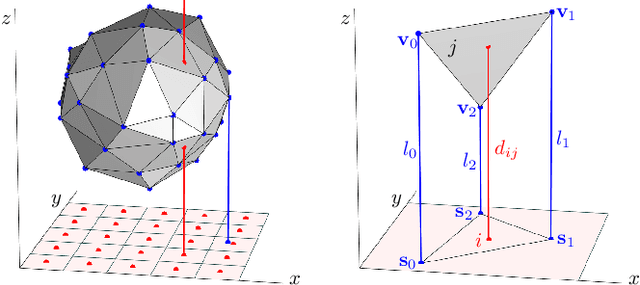

Abstract: In tomography, forward projection of 3D meshes has mostly been studied to simulate data acquisition. However, such works did not consider the inverse process of estimating shapes from projections. In this paper, we propose a differentiable forward projector for 3D meshes, to bridge the gap between the forward model for 3D surfaces and optimization. We view the forward projection as a rendering process, and make it differentiable by extending recent work in differentiable rasterization. We use the proposed forward projector to reconstruct 3D shapes directly from projections. Experimental results for single-object problems show that our method outperforms traditional voxel-based methods on noisy simulated data. We also apply our method to real data from electron tomography to estimate the shapes of some nanoparticles.
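
To make the link between a differentiable forward model and shape optimization concrete, here is a toy 2D analogue. It is not the paper's mesh projector: it assumes a single disk whose only unknown is its radius, a parallel-beam projection computed as column sums, and a sigmoid-softened edge (width `tau`) so that the projection varies smoothly with the radius. The radius is then recovered from the projection with Gauss-Newton updates. The function names, grid size, and `tau` are hypothetical choices made for illustration.

```python
import math


def soft_disk_projection(radius, n=48, tau=0.05):
    """Project a soft-edged disk along y; return the projection and d(projection)/d(radius).

    Occupancy is sigmoid((radius - distance) / tau), so column sums change
    smoothly with the radius instead of jumping when a pixel crosses the edge.
    """
    coords = [-1.0 + 2.0 * (i + 0.5) / n for i in range(n)]
    dy = 2.0 / n
    proj, dproj = [], []
    for x in coords:
        p = dp = 0.0
        for y in coords:
            s = 1.0 / (1.0 + math.exp(-(radius - math.hypot(x, y)) / tau))
            p += s * dy                      # line integral of occupancy
            dp += s * (1.0 - s) / tau * dy   # analytic derivative w.r.t. radius
        proj.append(p)
        dproj.append(dp)
    return proj, dproj


def fit_radius(target, r0=0.3, iters=30):
    """Estimate the radius from a projection using Gauss-Newton steps."""
    r = r0
    for _ in range(iters):
        proj, dproj = soft_disk_projection(r)
        residual = [p - t for p, t in zip(proj, target)]
        grad = sum(d * e for d, e in zip(dproj, residual))  # J^T residual
        curv = sum(d * d for d in dproj)                     # J^T J
        r -= grad / curv
    return r


# Simulate a noise-free projection of a disk, then recover its radius.
target, _ = soft_disk_projection(0.6)
print(fit_radius(target, r0=0.3))
```

With a hard-edged disk the projection would be piecewise constant in the radius on a pixel grid, so the derivative would carry no useful information. Softening the edge is the same kind of relaxation that differentiable rasterization applies to mesh boundaries.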
