Jaka Sodnik

Contactless pulse rate assessment: Results and insights for application in driving simulator

May 02, 2025

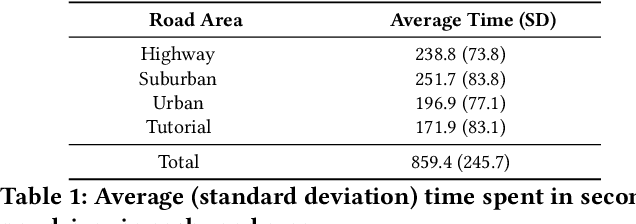

Abstract: Camera-based monitoring of Pulse Rate (PR) enables continuous and unobtrusive assessment of a driver's state, allowing estimation of fatigue or stress that could impact traffic safety. Commonly used wearable Photoplethysmography (PPG) sensors, while effective, suffer from motion artifacts and user discomfort. This study explores the feasibility of non-contact PR assessment using facial video recordings captured by a Red, Green, and Blue (RGB) camera in a driving simulation environment. The proposed approach detects subtle skin color variations due to blood flow and compares extracted PR values against reference measurements from a wearable wristband, the Empatica E4. We evaluate the impact of Eulerian Video Magnification (EVM) on signal quality and assess statistical differences in PR between age groups. Data from 80 recordings of 64 healthy subjects covering a PR range of 45-160 bpm are analyzed, and signal extraction accuracy is quantified using metrics such as Mean Absolute Error (MAE) and Root Mean Square Error (RMSE). Results show that EVM slightly improves PR estimation accuracy, reducing MAE from 6.48 bpm to 5.04 bpm and RMSE from 7.84 bpm to 6.38 bpm. A statistically significant difference is found between older and younger groups with both video-based and ground truth evaluation procedures. Additionally, we discuss Empatica E4 bias and its potential impact on the overall assessment of contact measurements. Altogether, the findings demonstrate the feasibility of camera-based PR monitoring in dynamic environments and its potential integration into driving simulators for real-time physiological assessment.
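The two accuracy metrics named in the abstract can be illustrated with a minimal sketch. The function names and the sample values below are hypothetical and are not taken from the study; the code only shows how MAE and RMSE are computed over paired PR estimates and reference readings.

```python
import math


def mae(estimates, references):
    """Mean Absolute Error between paired pulse-rate values (bpm)."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(e - r) for e, r in zip(estimates, references))
    return total / len(estimates)


def rmse(estimates, references):
    """Root Mean Square Error between paired pulse-rate values (bpm)."""
    if len(estimates) != len(references) or not estimates:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum((e - r) ** 2 for e, r in zip(estimates, references))
    return math.sqrt(total / len(estimates))


# Hypothetical example values (bpm), not data from the study.
video_pr = [72.0, 80.0, 65.0, 90.0]
reference_pr = [70.0, 84.0, 65.0, 96.0]

print(f"MAE:  {mae(video_pr, reference_pr):.2f} bpm")
print(f"RMSE: {rmse(video_pr, reference_pr):.2f} bpm")
```

Because RMSE squares each error before averaging, it weights large deviations more heavily than MAE, which is why reporting both gives a fuller picture of estimation quality.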

Data-driven Method for Generating Synthetic Electrogastrogram Time Series

Mar 04, 2023

Abstract: Objective: A new method for generating realistic electrogastrogram (EGG) time series is presented and evaluated. Methods: We used EGG data from an existing open database to set model parameters and Monte Carlo simulation to evaluate a new model based on the hypothesis that EGG dominant frequency should be statistically significantly different between fasting and postprandial states. Additionally, we illustrated method customization for generating artificial EGG alterations caused by simulator sickness. Results: The user can specify the following input parameters of the developed data-driven model: (1) duration of the generated sequence, (2) sampling frequency, (3) recording state (postprandial or fasting state), (4) breathing artifact contamination, (5) a flag indicating whether the output should produce plots, (6) seed for the sake of reproducibility, (7) pauses in the gastric rhythm (arrhythmia occurrence), and (8) overall noise contamination to produce proper variability in EGG signals. The simulated EGG provided the expected results in the Monte Carlo simulation, while features obtained from the synthetic EGG signal resembling simulator sickness occurrence displayed the expected trends. Conclusion: The code for generation of synthetic EGG time series is freely available and can be further customized to assess the robustness of signal processing algorithms to noise, and especially to movement artifacts, as well as to simulate alterations of gastric electrical activity. Significance: The proposed approach is customized for EGG data synthesis, but it can be extended to other biosignals of a similar nature, such as the electroencephalogram.
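To make the role of the input parameters more concrete, here is a toy sketch of a seeded sinusoid-plus-noise generator. It is not the authors' data-driven model: the function name `synth_egg`, the default dominant frequency of 3 cycles per minute, the 0.25 Hz breathing component, and the Gaussian noise model are all assumptions chosen for illustration.

```python
import math
import random


def synth_egg(duration_s, fs_hz, dominant_cpm=3.0, breathing_amp=0.0,
              noise_std=0.05, seed=None):
    """Return a toy EGG-like sequence as a list of floats.

    Assumptions (not from the paper): a single sinusoid at `dominant_cpm`
    cycles per minute stands in for the gastric rhythm, breathing is a
    0.25 Hz sinusoid, and noise is additive Gaussian.
    """
    rng = random.Random(seed)          # seeded generator for reproducibility
    n_samples = int(duration_s * fs_hz)
    f_gastric = dominant_cpm / 60.0    # convert cycles per minute to Hz
    f_breath = 0.25                    # assumed breathing rate in Hz
    signal = []
    for i in range(n_samples):
        t = i / fs_hz
        x = math.sin(2.0 * math.pi * f_gastric * t)
        x += breathing_amp * math.sin(2.0 * math.pi * f_breath * t)
        x += rng.gauss(0.0, noise_std)
        signal.append(x)
    return signal


# Two runs with the same seed yield identical sequences.
a = synth_egg(duration_s=60, fs_hz=2, breathing_amp=0.1, seed=42)
b = synth_egg(duration_s=60, fs_hz=2, breathing_amp=0.1, seed=42)
print(len(a), a == b)
```

A recording-state switch could be emulated here by passing a different `dominant_cpm` for fasting and postprandial runs, although the actual values would have to come from the open database the authors used.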

Sensing Time Effectiveness for Fitness to Drive Evaluation in Neurological Patients

May 31, 2022

Abstract: We present a method to automatically calculate sensing time (ST) from eye tracker data in subjects with neurological impairment using a driving simulator. ST is the time interval a person needs to notice a stimulus after its first occurrence. Specifically, we measured the time from the moment the children started to cross the street until the drivers directed their gaze to the children. In comparison to the commonly used reaction time, ST does not require additional neuro-muscular responses such as braking and presents unique information on the sensory function. From 108 neurological patients recruited for the study, the analysis of ST was performed in 56 patients overall to assess fit-, unfit-, and conditionally-fit-to-drive patients. The results showed that the proposed method based on the YOLO (You Only Look Once) object detector is efficient for computing STs from the eye tracker data in neurological patients. We obtained discriminative results for fit-to-drive patients by application of Tukey's Honest Significant Difference post hoc test (p < 0.01), while no difference was observed between conditionally-fit and unfit-to-drive groups (p = 0.542). Moreover, we show that time-to-collision (TTC), initial gaze distance (IGD) from pedestrians, and speed at the hazard onset did not influence the result, while the only significant interaction is among fitness, IGD, and TTC on ST. Although the proposed method can be applied to assess fitness to drive, we provide directions for future driving simulation-based evaluation and propose a processing workflow to secure reliable ST calculation in other domains such as psychology, neuroscience, and marketing.
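The definition of ST can be sketched as a search for the first frame, at or after stimulus onset, in which the gaze point falls inside the detected pedestrian bounding box. The frame layout, the `sensing_time` function, and the sample values are hypothetical; the abstract does not describe the actual data structures, and in the study the boxes come from a YOLO detector rather than being supplied by hand.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]           # (x_min, y_min, x_max, y_max)
Frame = Tuple[float, float, float, Optional[Box]]  # (time_s, gaze_x, gaze_y, box)


def sensing_time(frames: List[Frame], onset_t: float) -> Optional[float]:
    """Return seconds from onset until the gaze first lands inside the box.

    Returns None if the gaze never reaches the detected object.
    """
    for t, gaze_x, gaze_y, box in frames:
        if t < onset_t or box is None:
            continue  # before the stimulus appears, or nothing detected
        x_min, y_min, x_max, y_max = box
        if x_min <= gaze_x <= x_max and y_min <= gaze_y <= y_max:
            return t - onset_t
    return None


# Hypothetical frames: the object appears at 0.5 s, the gaze reaches it at 1.0 s.
frames = [
    (0.0, 10.0, 10.0, None),
    (0.5, 10.0, 10.0, (100.0, 100.0, 200.0, 200.0)),
    (1.0, 150.0, 150.0, (100.0, 100.0, 200.0, 200.0)),
]
print(sensing_time(frames, onset_t=0.5))
```

Returning `None` rather than a sentinel number keeps missed stimuli distinguishable from very long sensing times in any later statistics.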

Driver2vec: Driver Identification from Automotive Data

Feb 10, 2021

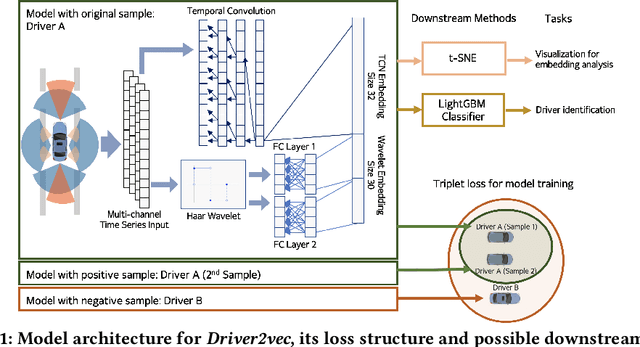

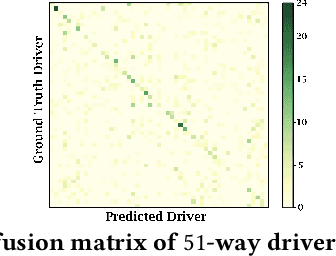

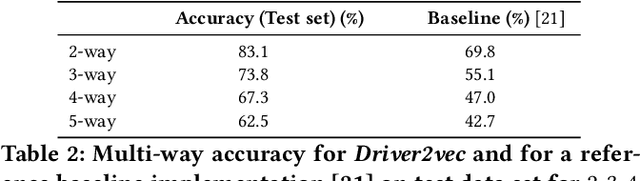

Abstract: With increasing focus on privacy protection, alternative methods to identify the vehicle operator without the use of biometric identifiers have gained traction for automotive data analysis. The wide variety of sensors installed on modern vehicles enables autonomous driving, reduces accidents, and improves vehicle handling. On the other hand, the data these sensors collect reflect drivers' habits. Drivers' use of turn indicators, following distance, rate of acceleration, etc. can be transformed into an embedding that is representative of their behavior and identity. In this paper, we develop a deep learning architecture (Driver2vec) to map a short interval of driving data into an embedding space that represents the driver's behavior to assist in driver identification. We develop a custom model that leverages the performance gains of temporal convolutional networks, the embedding separation power of triplet loss, and the classification accuracy of gradient boosting decision trees. Trained on a dataset of 51 drivers provided by Nervtech, Driver2vec accurately identifies the driver from a short 10-second interval of sensor data, achieving an average pairwise driver identification accuracy of 83.1%, which is remarkably higher than the performance obtained in previous studies. We then analyze Driver2vec to show that its performance is consistent across scenarios and that the modeling choices are sound.
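The embedding separation role of triplet loss can be illustrated with one common formulation, shown below as a minimal sketch. The Euclidean distance, the margin of 1.0, and the two-dimensional vectors are assumptions for illustration; the abstract does not specify the distance measure, margin, or embedding size used in Driver2vec.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Hinge-style triplet loss: max(0, d(a, p) - d(a, n) + margin).

    The loss is zero once the negative is farther from the anchor than
    the positive by at least `margin`.
    """
    return max(0.0, euclidean(anchor, positive)
               - euclidean(anchor, negative) + margin)


# Hypothetical 2-D embeddings: anchor and positive from the same driver,
# negative from a different driver.
anchor = [0.0, 0.0]
same_driver = [0.0, 1.0]
other_driver = [3.0, 4.0]

print(triplet_loss(anchor, same_driver, other_driver))  # well separated
print(triplet_loss(anchor, other_driver, same_driver))  # roles swapped
```

Minimizing this quantity over many triplets pulls intervals from the same driver together and pushes intervals from different drivers apart, which is what makes the resulting embedding usable by a downstream classifier.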
