Jaime Ruiz-Serra

Artificial Theory of Mind and Self-Guided Social Organisation

Nov 14, 2024

Abstract:One of the challenges artificial intelligence (AI) faces is how a collection of agents coordinate their behaviour to achieve goals that are not reachable by any single agent. In a recent article by Ozmen et al., this was framed as one of six grand challenges: that AI needs to respect human cognitive processes at the human-AI interaction frontier. We suggest that this extends to the AI-AI frontier and that it should also reflect human psychology, as it is the only successful framework we have from which to build out. In this extended abstract we first make the case for collective intelligence in a general setting, drawing on recent work from single neuron complexity in neural networks and ant network adaptability in ant colonies. From there we introduce how species relate to one another in an ecological network via niche selection, niche choice, and niche conformity with the aim of forming an analogy with human social network development as new agents join together and coordinate. From there we show how our social structures are influenced by our neuro-physiology, our psychology, and our language. This emphasises how individual people within a social network influence the structure and performance of that network in complex tasks, and that cognitive faculties such as Theory of Mind play a central role. We finish by discussing the current state of the art in AI and where there is potential for further development of a socially embodied collective artificial intelligence that is capable of guiding its own social structures.

Theory of Mind Enhances Collective Intelligence

Nov 14, 2024

Abstract:Collective Intelligence plays a central role in a large variety of fields, from economics and evolutionary theory to neural networks and eusocial insects, and it is also core to much of the work on emergence and self-organisation in complex systems theory. However, in human collective intelligence there is still much more to be understood in the relationship between specific psychological processes at the individual level and the emergence of self-organised structures at the social level. Previously psychological factors have played a relatively minor role in the study of collective intelligence as the principles are often quite general and applicable to humans just as readily as insects or other agents without sophisticated psychologies. In this article we emphasise, with examples from other complex adaptive systems, the broad applicability of collective intelligence principles while the mechanisms and time-scales differ significantly between examples. We contend that flexible collective intelligence in human social settings is improved by our use of a specific cognitive tool: our Theory of Mind. We identify several key characteristics of psychologically mediated collective intelligence and show that the development of a Theory of Mind is a crucial factor distinguishing social collective intelligence from general collective intelligence. We then place these capabilities in the context of the next steps in artificial intelligence embedded in a future that includes an effective human-AI hybrid social ecology.

Factorised Active Inference for Strategic Multi-Agent Interactions

Nov 11, 2024

Abstract:Understanding how individual agents make strategic decisions within collectives is important for advancing fields as diverse as economics, neuroscience, and multi-agent systems. Two complementary approaches can be integrated to this end. The Active Inference framework (AIF) describes how agents employ a generative model to adapt their beliefs about and behaviour within their environment. Game theory formalises strategic interactions between agents with potentially competing objectives. To bridge the gap between the two, we propose a factorisation of the generative model whereby each agent maintains explicit, individual-level beliefs about the internal states of other agents, and uses them for strategic planning in a joint context. We apply our model to iterated general-sum games with 2 and 3 players, and study the ensemble effects of game transitions, where the agents' preferences (game payoffs) change over time. This non-stationarity, beyond that caused by reciprocal adaptation, reflects a more naturalistic environment in which agents need to adapt to changing social contexts. Finally, we present a dynamical analysis of key AIF quantities: the variational free energy (VFE) and the expected free energy (EFE) from numerical simulation data. The ensemble-level EFE allows us to characterise the basins of attraction of games with multiple Nash Equilibria under different conditions, and we find that it is not necessarily minimised at the aggregate level. By integrating AIF and game theory, we can gain deeper insights into how intelligent collectives emerge, learn, and optimise their actions in dynamic environments, both cooperative and non-cooperative.

Towards self-attention based visual navigation in the real world

Sep 19, 2022

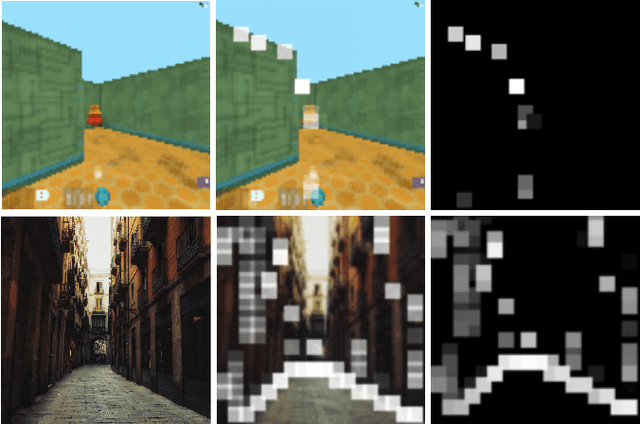

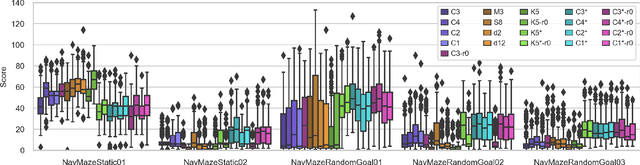

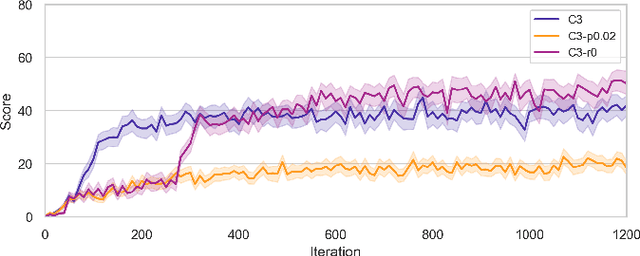

Abstract:Vision guided navigation requires processing complex visual information to inform task-orientated decisions. Applications include autonomous robots, self-driving cars, and assistive vision for humans. A key element is the extraction and selection of relevant features in pixel space upon which to base action choices, for which Machine Learning techniques are well suited. However, Deep Reinforcement Learning agents trained in simulation often exhibit unsatisfactory results when deployed in the real world due to perceptual differences known as the $\textit{reality gap}$. An approach that is yet to be explored to bridge this gap is self-attention. In this paper we (1) perform a systematic exploration of the hyperparameter space for self-attention based navigation of 3D environments and qualitatively appraise behaviour observed from different hyperparameter sets, including their ability to generalise; (2) present strategies to improve the agents' generalisation abilities and navigation behaviour; and (3) show how models trained in simulation are capable of processing real world images meaningfully in real time. To our knowledge, this is the first demonstration of a self-attention based agent successfully trained in navigating a 3D action space, using fewer than 4000 parameters.
