Jaehyeon Lee

MToFNet: Object Anti-Spoofing with Mobile Time-of-Flight Data

Oct 06, 2021

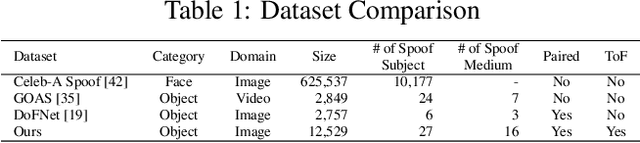

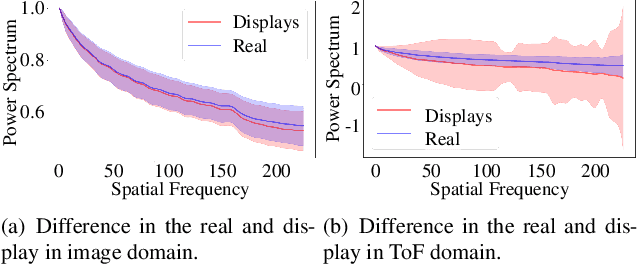

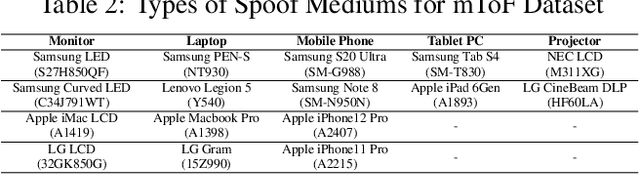

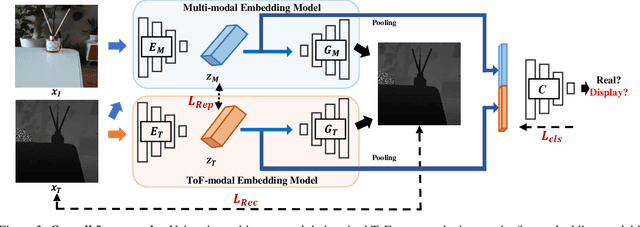

Abstract:In online markets, sellers can maliciously recapture others' images on display screens to utilize as spoof images, which can be challenging to distinguish in human eyes. To prevent such harm, we propose an anti-spoofing method using the paired rgb images and depth maps provided by the mobile camera with a Time-of-Fight sensor. When images are recaptured on display screens, various patterns differing by the screens as known as the moir\'e patterns can be also captured in spoof images. These patterns lead the anti-spoofing model to be overfitted and unable to detect spoof images recaptured on unseen media. To avoid the issue, we build a novel representation model composed of two embedding models, which can be trained without considering the recaptured images. Also, we newly introduce mToF dataset, the largest and most diverse object anti-spoofing dataset, and the first to utilize ToF data. Experimental results confirm that our model achieves robust generalization even across unseen domains.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge