J. Irving Vasquez-Gomez

2D Grid Map Generation for Deep-Learning-based Navigation Approaches

Oct 25, 2021

Abstract: In the last decade, autonomous robot navigation has benefited from deep learning and other machine-learning approaches. These approaches have demonstrated significant advantages in robot performance, but they have the disadvantage of requiring large amounts of data to infer knowledge. In this paper, we present an algorithm for building 2D maps with attributes that make them useful for training and testing machine-learning-based approaches. The maps are based on dungeon-like environments, where several random rooms are built and then connected. In addition, we provide a dataset of 10,000 maps produced by the proposed algorithm, together with a description containing extensive information for algorithm evaluation. This information includes validation of path existence, the best path, and distances, among other attributes. We believe that these maps and their related information can be very useful for robotics enthusiasts and researchers who want to test deep learning approaches. The dataset is available at https://github.com/gbriel21/map2D_dataSet.git
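As a rough illustration of the room-and-corridor idea, the following Python sketch carves random non-overlapping rectangular rooms into a wall-filled grid, joins consecutive room centres with L-shaped corridors, and uses breadth-first search to validate path existence and compute the shortest 4-connected path length. The function names, the grid encoding (1 = wall, 0 = free), the room-size limits, and the corridor shape are assumptions for illustration; this is not the authors' generator and does not reproduce the dataset's format.

```python
import random
from collections import deque


def generate_dungeon_map(width, height, n_rooms, min_size=3, max_size=8, seed=None):
    """Return (grid, centres): a height x width grid (1 = wall, 0 = free) and room centres.

    Hypothetical sketch; assumes width and height are at least max_size + 2.
    """
    rng = random.Random(seed)
    grid = [[1] * width for _ in range(height)]
    rooms = []  # accepted rooms as (x, y, w, h)
    attempts = 0
    while len(rooms) < n_rooms and attempts < 100 * n_rooms:
        attempts += 1
        w, h = rng.randint(min_size, max_size), rng.randint(min_size, max_size)
        # Keep a one-cell wall border around the whole map.
        x, y = rng.randint(1, width - w - 1), rng.randint(1, height - h - 1)
        # Reject candidates that overlap or touch an existing room.
        if any(x <= rx + rw and rx <= x + w and y <= ry + rh and ry <= y + h
               for rx, ry, rw, rh in rooms):
            continue
        for r in range(y, y + h):
            for c in range(x, x + w):
                grid[r][c] = 0
        rooms.append((x, y, w, h))
    # Join consecutive room centres with L-shaped corridors (horizontal, then vertical).
    centres = [(x + w // 2, y + h // 2) for x, y, w, h in rooms]
    for (x1, y1), (x2, y2) in zip(centres, centres[1:]):
        for c in range(min(x1, x2), max(x1, x2) + 1):
            grid[y1][c] = 0
        for r in range(min(y1, y2), max(y1, y2) + 1):
            grid[r][x2] = 0
    return grid, centres


def shortest_path_length(grid, start, goal):
    """4-connected BFS between (x, y) cells; returns the step count or None if unreachable."""
    (sx, sy), (gx, gy) = start, goal
    if grid[sy][sx] or grid[gy][gx]:
        return None
    dist = {(sx, sy): 0}
    queue = deque([(sx, sy)])
    while queue:
        x, y = queue.popleft()
        if (x, y) == (gx, gy):
            return dist[(x, y)]
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if (0 <= ny < len(grid) and 0 <= nx < len(grid[0])
                    and grid[ny][nx] == 0 and (nx, ny) not in dist):
                dist[(nx, ny)] = dist[(x, y)] + 1
                queue.append((nx, ny))
    return None


grid, centres = generate_dungeon_map(40, 30, n_rooms=5, seed=0)
print(len(centres), shortest_path_length(grid, centres[0], centres[-1]))
```

Because every room is chained to the next one, all rooms in this sketch are mutually reachable by construction; BFS then provides the path-existence check and the best-path length that a dataset entry might record.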

Coverage Path Planning for Spraying Drones

May 18, 2021

Abstract: The COVID-19 pandemic is having a devastating effect on the health of the global population. There are several efforts to prevent the spread of the virus; among them, cleaning and disinfecting public areas has become an important task. This kind of coverage task is not restricted to disinfection; it also arises in painting and precision agriculture. Current state-of-the-art planners determine a route, but they either do not consider that the plan will be executed in closed areas or do not model the sprinkler coverage. In this paper, we propose a coverage path planning algorithm for area disinfection that considers both the scene restrictions and the sprinkler coverage. We have tested the method in several simulated scenes. Our experiments show that the method is efficient and covers more area than current methods.
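The sketch below shows, under strong simplifying assumptions, how a sprinkler footprint can enter a coverage plan: a rectangular, obstacle-free area, a circular spray footprint of radius `spray_radius` around the drone's ground track, and a generic back-and-forth (boustrophedon) sweep whose lane spacing is derived from that footprint. It also estimates the covered fraction of the area by sampling points. The function names and the `overlap` parameter are hypothetical; this is a textbook sweep pattern, not the planner proposed in the paper, and it ignores the wall clearance needed in closed areas.

```python
import math


def lawnmower_waypoints(width, height, spray_radius, overlap=0.1):
    """Back-and-forth sweep over [0, width] x [0, height]; lanes run parallel to the y axis."""
    # Adjacent spray swaths overlap by the given fraction of the swath width (2 * radius).
    max_spacing = 2 * spray_radius * (1 - overlap)
    n_lanes = max(1, math.ceil(width / max_spacing))
    lane_gap = width / n_lanes  # <= max_spacing, so no strip between lanes is left unsprayed
    waypoints = []
    for i in range(n_lanes):
        x = (i + 0.5) * lane_gap
        # Alternate direction on each lane to avoid flying back to the start side.
        ys = (0.0, height) if i % 2 == 0 else (height, 0.0)
        waypoints.extend((x, y) for y in ys)
    return waypoints


def point_segment_distance(p, a, b):
    """Euclidean distance from point p to segment ab."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    t = 0.0 if seg_len2 == 0 else max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def coverage_fraction(waypoints, width, height, spray_radius, resolution=0.25):
    """Fraction of sample points lying within spray_radius of the flown polyline."""
    nx, ny = max(1, round(width / resolution)), max(1, round(height / resolution))
    segments = list(zip(waypoints, waypoints[1:]))
    covered = 0
    for i in range(nx):
        for j in range(ny):
            p = ((i + 0.5) * width / nx, (j + 0.5) * height / ny)
            if any(point_segment_distance(p, a, b) <= spray_radius for a, b in segments):
                covered += 1
    return covered / (nx * ny)


path = lawnmower_waypoints(10.0, 6.0, spray_radius=1.0)
print(len(path), coverage_fraction(path, 10.0, 6.0, spray_radius=1.0))
```

Tying the lane spacing to the footprint is what guarantees full coverage here: if the planner ignored the sprinkler radius and used a wider spacing, the coverage estimate would drop below 1.0.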

Custom Distribution for Sampling-Based Motion Planning

Apr 21, 2021

Abstract: Sampling-based algorithms are widely used in robotics because they are very useful in high-dimensional spaces. However, the success rate and the quality of the solutions depend on an adequate selection of their parameters, such as the distance between states, the local planner, and the sampling method. For robots with large configuration spaces or dynamic restrictions, selecting these parameters is a challenging task. This paper proposes a method for improving the results of a set of the most popular sampling-based algorithms, the Rapidly-exploring Random Trees (RRTs), by adjusting the sampling method. The idea is to replace the sampling function, traditionally a Uniform Probability Density Function (U-PDF), with a custom distribution (C-PDF) learned from previously successful queries of a similar task. With few samples, our method builds the custom distribution, allowing a higher success rate and sparser trees in new random queries. We test our method in several common autonomous-driving tasks, such as parking maneuvers and obstacle clearance, and also in complex scenarios, outperforming the original RRT and the biased RRT. In addition, the proposed method requires a relatively small set of examples, unlike current deep learning techniques that require a vast number of examples.
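To make the U-PDF versus C-PDF idea concrete, here is a minimal Python sketch for a 2D point robot, assuming the custom distribution is a simple histogram over the workspace built from the states of previously successful paths and mixed with a uniform component. The `CustomSampler` class, its `bins` and `uniform_weight` parameters, and the compact `rrt` function are hypothetical; the paper's actual C-PDF construction may differ. The RRT checks collisions only at a few points along each new edge, which is a coarse simplification.

```python
import math
import random


class CustomSampler:
    """Histogram C-PDF over a 2D workspace [0, width] x [0, height], mixed with a U-PDF."""

    def __init__(self, successful_paths, width, height, bins=10, uniform_weight=0.2, seed=None):
        self.width, self.height, self.bins = width, height, bins
        self.uniform_weight = uniform_weight
        self.rng = random.Random(seed)
        counts = [0] * (bins * bins)
        for path in successful_paths:  # count previous path states falling in each cell
            for x, y in path:
                i = min(int(x * bins / width), bins - 1)
                j = min(int(y * bins / height), bins - 1)
                counts[j * bins + i] += 1
        self.weights = counts if sum(counts) > 0 else [1] * (bins * bins)

    def sample(self):
        # With probability uniform_weight, fall back to the uniform distribution.
        if self.rng.random() < self.uniform_weight:
            return (self.rng.uniform(0, self.width), self.rng.uniform(0, self.height))
        # Otherwise pick a cell proportionally to its count and sample uniformly inside it.
        cell = self.rng.choices(range(self.bins * self.bins), weights=self.weights)[0]
        i, j = cell % self.bins, cell // self.bins
        cw, ch = self.width / self.bins, self.height / self.bins
        return (self.rng.uniform(i * cw, (i + 1) * cw), self.rng.uniform(j * ch, (j + 1) * ch))


def rrt(start, goal, is_free, sample, step=0.5, goal_tol=0.5, max_iters=5000):
    """Basic RRT driven by any zero-argument sampler; returns (path or None, tree size)."""
    nodes, parent = [start], [None]
    for _ in range(max_iters):
        q = sample()
        k = min(range(len(nodes)), key=lambda n: math.dist(nodes[n], q))
        near, d = nodes[k], math.dist(nodes[k], q)
        if d == 0:
            continue
        s = min(step, d) / d
        new = (near[0] + s * (q[0] - near[0]), near[1] + s * (q[1] - near[1]))
        # Coarse collision check at a few points along the new edge.
        if not all(is_free((near[0] + t * (new[0] - near[0]), near[1] + t * (new[1] - near[1])))
                   for t in (0.25, 0.5, 0.75, 1.0)):
            continue
        nodes.append(new)
        parent.append(k)
        if math.dist(new, goal) <= goal_tol:
            path, n = [], len(nodes) - 1
            while n is not None:
                path.append(nodes[n])
                n = parent[n]
            return path[::-1], len(nodes)
    return None, len(nodes)


def is_free(p):
    x, y = p
    # Hypothetical scene: a wall at 4 <= x <= 6 with a passage above y = 8.
    return 0 <= x <= 10 and 0 <= y <= 10 and not (4 <= x <= 6 and y < 8)


previous = [[(1, 2), (2, 5), (3, 9), (5, 9), (7, 9), (8, 5), (9, 2)]]
sampler = CustomSampler(previous, 10, 10, seed=0)
path, n_nodes = rrt((1.0, 1.0), (9.0, 1.0), is_free, sampler.sample)
print(path is not None, n_nodes)
```

Passing `sampler.sample` instead of a uniform sampler is the only change to the planner. Keeping a small uniform component (`uniform_weight`) preserves a nonzero probability of sampling anywhere in the workspace, which matters when a new query needs regions the previous paths never visited.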

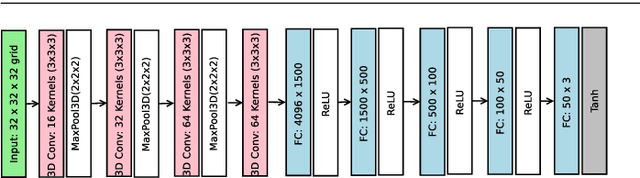

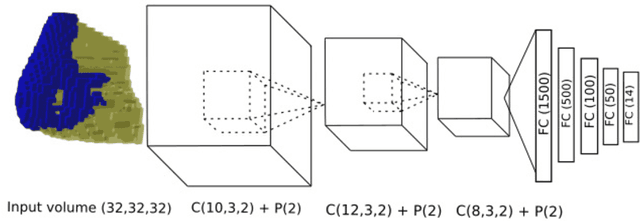

Next-best-view Regression using a 3D Convolutional Neural Network

Jan 23, 2021

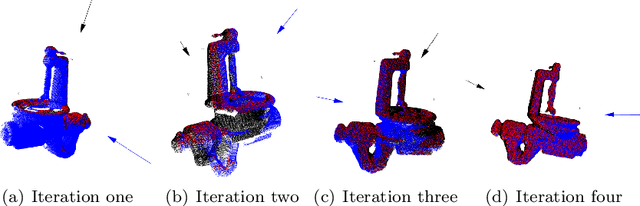

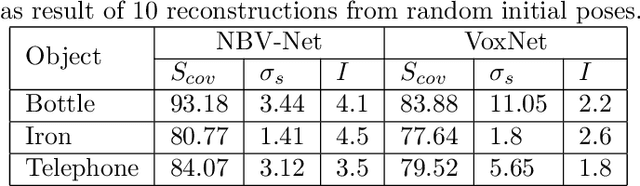

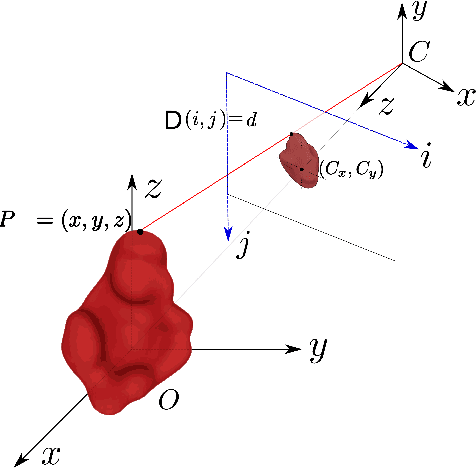

Abstract: Automated three-dimensional (3D) object reconstruction is the task of building a geometric representation of a physical object by sensing its surface. Even though new single-view reconstruction techniques can predict the surface, they lead to incomplete models, especially for uncommon objects such as antique objects or art sculptures. Therefore, to achieve the task's goals, it is essential to automatically determine the locations where the sensor will be placed so that the surface will be completely observed. This problem is known as the next-best-view problem. In this paper, we propose a data-driven approach to address the problem. The proposed approach trains a 3D convolutional neural network (3D CNN) with previous reconstructions in order to regress the position of the next-best-view. To the best of our knowledge, this is one of the first works that directly infers the next-best-view in a continuous space using a data-driven approach for the 3D object reconstruction task. We have validated the proposed approach with two groups of experiments. In the first group, several variants of the proposed architecture are analyzed; predicted next-best-views were observed to be closely positioned to the ground truth. In the second group, the proposed approach is asked to reconstruct several unseen objects, namely, objects not seen by the 3D CNN during either training or validation. Coverage percentages of up to 90% were observed. With respect to current state-of-the-art methods, the proposed approach improves on the performance of previous next-best-view classification approaches, and it is fast at run time (3 frames per second), since it does not compute the expensive ray tracing required by previous information metrics.

* Accepted to Machine Vision and Applications

Supervised Learning of the Next-Best-View for 3D Object Reconstruction

May 14, 2019

Abstract: Motivated by advances in 3D sensing technology and the spread of low-cost robotic platforms, 3D object reconstruction has become a common task in many areas. Nevertheless, selecting the optimal sensor pose that maximizes the reconstructed surface remains an open problem, known in the literature as the next-best-view planning problem. In this paper, we propose a novel next-best-view planning scheme based on supervised deep learning. The scheme contains an algorithm for the automatic generation of datasets and an original three-dimensional convolutional neural network (3D-CNN) used to learn the next-best-view. Unlike previous work, where the problem is addressed as a search, the trained 3D-CNN directly predicts the sensor pose. We compare the proposed network against a similar network, and we present several experiments on the reconstruction of unknown objects that validate the effectiveness of the proposed scheme.
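One plausible way to generate supervised labels automatically, sketched here in Python, is to simulate a reconstruction of a known object: each candidate sensor pose is associated with the set of surface points it would observe, and the label for the current state is the candidate that adds the most unseen points. The point-id representation, the function names, and the greedy stopping rule are assumptions for illustration; the paper's dataset-generation algorithm and the 3D-CNN input encoding are not reproduced here.

```python
def next_best_view_label(observed, visibility):
    """Return (index, gain) of the candidate view that reveals the most unseen surface points.

    observed: set of surface-point ids already captured (hypothetical representation)
    visibility: visibility[k] is the set of point ids visible from candidate view k
    """
    gains = [len(v - observed) for v in visibility]
    best = max(range(len(gains)), key=gains.__getitem__)  # ties go to the lowest index
    return best, gains[best]


def generate_examples(visibility, start_view, max_steps=10):
    """Simulate a greedy reconstruction and record (observed_state, best_view) training pairs."""
    observed = set(visibility[start_view])
    examples = []
    for _ in range(max_steps):
        best, gain = next_best_view_label(observed, visibility)
        if gain == 0:  # no candidate view reveals any new point
            break
        examples.append((frozenset(observed), best))
        observed |= visibility[best]
    return examples


views = [{0, 1, 2}, {2, 3, 4, 5}, {5, 6}, {0, 6, 7}]
for state, label in generate_examples(views, start_view=0):
    print(sorted(state), "->", label)
# prints:
# [0, 1, 2] -> 1
# [0, 1, 2, 3, 4, 5] -> 3
```

A network trained on such pairs replaces the per-step search over all candidates with a single forward pass, which is the contrast the abstract draws with search-based planners.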
