Jörg Becker

No Time Like the Present: Effects of Language Change on Automated Comment Moderation

Jul 08, 2022

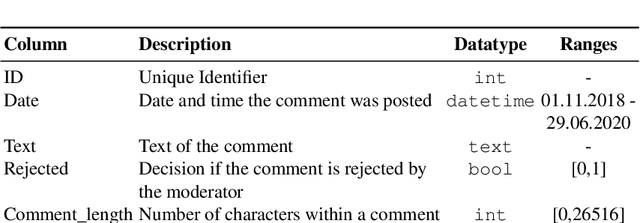

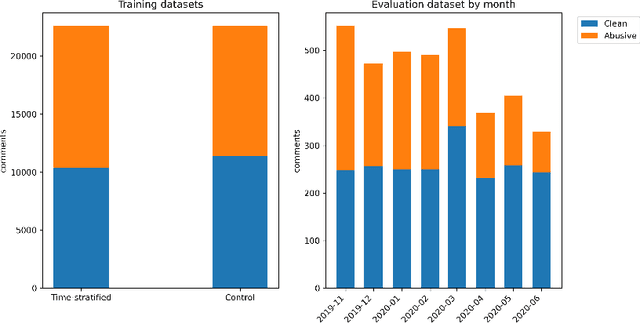

Abstract: The spread of online hate has become a significant problem for newspapers that host comment sections. As a result, there is growing interest in using machine learning and natural language processing for (semi-)automated abusive language detection, to avoid the cost of manual comment moderation or having to shut down comment sections altogether. However, much of the past work on abusive language detection assumes that classifiers operate in a static language environment, even though language and news are in a state of constant flux. Using a new German newspaper comments dataset, we show that classifiers trained with naive ML practices, such as a random train-test split, underperform on future data, and that a time-stratified evaluation split is more appropriate. We also show that classifier performance degrades rapidly when evaluated on data from a different period than the training data. Our findings suggest that the temporal dynamics of language must be considered when developing an abusive language detection system; otherwise, one risks deploying a model that quickly becomes defunct.

* Published in proceedings of the 2022 IEEE 24th Conference on Business Informatics (CBI), Amsterdam, Netherlands. 17 pages, 4 figures
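The difference between the two evaluation protocols can be made concrete. The sketch below is an illustration under assumptions, not the paper's pipeline: comments are hypothetical dicts with a `posted` date and a binary `label`, and "time-stratified" is read here as training on the earliest comments and testing on the most recent ones.

```python
import random
from datetime import date


def random_split(comments, test_frac=0.2, seed=0):
    """Naive split: shuffle first, so test comments may predate training comments."""
    shuffled = list(comments)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_frac))
    return shuffled[:cut], shuffled[cut:]


def temporal_split(comments, test_frac=0.2):
    """Time-based split: train on the oldest comments, test on the newest."""
    ordered = sorted(comments, key=lambda c: c["posted"])
    cut = int(len(ordered) * (1 - test_frac))
    return ordered[:cut], ordered[cut:]


# Hypothetical toy data: one comment per month (label 1 = abusive, 0 = not).
comments = [
    {"posted": date(2018, m, 1), "text": f"comment {m}", "label": m % 2}
    for m in range(1, 11)
]
train, test = temporal_split(comments)
# Every training comment is older than every test comment, mimicking deployment.
print(max(c["posted"] for c in train), "<", min(c["posted"] for c in test))
```

With the random split, the model can see comments from the same weeks (and news topics) it is later tested on, which is one plausible reason such evaluations look more optimistic than performance on genuinely future data.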

XNAP: Making LSTM-based Next Activity Predictions Explainable by Using LRP

Aug 18, 2020

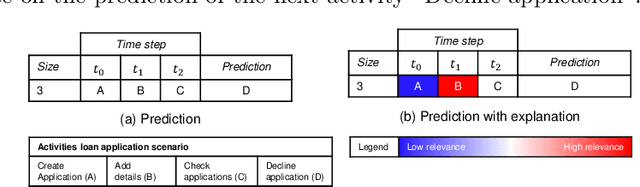

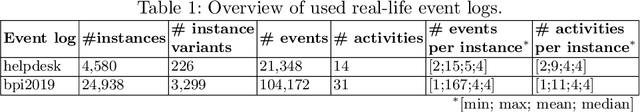

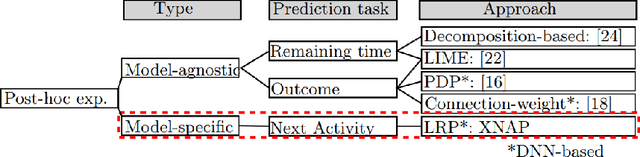

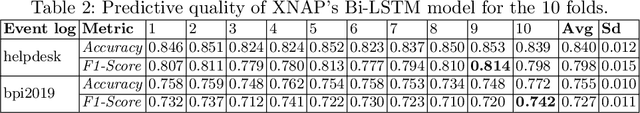

Abstract: Predictive business process monitoring (PBPM) is a class of techniques designed to predict behaviour, such as next activities, in running traces. PBPM techniques aim to improve process performance by providing predictions to process analysts, supporting them in their decision making. However, the limited predictive quality of PBPM techniques has been considered the main obstacle to establishing them in practice. With the use of deep neural networks (DNNs), the predictive quality of these techniques could be improved for tasks such as next activity prediction. While DNNs achieve promising predictive quality, they still lack comprehensibility due to their hierarchical approach to learning representations. Nevertheless, process analysts need to understand the cause of a prediction in order to identify intervention mechanisms that might affect decision making and secure process performance. In this paper, we propose XNAP, the first explainable, DNN-based PBPM technique for next activity prediction. XNAP integrates a layer-wise relevance propagation (LRP) method from the field of explainable artificial intelligence to make the predictions of a long short-term memory (LSTM) DNN explainable by providing relevance values for activities. We show the benefit of our approach on two real-life event logs.
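For intuition about how LRP assigns relevance, the sketch below applies the widely used epsilon rule, R_i = Σ_j a_i w_ij / (z_j + ε·sign(z_j)) · R_j, to a single dense layer z = aW + b in plain Python. It is not XNAP's implementation: LRP through an LSTM also needs a rule for the multiplicative gate connections, which is omitted here, and the function name `lrp_epsilon` and the toy weights are hypothetical.

```python
def lrp_epsilon(a, W, b, R_out, eps=1e-6):
    """Epsilon-rule LRP for one dense layer z_j = sum_i a_i * W[i][j] + b[j].

    a     -- input activations (length n_in)
    W     -- weights as n_in rows, each of length n_out
    b     -- biases (length n_out)
    R_out -- relevance of each output neuron (length n_out)
    Returns the relevance redistributed onto each input.
    """
    n_in, n_out = len(a), len(b)
    # Forward pass: pre-activations of the output neurons.
    z = [sum(a[i] * W[i][j] for i in range(n_in)) + b[j] for j in range(n_out)]
    R_in = [0.0] * n_in
    for j in range(n_out):
        # Stabiliser: push the denominator away from zero, keeping its sign.
        denom = z[j] + (eps if z[j] >= 0 else -eps)
        for i in range(n_in):
            # Input i receives relevance proportional to its contribution a_i * w_ij.
            R_in[i] += a[i] * W[i][j] / denom * R_out[j]
    return R_in


# Hypothetical toy layer: three input features, two output classes.
a = [1.0, 0.5, 2.0]
W = [[0.2, -0.1], [0.4, 0.3], [-0.3, 0.5]]
b = [0.0, 0.0]
# Explain class 1 only: start from its score z_1 and give class 0 no relevance.
z1 = sum(a[i] * W[i][1] for i in range(3)) + b[1]
R = lrp_epsilon(a, W, b, [0.0, z1])
print(R)
```

Because the bias is zero here, the input relevances sum (up to ε) to the explained score z_1, which is the conservation property LRP aims for. In a next-activity setting, the inputs being explained would correspond to the activities of a running trace.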

Cause vs. Effect in Context-Sensitive Prediction of Business Process Instances

Jul 15, 2020

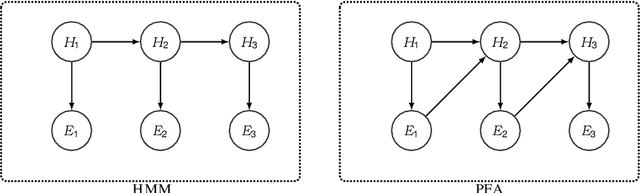

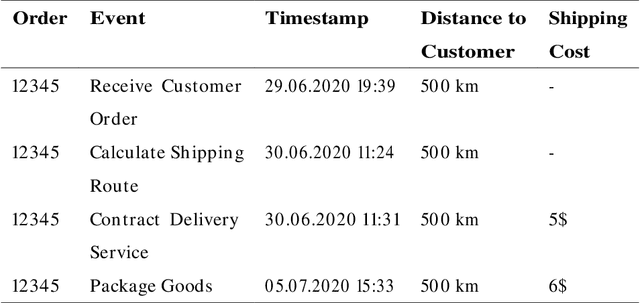

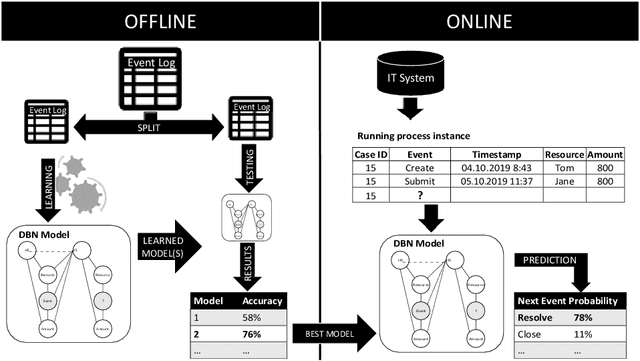

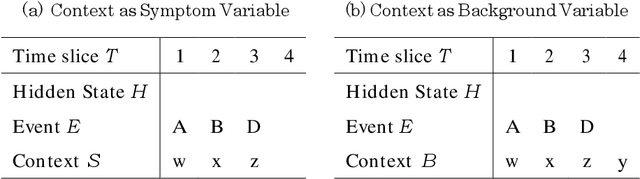

Abstract: Predicting undesirable events during the execution of a business process instance gives process participants an opportunity to intervene and keep the process aligned with its goals. Few approaches to this challenge take a multi-perspective view, in which the flow perspective of the process is combined with its surrounding context. Given the many sources of data in today's world, context can vary widely and have various meanings. This paper addresses the question of whether context is a cause or an effect of the next event, and how this affects next event prediction. We build on previous work on probabilistic models to develop a Dynamic Bayesian Network technique. Probabilistic models are considered comprehensible, and they allow end users and their understanding of the domain to be involved in the prediction. Our technique models context attributes that have either a cause or an effect relationship with the event. We evaluate our technique on two real-life data sets and benchmark it against other techniques from the field of predictive process monitoring. The results show that our solution achieves superior prediction results if context information is correctly introduced into the model.
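The cause/effect distinction can be illustrated with a two-slice fragment of a Bayesian network. This is a simplified sketch, not the paper's Dynamic Bayesian Network: it assumes hypothetical training data given as `(previous_event, context, next_event)` triples, estimates probabilities by counting with Laplace smoothing (`alpha`), and compares treating context as a parent of the next event (cause) with treating it as a child (effect), where Bayes' rule gives P(next | prev, ctx) ∝ P(next | prev) · P(ctx | next).

```python
from collections import Counter


def predict_context_as_cause(triples, prev, ctx, alpha=1.0):
    """Context is a parent of the next event: estimate P(next | prev, ctx) directly."""
    events = {n for _, _, n in triples}
    counts = Counter(n for p, c, n in triples if p == prev and c == ctx)
    total = sum(counts.values())
    return {n: (counts[n] + alpha) / (total + alpha * len(events)) for n in events}


def predict_context_as_effect(triples, prev, ctx, alpha=1.0):
    """Context is a child of the next event: P(next | prev, ctx) ∝ P(next | prev) P(ctx | next)."""
    events = {n for _, _, n in triples}
    contexts = {c for _, c, _ in triples}
    trans = Counter(n for p, _, n in triples if p == prev)
    n_prev = sum(trans.values())
    scores = {}
    for n in events:
        # Transition probability from the previous time slice.
        p_next = (trans[n] + alpha) / (n_prev + alpha * len(events))
        # Probability of observing this context given the candidate next event.
        emit = Counter(c for _, c, m in triples if m == n)
        p_ctx = (emit[ctx] + alpha) / (sum(emit.values()) + alpha * len(contexts))
        scores[n] = p_next * p_ctx
    z = sum(scores.values())
    return {n: s / z for n, s in scores.items()}


# Hypothetical toy log: after a "check", high amounts tend to lead to rejection.
triples = [
    ("check", "high", "reject"),
    ("check", "high", "reject"),
    ("check", "low", "approve"),
    ("check", "low", "approve"),
    ("check", "low", "approve"),
]
print(predict_context_as_cause(triples, "check", "high"))
print(predict_context_as_effect(triples, "check", "high"))
```

On this toy data both structures favour `reject`, but they get there differently: the cause model conditions on the context directly, while the effect model combines the transition probability with how likely the observed context is under each candidate event. Which structure is appropriate depends on the real causal relationship, which is the question the paper addresses.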
