Ismail Balaban

DataBoss A.S

Spatio-temporal Weather Forecasting and Attention Mechanism on Convolutional LSTMs

Feb 01, 2021

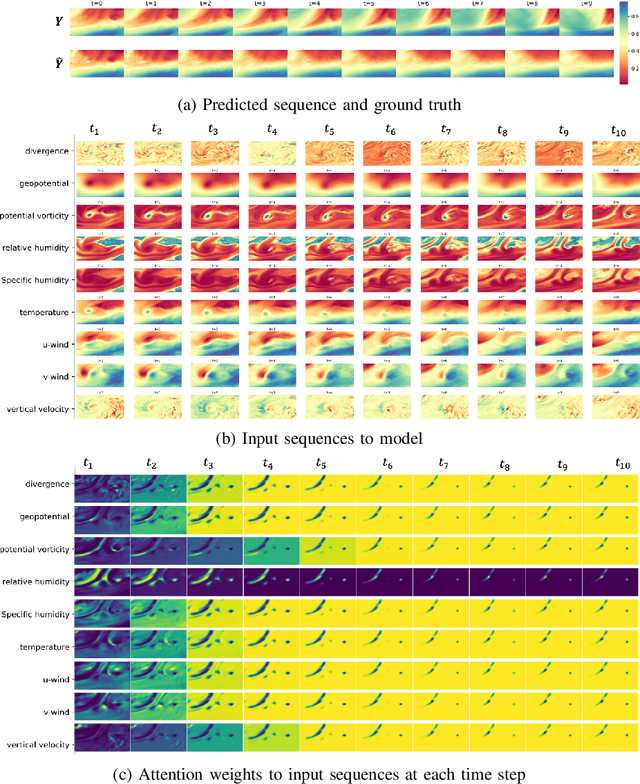

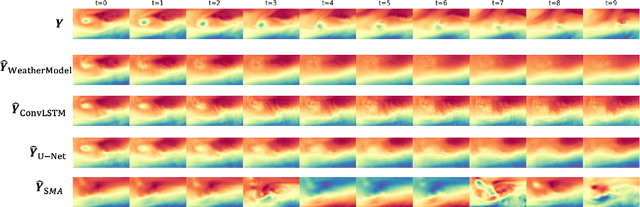

Abstract: Numerical weather forecasting on high-resolution physical models consumes hours of computation on supercomputers. The application of deep learning and machine learning methods to forecasting has revealed new solutions in this area. In this paper, we forecast high-resolution numerical weather data using both input weather data and observations by providing a novel deep learning architecture. We formulate the problem as spatio-temporal prediction. Our model is composed of Convolutional Long Short-Term Memory (ConvLSTM) and Convolutional Neural Network units with an encoder-decoder structure. We enhance the short- and long-term performance and interpretability with an attention mechanism and a context matcher mechanism. We perform experiments on a large-scale, real-life benchmark numerical weather dataset, ERA5 hourly data on pressure levels, and forecast the temperature. The results show significant improvements in capturing both spatial and temporal correlations, with attention matrices focusing on different parts of the input series. Our model obtains the best validation and test scores among the baseline models, including the ConvLSTM forecasting network and U-Net. We provide qualitative and quantitative results and show that our model forecasts 10 time steps at a 3-hour frequency with an average error of 2 degrees. Our code and the data are publicly available.
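
The abstract does not specify the form of the attention mechanism. As a rough illustration only, the sketch below assumes plain dot-product attention over a short series of encoder time steps; the function names `softmax` and `attend`, and the use of flat feature vectors instead of spatial feature maps, are assumptions made for brevity and are not taken from the paper.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Dot-product attention over time steps (illustrative assumption,
    not the paper's exact mechanism).

    query:  decoder feature vector
    keys:   one feature vector per input time step
    values: one feature vector per input time step
    Returns the attention weights and the weighted context vector.
    """
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context
```

The returned weights form one row of an attention matrix: each entry indicates how strongly a given input time step contributes to the forecast at the current output step.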

Markovian RNN: An Adaptive Time Series Prediction Network with HMM-based Switching for Nonstationary Environments

Jun 17, 2020

Abstract: We investigate nonlinear regression for nonstationary sequential data. In most real-life applications, such as business domains including finance, retail, energy and economy, time series data exhibit nonstationarity due to the temporally varying dynamics of the underlying system. We introduce a novel recurrent neural network (RNN) architecture, which adaptively switches between internal regimes in a Markovian way to model the nonstationary nature of the given data. Our model, Markovian RNN, employs a hidden Markov model (HMM) for regime transitions, where each regime controls hidden state transitions of the recurrent cell independently. We jointly optimize the whole network in an end-to-end fashion. We demonstrate significant performance gains compared to vanilla RNN and conventional methods such as Markov Switching ARIMA through an extensive set of experiments with synthetic and real-life datasets. We also interpret the inferred parameters and regime belief values to analyze the underlying dynamics of the given sequences.
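
The regime switching described above can be pictured with a standard HMM filtering step. The sketch below is a minimal illustration under stated assumptions: the regime belief is propagated through a transition matrix, reweighted by a per-regime likelihood, and then used to mix per-regime hidden states. The names `update_belief` and `mix_states`, and the choice of a belief-weighted average for combining regimes, are hypothetical and are not confirmed by the abstract.

```python
def update_belief(belief, transition, likelihoods):
    """One HMM filtering step over K regimes.

    belief:      current regime probabilities, length K
    transition:  K x K matrix, transition[i][j] = P(regime j | regime i)
    likelihoods: how well each regime explains the newest observation
    """
    k = len(belief)
    # Predict: propagate the belief through the Markov transition matrix.
    prior = [sum(belief[i] * transition[i][j] for i in range(k)) for j in range(k)]
    # Correct: reweight by the per-regime likelihood, then normalize.
    unnorm = [prior[j] * likelihoods[j] for j in range(k)]
    total = sum(unnorm)
    return [u / total for u in unnorm]

def mix_states(belief, regime_states):
    """Belief-weighted average of per-regime hidden states (assumed combination rule)."""
    dim = len(regime_states[0])
    return [sum(b * h[d] for b, h in zip(belief, regime_states)) for d in range(dim)]
```

For example, starting from an even belief over two regimes with a symmetric transition matrix, a likelihood of 0.8 versus 0.2 shifts the belief to 0.8 versus 0.2, and the mixed hidden state leans toward the first regime accordingly.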

Unsupervised Online Anomaly Detection On Irregularly Sampled Or Missing Valued Time-Series Data Using LSTM Networks

May 25, 2020

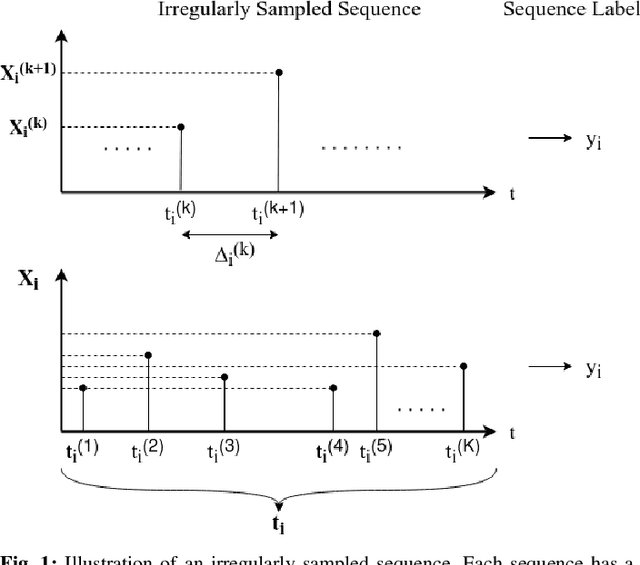

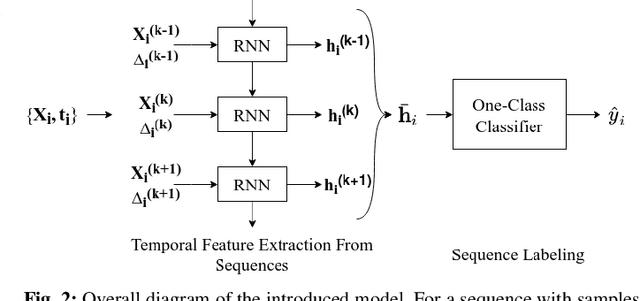

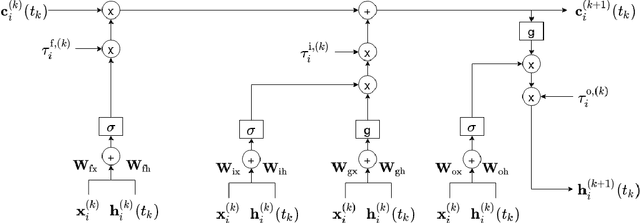

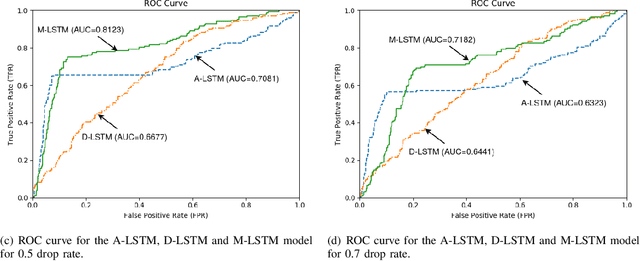

Abstract: We study anomaly detection and introduce an algorithm that processes variable-length, irregularly sampled sequences or sequences with missing values. Our algorithm is fully unsupervised; however, it can be readily extended to supervised or semi-supervised cases when anomaly labels are present, as remarked throughout the paper. Our approach uses Long Short-Term Memory (LSTM) networks to extract temporal features and find the most relevant feature vectors for anomaly detection. We incorporate the sampling time information into our model by modulating the standard LSTM model with time modulation gates. After obtaining the most relevant features from the LSTM, we label the sequences using a Support Vector Data Descriptor (SVDD) model. We introduce a loss function and then jointly optimize the feature extraction and sequence processing mechanisms in an end-to-end manner. Through this joint optimization, the LSTM extracts the most relevant features for anomaly detection, later to be used in the SVDD, and hence completely removes the need for feature selection by expert knowledge. Furthermore, we provide a training algorithm for the online setup, where we optimize our model parameters with individual sequences as new data arrives. Finally, on real-life datasets, we show that our model significantly outperforms the standard approaches thanks to its combination of LSTM with SVDD and joint optimization.
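
The SVDD labeling step can be illustrated with the usual hypersphere decision rule: a feature vector is flagged as anomalous when it falls outside a sphere with a learned center and radius. The sketch below assumes the center and radius are already known and takes the LSTM output as a plain list of floats; the function names `svdd_score` and `is_anomaly` are hypothetical, and the paper's joint training of the LSTM and SVDD parameters is not shown.

```python
def svdd_score(feature, center, radius):
    """Squared distance to the sphere center minus the squared radius.

    Positive scores lie outside the sphere, negative scores inside.
    """
    dist_sq = sum((f - c) ** 2 for f, c in zip(feature, center))
    return dist_sq - radius ** 2

def is_anomaly(feature, center, radius):
    """Label a sequence-level feature vector as anomalous if it lies outside the sphere."""
    return svdd_score(feature, center, radius) > 0
```

In the setup the abstract describes, `feature` would come from the LSTM, and the center, radius, and LSTM weights would be optimized together rather than fixed in advance as they are here.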
