Isaac Osei Agyemang

Structural damage detection via hierarchical damage information with volumetric assessment

Jul 29, 2024

Abstract: Complex image environments and noisy labels hinder deep learning-based inference models in structural damage detection, and once damage has been detected, its assessment still relies on manual inspection. Guided-DetNet, characterized by a Generative Attention Module (GAM), a Hierarchical Elimination Algorithm (HEA), and Volumetric Contour Visual Assessment (VCVA), is proposed to mitigate complex image environments, noisy labeling, and post-detection manual assessment of structural damage. GAM leverages cross-horizontal and cross-vertical patch merging and cross foreground-background feature fusion to generate varied features that mitigate complex image environments. HEA addresses noisy labeling by using hierarchical relationships among classes to refine the instances in a given image, eliminating unlikely class categories. VCVA assesses the severity of detected damage via volumetric representation and quantification, leveraging the Dirac delta distribution. A comprehensive quantitative study, two robustness tests, and an application scenario based on the PEER Hub Image-Net dataset substantiate Guided-DetNet's promising performance. Guided-DetNet outperformed the best of the compared models by at least 3% in a triple classification task and by at least 2% in a dual detection task across varying metrics.
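The abstract does not spell out how HEA works, so the following is only a minimal sketch of the general idea of eliminating unlikely classes through a class hierarchy. The hierarchy, the class names, the `eliminate_unlikely` function, and the 0.5 threshold are all hypothetical and are not taken from the paper.

```python
from typing import Dict, List

# Hypothetical parent -> children hierarchy (illustrative only, not from the paper).
HIERARCHY: Dict[str, List[str]] = {
    "damaged": ["spalling", "crack"],
    "undamaged": [],
}


def eliminate_unlikely(
    scores: Dict[str, float],
    hierarchy: Dict[str, List[str]],
    threshold: float = 0.5,  # assumed cut-off, chosen only for the example
) -> Dict[str, float]:
    """Drop child classes whose parent class scores below the threshold."""
    kept = dict(scores)
    for parent, children in hierarchy.items():
        if scores.get(parent, 0.0) < threshold:
            for child in children:
                kept.pop(child, None)
    return kept


# A noisy prediction: "spalling" scores high although "damaged" is unlikely.
scores = {"damaged": 0.2, "undamaged": 0.8, "spalling": 0.6, "crack": 0.1}
kept = eliminate_unlikely(scores, HIERARCHY)

# When the parent is likely, its children are left untouched.
likely = {"damaged": 0.9, "undamaged": 0.1, "spalling": 0.6, "crack": 0.1}
kept_likely = eliminate_unlikely(likely, HIERARCHY)
```

In this sketch the child label "spalling" is discarded in the first case because its parent "damaged" falls below the threshold, which is one simple way a hierarchy can filter out inconsistent labels.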

Gesture Control of Micro-drone: A Lightweight-Net with Domain Randomization and Trajectory Generators

Jan 29, 2023

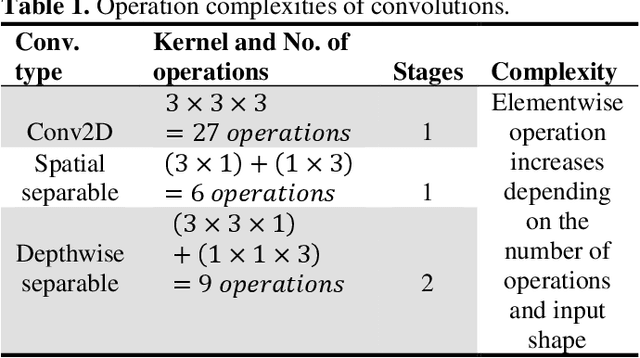

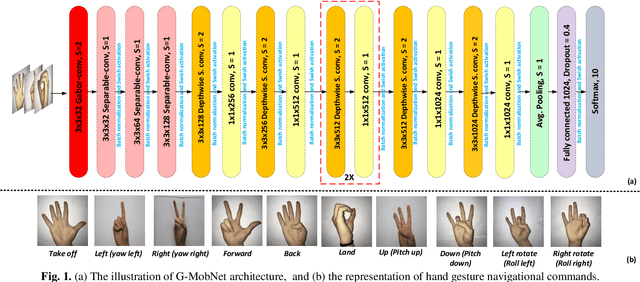

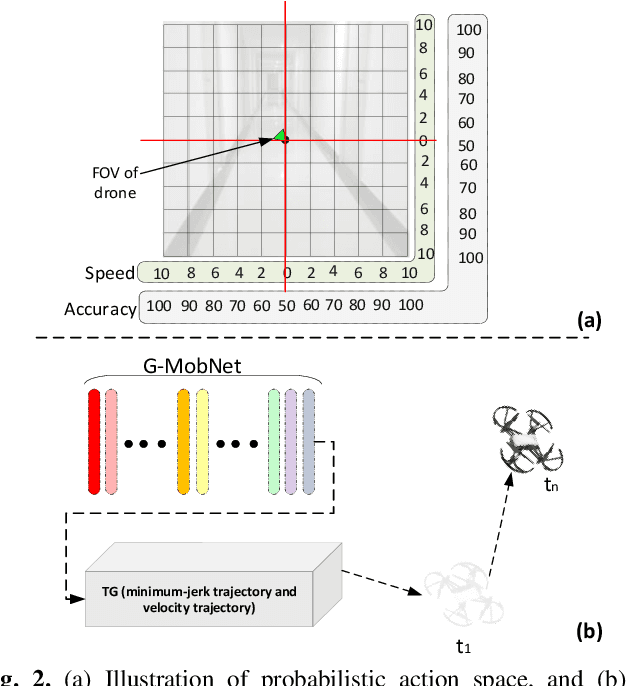

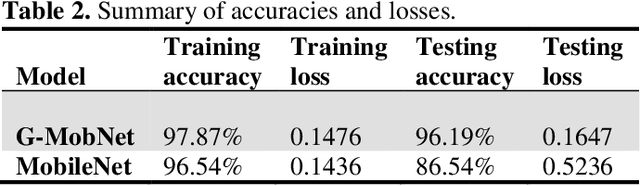

Abstract: Micro-drones can be integrated into various industrial applications but are constrained by their limited computing power and, as a secondary challenge, by the need for expert pilots. This study presents a computationally efficient deep convolutional neural network that uses Gabor filters and spatially separable convolutions with low computational complexity. An attention module is integrated into the model to complement its performance. Further, a perception-based action space and trajectory generators are integrated with the model's predictions for intuitive navigation. The model aids a human operator in controlling a micro-drone via gestures. Nearly 18% of computational resources are conserved during training, as measured with the NVIDIA GPU profiler. In experimental verification on a low-cost DJI Tello drone, the model shows promising results compared with a state-of-the-art technique and a conventional computer vision-based technique.
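To illustrate why spatially separable convolutions are cheap, the sketch below factors a 3×3 kernel into a 3×1 and a 1×3 pass and checks that the result matches the full 2D operation. It is a generic demonstration in plain Python, not the paper's network: the kernel values, the toy image, and the helper `correlate2d_valid` are assumptions made for the example, and the factorization is exact only for kernels that can be written as an outer product of two vectors.

```python
from typing import List

Matrix = List[List[float]]


def correlate2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image without padding ('valid' mode)."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(kh)
                for b in range(kw)
            )
            for j in range(ow)
        ]
        for i in range(oh)
    ]


# Assumed example kernel: the outer product of a column and a row vector.
col = [1.0, 2.0, 1.0]
row = [-1.0, 0.0, 1.0]
full_kernel = [[c * r for r in row] for c in col]

# Small deterministic toy image.
image = [[float((3 * i + 5 * j) % 7) for j in range(6)] for i in range(6)]

# One 3x3 pass versus a 3x1 pass followed by a 1x3 pass.
direct = correlate2d_valid(image, full_kernel)
vertical = correlate2d_valid(image, [[c] for c in col])
separable = correlate2d_valid(vertical, [row])

max_diff = max(
    abs(d - s)
    for d_row, s_row in zip(direct, separable)
    for d, s in zip(d_row, s_row)
)
weights_full = len(col) * len(row)       # k * k weights per kernel
weights_separable = len(col) + len(row)  # 2 * k weights per kernel
```

For a k×k kernel the separable form needs 2k weights and multiplications per output value instead of k², so the saving grows with kernel size.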
