Ioana Marinescu

Teasing Apart Architecture and Initial Weights as Sources of Inductive Bias in Neural Networks

Feb 27, 2025

Abstract: Artificial neural networks can acquire many aspects of human knowledge from data, making them promising as models of human learning. But what those networks can learn depends upon their inductive biases -- the factors other than the data that influence the solutions they discover -- and the inductive biases of neural networks remain poorly understood, limiting our ability to draw conclusions about human learning from the performance of these systems. Cognitive scientists and machine learning researchers often focus on the architecture of a neural network as a source of inductive bias. In this paper we explore the impact of another source of inductive bias -- the initial weights of the network -- using meta-learning as a tool for finding initial weights that are adapted for specific problems. We evaluate four widely used architectures -- MLPs, CNNs, LSTMs, and Transformers -- by meta-training 430 different models across three tasks requiring different biases and forms of generalization. We find that meta-learning can substantially reduce or entirely eliminate performance differences across architectures and data representations, suggesting that these factors may be less important as sources of inductive bias than is typically assumed. When differences are present, architectures and data representations that perform well without meta-learning tend to meta-train more effectively. Moreover, all architectures generalize poorly on problems that are far from their meta-training experience, underscoring the need for stronger inductive biases for robust generalization.

Human and Automatic Interpretation of Romanian Noun Compounds

Mar 11, 2024

Abstract: Determining the intended, context-dependent meanings of noun compounds like "shoe sale" and "fire sale" remains a challenge for NLP. Previous work has relied on inventories of semantic relations that capture the different meanings between compound members. Focusing on Romanian compounds, whose morphosyntax differs from that of their English counterparts, we propose a new set of relations and test it with human annotators and a neural net classifier. Results show an alignment of the network's predictions and human judgments, even where the human agreement rate is low. Agreement tracks with the frequency of the selected relations, regardless of structural differences. However, the most frequently selected relation was none of the sixteen labeled semantic relations, indicating the need for a better relation inventory.

Distilling Symbolic Priors for Concept Learning into Neural Networks

Feb 10, 2024

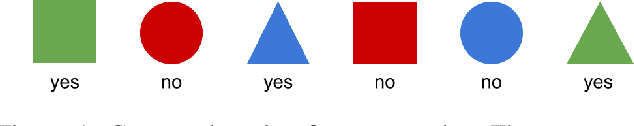

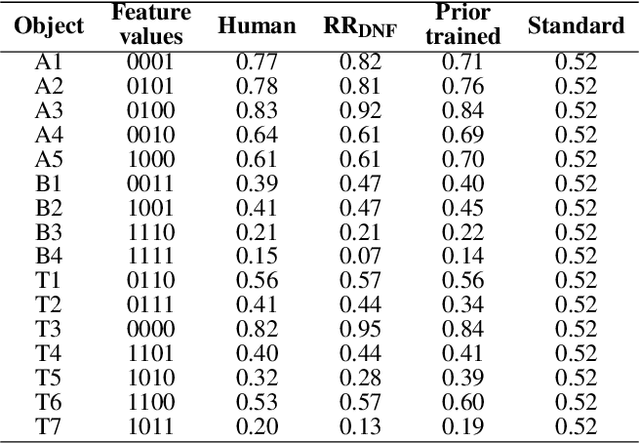

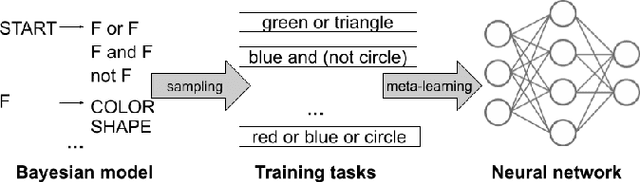

Abstract: Humans can learn new concepts from a small number of examples by drawing on their inductive biases. These inductive biases have previously been captured by using Bayesian models defined over symbolic hypothesis spaces. Is it possible to create a neural network that displays the same inductive biases? We show that inductive biases that enable rapid concept learning can be instantiated in artificial neural networks by distilling a prior distribution from a symbolic Bayesian model via meta-learning, an approach for extracting the common structure from a set of tasks. By generating the set of tasks used in meta-learning from the prior distribution of a Bayesian model, we are able to transfer that prior into a neural network. We use this approach to create a neural network with an inductive bias towards concepts expressed as short logical formulas. Analyzing results from previous behavioral experiments in which people learned logical concepts from a few examples, we find that our meta-trained models are highly aligned with human performance.

GFN-SR: Symbolic Regression with Generative Flow Networks

Dec 01, 2023

Abstract: Symbolic regression (SR) is an area of interpretable machine learning that aims to identify mathematical expressions, often composed of simple functions, that best fit a given set of covariates $X$ and response $y$. In recent years, deep symbolic regression (DSR) has emerged as a popular method in the field by leveraging deep reinforcement learning to solve the complicated combinatorial search problem. In this work, we propose an alternative framework (GFN-SR) to approach SR with deep learning. We model the construction of an expression tree as traversing through a directed acyclic graph (DAG) so that GFlowNet can learn a stochastic policy to generate such trees sequentially. Enhanced with an adaptive reward baseline, our method is capable of generating a diverse set of best-fitting expressions. Notably, we observe that GFN-SR outperforms other SR algorithms in noisy data regimes, owing to its ability to learn a distribution of rewards over a space of candidate solutions.
