Ingyun Lee

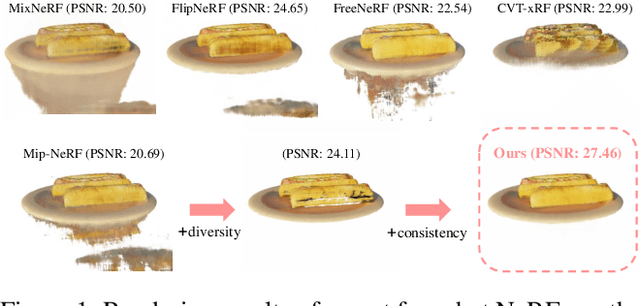

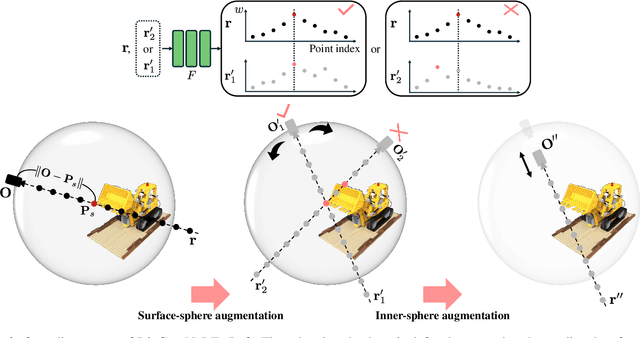

DivCon-NeRF: Generating Augmented Rays with Diversity and Consistency for Few-shot View Synthesis

Mar 17, 2025

Abstract: Neural Radiance Field (NeRF) has shown remarkable performance in novel view synthesis but requires many multiview images, making it impractical for few-shot scenarios. Ray augmentation was proposed to prevent overfitting on sparse training data by generating additional rays. However, existing methods generate augmented rays only near the original rays. They produce severe floaters and appearance distortion because the viewpoints are limited and because rays obstructed by nearby obstacles and complex surfaces are inconsistent. To address these problems, we propose DivCon-NeRF, which significantly enhances both diversity and consistency. It employs surface-sphere augmentation, which preserves the distance between the original camera and the predicted surface point. This allows the model to compare the order of high-probability surface points and filter out inconsistent rays easily without requiring the exact depth. By introducing inner-sphere augmentation, DivCon-NeRF randomizes angles and distances for diverse viewpoints, further increasing diversity. Consequently, our method significantly reduces floaters and visual distortions, achieving state-of-the-art performance on the Blender, LLFF, and DTU datasets. Our code will be publicly available.
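The geometric idea behind surface-sphere augmentation can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `surface_sphere_ray`, the `noise` parameter, and the Gaussian perturbation used to pick a new viewpoint are all assumptions made for illustration. The only property taken from the abstract is that the augmented camera position keeps the same distance to the predicted surface point as the original camera.

```python
import numpy as np

def surface_sphere_ray(origin, direction, depth, rng, noise=0.3):
    """Hypothetical helper: sample an augmented ray whose origin lies on the
    sphere centred at the predicted surface point, with radius `depth`."""
    d = direction / np.linalg.norm(direction)
    # Predicted surface point along the original ray.
    surface_point = origin + depth * d
    # Perturb the surface-to-camera direction (assumed sampling scheme).
    back = -d + noise * rng.standard_normal(3)
    back /= np.linalg.norm(back)
    # New origin stays at distance `depth` from the surface point.
    new_origin = surface_point + depth * back
    # The augmented ray points from the new origin back at the surface point.
    new_direction = -back
    return new_origin, new_direction, surface_point

rng = np.random.default_rng(0)
new_o, new_d, point = surface_sphere_ray(
    np.zeros(3), np.array([0.0, 0.0, 1.0]), depth=3.0, rng=rng
)
```

Because the radius equals the predicted depth, the augmented ray reaches the same surface point at the same distance, which is what lets a consistency check work on relative ordering rather than exact depth.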

Domain Generalization with Vital Phase Augmentation

Jan 08, 2024

Abstract: Deep neural networks have shown remarkable performance in image classification. However, their performance significantly deteriorates with corrupted input data. Domain generalization methods have been proposed to train models that are robust to out-of-distribution data. Data augmentation in the frequency domain is one such approach; it enables a model to learn phase features and thereby establish domain-invariant representations. This approach changes the amplitudes of the input data while preserving the phases. However, using fixed phases leaves the model susceptible to phase fluctuations, because both amplitude and phase fluctuations commonly occur in out-of-distribution data. In this study, to address this problem, we introduce an approach that applies a finite variation to the phases of the input data rather than keeping them fixed. Based on the assumption that the degree of domain-invariant features varies for each phase, we propose a method to distinguish phases based on this degree. In addition, we propose a method called vital phase augmentation (VIPAug) that applies the variation to the phases differently according to the degree of domain-invariant features of the given phases. The model depends more on the vital phases, which contain more domain-invariant features, to attain robustness to amplitude and phase fluctuations. We present experimental evaluations of our proposed approach, which exhibited improved performance for both clean and corrupted data. VIPAug achieved SOTA performance on the benchmark CIFAR-10 and CIFAR-100 datasets, as well as near-SOTA performance on the ImageNet-100 and ImageNet datasets. Our code is available at https://github.com/excitedkid/vipaug.
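The baseline that the abstract contrasts against, changing amplitudes while preserving phases, can be sketched as follows. This is an illustrative example only, not VIPAug and not code from the linked repository: the function name `mix_amplitude`, the `alpha` parameter, and the choice to mix in the amplitude spectrum of a second image are assumptions. It expects 2D grayscale arrays of equal shape.

```python
import numpy as np

def mix_amplitude(image, other, alpha=0.5):
    """Hypothetical helper: blend the amplitude spectrum of `image` with that
    of `other` while keeping the phase spectrum of `image` unchanged."""
    spec_a = np.fft.fft2(image)
    spec_b = np.fft.fft2(other)
    # Linear blend of the two amplitude spectra (assumed mixing rule).
    amplitude = (1.0 - alpha) * np.abs(spec_a) + alpha * np.abs(spec_b)
    # Phase is taken from the original image only.
    phase = np.angle(spec_a)
    mixed = amplitude * np.exp(1j * phase)
    # Both inputs are real, so the mixed spectrum stays conjugate-symmetric
    # and the imaginary part of the inverse is only rounding error.
    return np.fft.ifft2(mixed).real

rng = np.random.default_rng(0)
a = rng.random((8, 8))
b = rng.random((8, 8))
augmented = mix_amplitude(a, b, alpha=0.5)
```

With `alpha=0.0` the function returns the original image. A phase-varying scheme such as the one the abstract proposes would additionally perturb `phase`, which this sketch deliberately leaves fixed.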
