Igor Mineyev

Learning from One and Only One Shot

Jan 14, 2022

Abstract: Humans can generalize from only a few examples and from little pre-training on similar tasks. Yet, machine learning (ML) typically requires large data to learn or pre-learn to transfer. Inspired by nativism, we directly model basic human-innate priors in abstract visual tasks, e.g., character/doodle recognition. This yields a white-box model that learns general-appearance similarity (how any two images look in general) by mimicking how humans naturally "distort" an object at first sight. Using simply the nearest-neighbor classifier on this similarity space, we achieve human-level character recognition using only 1-10 examples per class and nothing else (no pre-training). This differs from few-shot learning (FSL) using significant pre-training. On standard benchmarks MNIST/EMNIST and the Omniglot challenge, we outperform both neural-network-based and classical ML in the "tiny-data" regime, including FSL pre-trained on large data. Our model enables unsupervised learning too: by learning the non-Euclidean, general-appearance similarity space in a k-means style, we can generate human-intuitive archetypes as cluster "centroids".
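The classification step mentioned in the abstract, a nearest-neighbor classifier over a learned similarity space, can be illustrated with a minimal sketch. This is not the authors' model: the function names `nearest_neighbor_predict` and `pixel_mismatch` are hypothetical, and the pixel-mismatch count is only a stand-in for the general-appearance similarity the paper learns. The sketch shows only how a single labeled example per class can drive a prediction once some dissimilarity function is available.

```python
from typing import Callable, List, Sequence

Image = List[List[int]]  # a small binary image, stored as rows of 0/1 pixels


def pixel_mismatch(a: Image, b: Image) -> int:
    """Placeholder dissimilarity (an assumption, not the paper's measure):
    the number of pixel positions where two equal-size images differ."""
    return sum(pa != pb for row_a, row_b in zip(a, b) for pa, pb in zip(row_a, row_b))


def nearest_neighbor_predict(
    query: Image,
    examples: Sequence[Image],
    labels: Sequence[str],
    dissimilarity: Callable[[Image, Image], float],
) -> str:
    """Return the label of the stored example that is least dissimilar to the query."""
    if not examples or len(examples) != len(labels):
        raise ValueError("need one label per example and at least one example")
    best = min(range(len(examples)), key=lambda i: dissimilarity(query, examples[i]))
    return labels[best]


# One example per class ("one shot"): a vertical and a horizontal stroke.
vertical = [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
horizontal = [[0, 0, 0], [1, 1, 1], [0, 0, 0]]

# A query that looks like a vertical stroke with its bottom pixel missing.
query = [[0, 1, 0], [0, 1, 0], [0, 0, 0]]

prediction = nearest_neighbor_predict(
    query, [vertical, horizontal], ["vertical", "horizontal"], pixel_mismatch
)
print(prediction)
```

Swapping `pixel_mismatch` for a richer dissimilarity changes the behavior without touching the classifier, which is the design point the abstract relies on: all of the modeling effort sits in the similarity function.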

A Group-Theoretic Approach to Abstraction: Hierarchical, Interpretable, and Task-Free Clustering

Jul 30, 2018

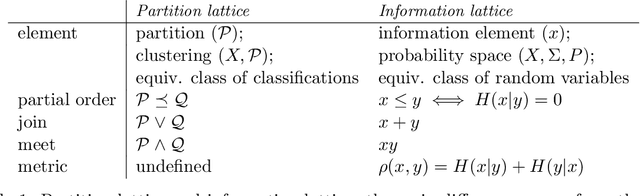

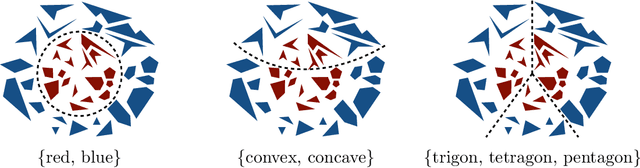

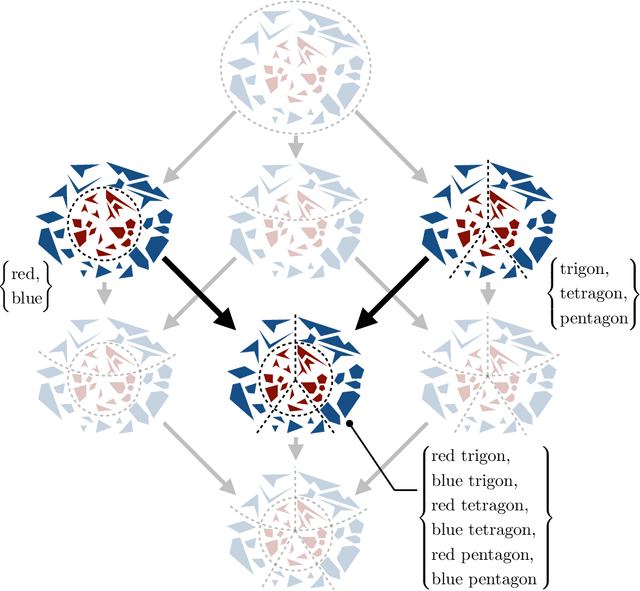

Abstract: Abstraction plays a key role in concept learning and knowledge discovery. While pervasive in both human and artificial intelligence, it remains mysterious how concepts are abstracted in the first place. We study the nature of abstraction through a group-theoretic approach, formalizing it as a hierarchical, interpretable, and task-free clustering problem. This clustering framework is data-free, feature-free, similarity-free, and globally hierarchical: the four key features that distinguish it from common clustering models. Beyond a theoretical foundation for abstraction, we also present a top-down and a bottom-up approach to establish an algorithmic foundation for practical abstraction-generating methods. Lastly, using both a theoretical explanation and a real-world application, we show that the coupling of our abstraction framework with statistics realizes Shannon's information lattice and, further, brings learning into the picture. This gives a first step towards a principled and cognitive way of automatic concept learning and knowledge discovery.
