Igor Cvišić

Recalibrating the KITTI Dataset Camera Setup for Improved Odometry Accuracy

Sep 08, 2021

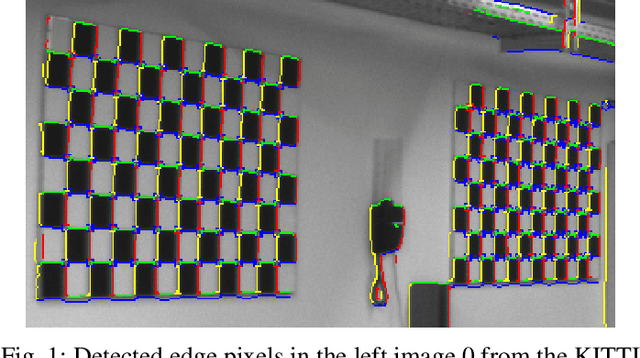

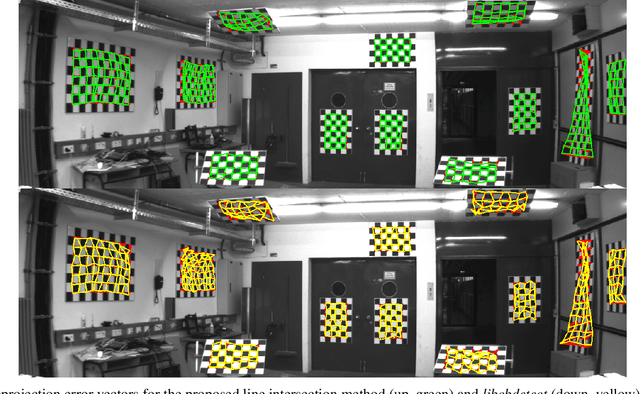

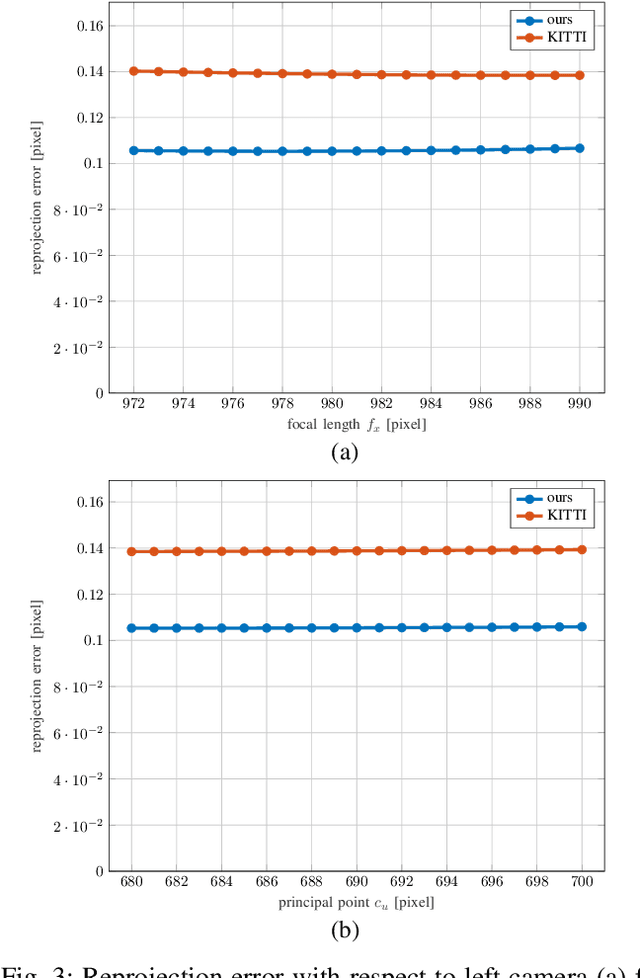

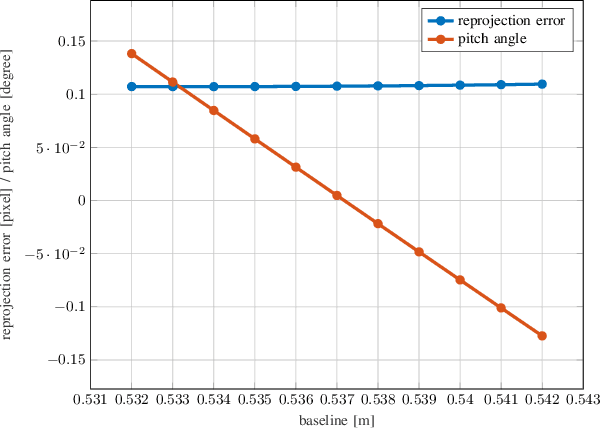

Abstract: Over the last decade, the KITTI dataset has been one of the most relevant public datasets for evaluating odometry accuracy. Besides its quality and rich sensor setup, its success is also due to the online evaluation tool, which enables researchers to benchmark and compare algorithms. Results are evaluated solely on the test subset, with no access to the ground truth, yielding an unbiased, overfitting-free and therefore relevant validation for robot localization based on cameras, 3D laser, or a combination of both. However, like any sensor setup, it requires prior calibration, and the stereo images are provided already rectified, which introduces a dependence on the default calibration parameters. This raises a natural question: can a better set of calibration parameters be found that would yield higher odometry accuracy? In this paper, we propose a new approach for one-shot calibration of the KITTI dataset's multiple-camera setup. The approach yields better calibration parameters, in the sense of both lower calibration reprojection error and lower visual odometry error. We conducted experiments showing, for three different odometry algorithms, namely SOFT2, ORB-SLAM2 and VISO2, that odometry accuracy is significantly improved with the proposed calibration parameters. Moreover, our odometry, SOFT2, in conjunction with the proposed calibration method, achieved the highest accuracy on the official KITTI scoreboard with 0.53% translational and 0.0009 deg/m rotational error, outperforming even 3D laser-based methods.

Feature-based Event Stereo Visual Odometry

Jul 10, 2021

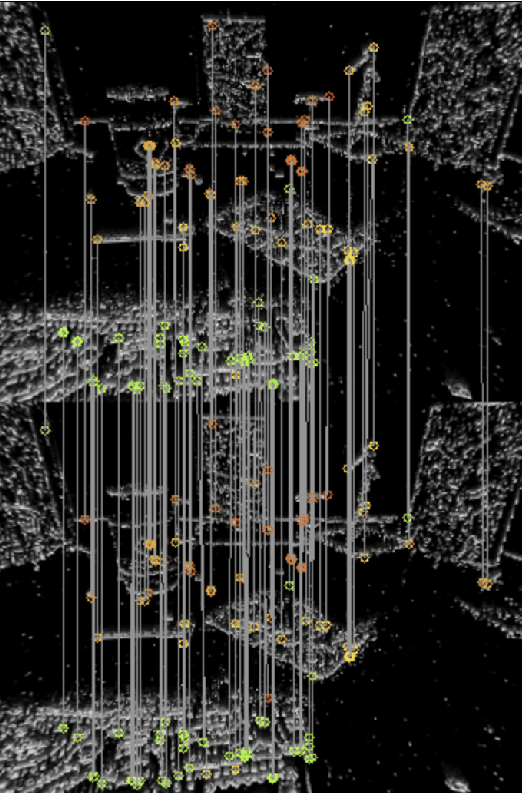

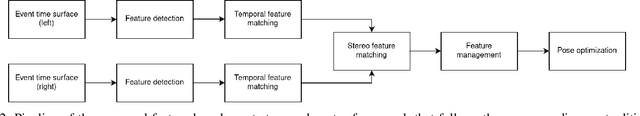

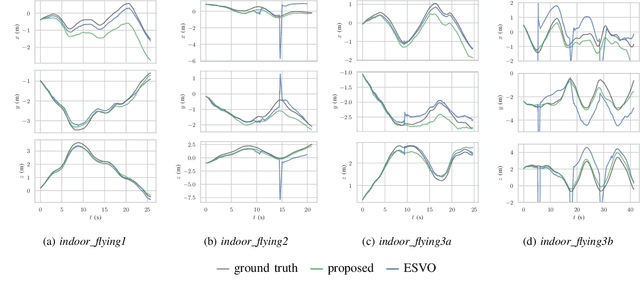

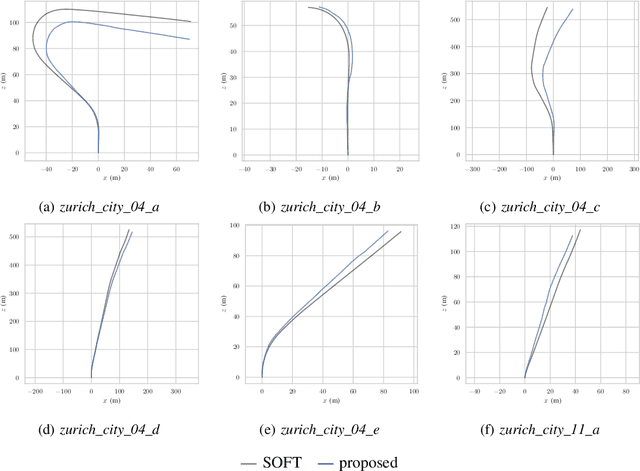

Abstract: Event-based cameras are biologically inspired sensors that output events, i.e., asynchronous pixel-wise brightness changes in the scene. Their high dynamic range and microsecond temporal resolution make them more reliable than standard cameras in environments with challenging illumination and in high-speed scenarios. Developing odometry algorithms based solely on event cameras therefore offers exciting new possibilities for autonomous systems and robots. In this paper, we propose a novel stereo visual odometry method for event cameras based on feature detection and matching with careful feature management, while pose estimation is done by reprojection error minimization. We evaluate the performance of the proposed method on two publicly available datasets: MVSEC sequences captured by an indoor flying drone and DSEC outdoor driving sequences. MVSEC offers accurate ground truth from motion capture. DSEC does not offer ground truth, so to obtain a reference trajectory on the standard camera frames we used our SOFT visual odometry, one of the highest-ranking algorithms on the KITTI scoreboards. We compared our method to ESVO, which is the first and still the only stereo event odometry method. Our method showed on-par performance on the MVSEC sequences, while on the DSEC dataset ESVO, unlike our method, was unable to handle the outdoor driving scenario with default parameters. Furthermore, our method has two important advantages over ESVO: it adapts the tracking frequency to the asynchronous event rate, and it does not require initialization.
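As a rough illustration of the quantity minimized in pose estimation, the sketch below computes a reprojection error for a pinhole camera. It is not the authors' implementation: the function names `project` and `reprojection_rmse`, the pinhole model without distortion, and the RMS aggregation are all assumptions made for the example.

```python
import numpy as np

def project(K, R, t, X):
    """Project Nx3 world points X into pixels for a camera with pose (R, t).

    Assumes an ideal pinhole camera (no lens distortion) with intrinsics K.
    """
    Xc = X @ R.T + t                 # world frame -> camera frame
    uv = Xc @ K.T                    # apply intrinsics (homogeneous pixels)
    return uv[:, :2] / uv[:, 2:3]    # perspective division

def reprojection_rmse(K, R, t, X, obs):
    """Root-mean-square pixel distance between projected and observed points."""
    residuals = project(K, R, t, X) - obs
    return np.sqrt(np.mean(np.sum(residuals ** 2, axis=1)))

# Hypothetical intrinsics and two 3D points at 1 m depth.
K = np.array([[100.0, 0.0, 50.0],
              [0.0, 100.0, 50.0],
              [0.0, 0.0, 1.0]])
X = np.array([[0.0, 0.0, 1.0],
              [0.1, 0.0, 1.0]])
obs = project(K, np.eye(3), np.zeros(3), X)   # observations at the true pose

# A pose that is off by 1 cm along x shifts every point by one pixel here.
err = reprojection_rmse(K, np.eye(3), np.array([0.01, 0.0, 0.0]), X, obs)
```

A pose estimator would search over `R` and `t` (for example with Gauss-Newton or a robust nonlinear least-squares solver) for the values that make this error smallest over all matched features.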

Dense Disparity Estimation in Ego-motion Reduced Search Space

Aug 21, 2017

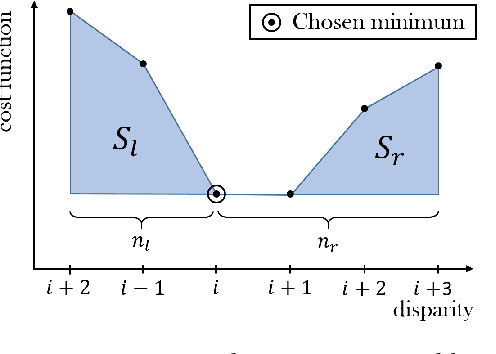

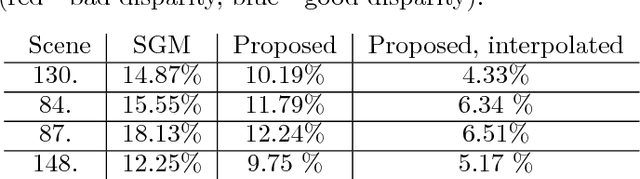

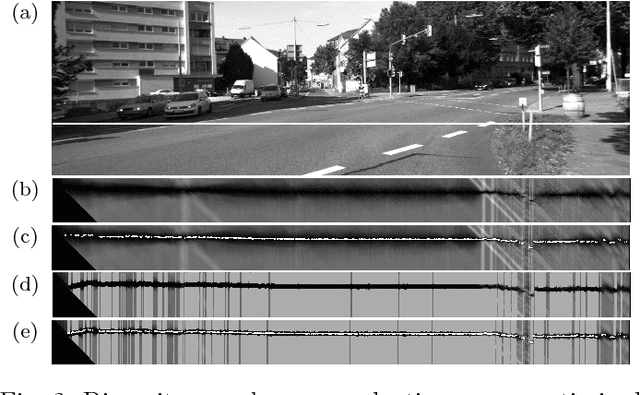

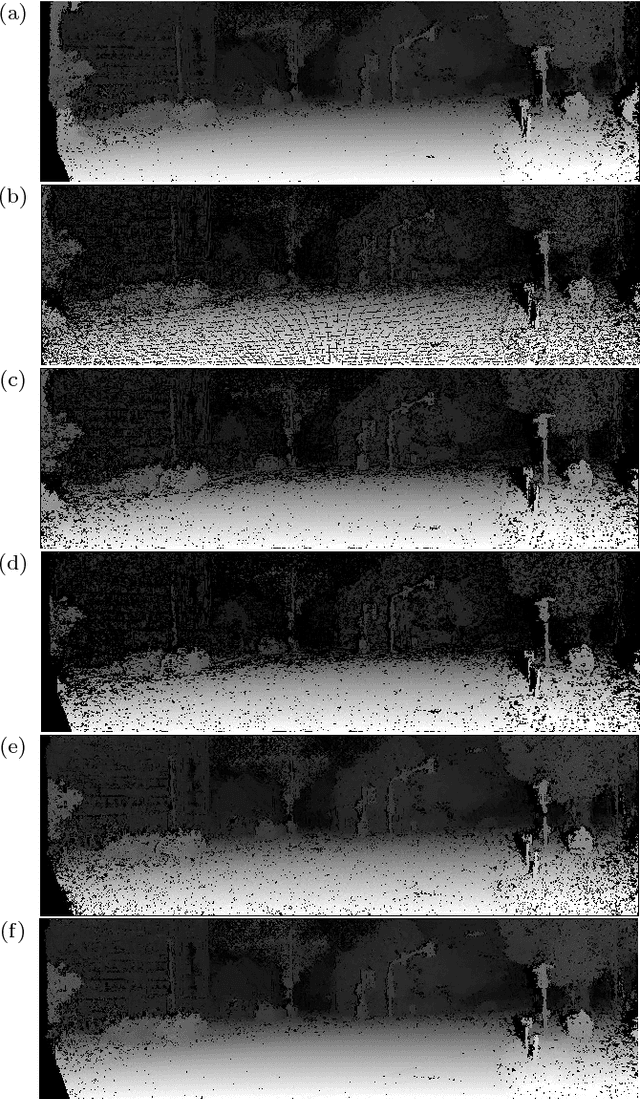

Abstract: Depth estimation from stereo images remains a challenge even though it has been studied for decades. The KITTI benchmark shows that state-of-the-art solutions offer accurate depth estimation, but are still computationally complex and often require a GPU or FPGA implementation. In this paper, we aim to increase the accuracy of depth map estimation and reduce the computational complexity by using information from previous frames. We propose to transform the disparity map of the previous frame into the current frame, relying on the estimated ego-motion, and to use this map as the prediction for a Kalman filter in the disparity space. We then update the predicted disparity map using the newly matched one. In this way we reduce the disparity search space and the flickering between consecutive frames, thus increasing the computational efficiency of the algorithm. Finally, we validate the proposed approach on real-world data from the KITTI benchmark suite and show that the proposed algorithm yields more accurate results while at the same time reducing the disparity search space.
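The predict-then-update idea can be sketched as a per-pixel scalar Kalman filter. This is only a minimal illustration under stated assumptions, not the paper's algorithm: the function names, the independent-pixel noise model, the `process_var` inflation term, and the `k`-sigma search window are all hypothetical choices, and the ego-motion warp that produces `d_pred` is taken as given.

```python
import numpy as np

def kalman_fuse_disparity(d_pred, var_pred, d_meas, var_meas, process_var=0.5):
    """Fuse a predicted disparity map with a newly matched one, pixel by pixel.

    d_pred: disparity warped from the previous frame (NaN where unavailable).
    d_meas: disparity from stereo matching in the current frame (NaN if unmatched).
    Variances may be arrays or scalars; each pixel is treated independently.
    """
    d_pred = np.asarray(d_pred, dtype=float)
    d_meas = np.asarray(d_meas, dtype=float)

    # Predict: the warp adds uncertainty, so inflate the prior variance.
    var_prior = var_pred + process_var

    # Update: standard scalar Kalman gain and correction.
    gain = var_prior / (var_prior + var_meas)
    d_post = d_pred + gain * (d_meas - d_pred)
    var_post = (1.0 - gain) * var_prior

    # Fall back to whichever source exists when the other is missing.
    no_pred = np.isnan(d_pred)
    no_meas = np.isnan(d_meas) & ~no_pred
    d_post = np.where(no_pred, d_meas, d_post)
    var_post = np.where(no_pred, var_meas, var_post)
    d_post = np.where(no_meas, d_pred, d_post)
    var_post = np.where(no_meas, var_prior, var_post)
    return d_post, var_post

def search_range(d_pred, var_pred, k=3.0, d_max=128.0):
    """Restrict matching to a k-sigma window around the predicted disparity."""
    sigma = np.sqrt(var_pred)
    lo = np.clip(d_pred - k * sigma, 0.0, d_max)
    hi = np.clip(d_pred + k * sigma, 0.0, d_max)
    return lo, hi
```

With equal prior and measurement variances the fused disparity is the average of the two, and the posterior variance is halved. A pixel predicted at disparity 20 with variance 4 would be searched only in the window from 14 to 26 instead of the full range, which is where the reduction in search space comes from in this sketch.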
