Antea Hadviger

Feature-based Event Stereo Visual Odometry

Jul 10, 2021

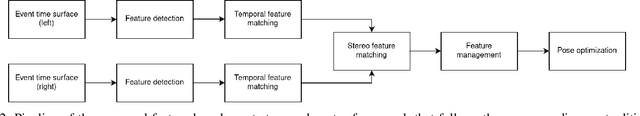

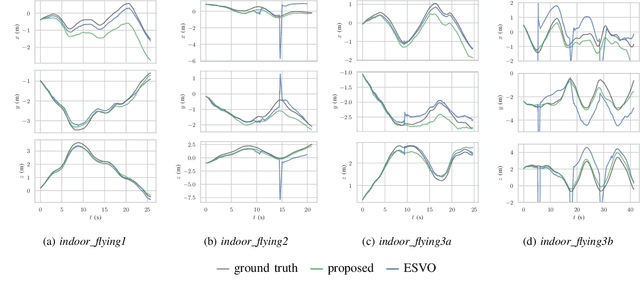

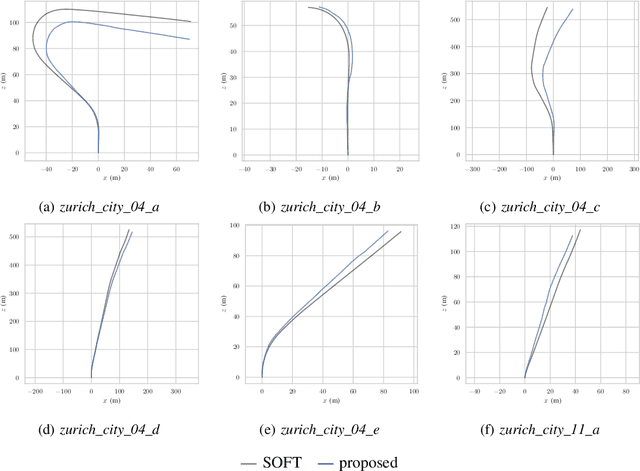

Abstract: Event-based cameras are biologically inspired sensors that output events, i.e., asynchronous pixel-wise brightness changes in the scene. Their high dynamic range and microsecond temporal resolution make them more reliable than standard cameras in environments with challenging illumination and in high-speed scenarios; developing odometry algorithms based solely on event cameras therefore offers exciting new possibilities for autonomous systems and robots. In this paper, we propose a novel stereo visual odometry method for event cameras based on feature detection and matching with careful feature management, while pose estimation is done by reprojection error minimization. We evaluate the performance of the proposed method on two publicly available datasets: MVSEC sequences captured by an indoor flying drone and DSEC outdoor driving sequences. MVSEC offers accurate ground truth from motion capture. DSEC does not offer ground truth, so to obtain a reference trajectory on the standard camera frames we used our SOFT visual odometry, one of the highest-ranking algorithms on the KITTI scoreboards. We compared our method to ESVO, the first and still the only stereo event odometry method, showing on-par performance on the MVSEC sequences, while on the DSEC dataset ESVO, unlike our method, was unable to handle the outdoor driving scenario with default parameters. Furthermore, two important advantages of our method over ESVO are that it adapts the tracking frequency to the asynchronous event rate and does not require initialization.
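To make the phrase "reprojection error minimization" concrete, the following is a minimal sketch of the cost such a stereo pose estimator would typically minimize. It is not the authors' implementation: the rectified pinhole stereo model, the function names, and the parameters `f`, `cx`, `cy`, and `baseline` are all assumptions introduced for illustration.

```python
# Illustrative sketch only: a rectified pinhole stereo model is assumed,
# and all names here are hypothetical, not taken from the paper.

def transform(R, t, p):
    """Apply the rigid transform p' = R p + t (R is a 3x3 nested list)."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project_stereo(p, f, cx, cy, baseline):
    """Project a 3D point (left camera frame) into a rectified stereo pair.

    Returns (u_left, v, u_right); both images share the row v.
    """
    x, y, z = p
    u_left = f * x / z + cx
    v = f * y / z + cy
    u_right = f * (x - baseline) / z + cx
    return u_left, v, u_right


def reprojection_error(R, t, points, observations, f, cx, cy, baseline):
    """Sum of squared pixel residuals between predicted and observed features."""
    total = 0.0
    for p, obs in zip(points, observations):
        pred = project_stereo(transform(R, t, p), f, cx, cy, baseline)
        total += sum((a - b) ** 2 for a, b in zip(pred, obs))
    return total
```

A pose estimator would search for the `R` and `t` that minimize this cost, for example with an iterative nonlinear least-squares solver; the abstract does not state which solver is used.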

Stereo Event Lifetime and Disparity Estimation for Dynamic Vision Sensors

Jul 17, 2019

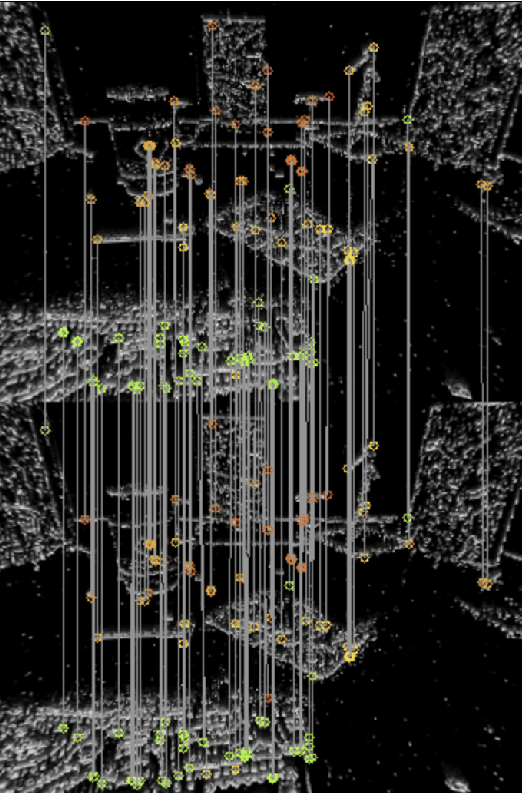

Abstract: Event-based cameras are biologically inspired sensors that output asynchronous pixel-wise brightness changes in the scene, called events. They have a high dynamic range and microsecond temporal resolution, as opposed to standard cameras, which output frames at fixed frame rates and suffer from motion blur. Forming stereo pairs of such cameras can open novel application possibilities, since depth can be readily estimated for each event; however, to fully exploit the asynchronous nature of the sensor and avoid accumulating events over fixed time intervals, stereo event lifetime estimation should be employed. In this paper, we propose a novel method for event lifetime estimation of stereo event cameras, allowing generation of sharp gradient images of events that serve as input to disparity estimation methods. Since a single brightness change triggers events in both event-camera sensors, we propose a method for single-shot event lifetime and disparity estimation, with association via stereo matching. The proposed method is approximately twice as fast as, and more accurate than, estimating lifetimes separately for each sensor and then stereo matching them. Results are validated on real-world data through multiple stereo event-camera experiments.

Computationally efficient dense moving object detection based on reduced space disparity estimation

Sep 21, 2018

Abstract: Computationally efficient moving object detection and depth estimation from a stereo camera is an extremely useful tool for many computer vision applications, including robotics and autonomous driving. In this paper, we show how moving objects can be densely detected by estimating disparity with an algorithm that reduces the complexity and improves the accuracy of stereo matching by relying on information from previous frames. The main idea behind this approach is that, by using the ego-motion estimate and the disparity map of the previous frame, we can set a prior that reduces the complexity of the current frame's disparity estimation and subsequently also allows moving objects in the scene to be detected. For each pixel, we run a Kalman filter that recursively fuses the disparity prediction and reduced-space semi-global matching (SGM) measurements. The proposed algorithm has been implemented and optimized using the streaming single instruction, multiple data (SIMD) instruction set and multi-threading. Furthermore, in order to estimate the process and measurement noise as reliably as possible, we conduct extensive experiments on the KITTI suite using the ground truth obtained by the 3D laser range sensor. Concerning disparity estimation, the proposed method improves on the OpenCV SGM implementation in both speed and accuracy on the KITTI dataset sequences.
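The per-pixel fusion described in this abstract can be illustrated with a scalar Kalman filter. The sketch below is written under stated assumptions rather than taken from the paper: the state is a single disparity value with a variance, the noise values are placeholders, and the innovation gate in `is_moving` is a hypothetical way to flag pixels whose measurement disagrees with the static-scene prediction.

```python
from dataclasses import dataclass


@dataclass
class DisparityState:
    mean: float  # disparity estimate in pixels
    var: float   # variance of the estimate


def predict(state, predicted_disparity, process_var):
    """Prediction step: adopt the disparity propagated from the previous
    frame (for example via ego-motion) and inflate the uncertainty."""
    return DisparityState(predicted_disparity, state.var + process_var)


def update(state, measured_disparity, meas_var):
    """Update step: fuse the prediction with a new disparity measurement."""
    gain = state.var / (state.var + meas_var)
    mean = state.mean + gain * (measured_disparity - state.mean)
    var = (1.0 - gain) * state.var
    return DisparityState(mean, var)


def is_moving(state, measured_disparity, meas_var, gate=3.0):
    """Hypothetical test: flag the pixel when the innovation exceeds
    `gate` standard deviations of the innovation covariance."""
    innovation = measured_disparity - state.mean
    innovation_var = state.var + meas_var
    return innovation * innovation > gate * gate * innovation_var
```

In a dense pipeline one such filter would run per pixel, with the measurement coming from a disparity search restricted to a window around the predicted value; that restriction is what makes the search space "reduced".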
