Ignacio Gómez

GenDA: Generative Data Assimilation on Complex Urban Areas via Classifier-Free Diffusion Guidance

Jan 16, 2026

Abstract:Urban wind flow reconstruction is essential for assessing air quality, heat dispersion, and pedestrian comfort, yet remains challenging when only sparse sensor data are available. We propose GenDA, a generative data assimilation framework that reconstructs high-resolution wind fields on unstructured meshes from limited observations. The model employs a multiscale graph-based diffusion architecture trained on computational fluid dynamics (CFD) simulations and interprets classifier-free guidance as a learned posterior reconstruction mechanism: the unconditional branch learns a geometry-aware flow prior, while the sensor-conditioned branch injects observational constraints during sampling. This formulation enables obstacle-aware reconstruction and generalization across unseen geometries, wind directions, and mesh resolutions without retraining. We consider both sparse fixed sensors and trajectory-based observations using the same reconstruction procedure. When evaluated against supervised graph neural network (GNN) baselines and classical reduced-order data assimilation methods, GenDA reduces the relative root-mean-square error (RRMSE) by 25-57% and increases the structural similarity index (SSIM) by 23-33% across the tested meshes. Experiments are conducted on Reynolds-averaged Navier-Stokes (RANS) simulations of a real urban neighbourhood in Bristol, United Kingdom, at a characteristic Reynolds number of $\mathrm{Re}\approx2\times10^{7}$, featuring complex building geometry and irregular terrain. The proposed framework provides a scalable path toward generative, geometry-aware data assimilation for environmental monitoring in complex domains.

Generative Urban Flow Modeling: From Geometry to Airflow with Graph Diffusion

Dec 09, 2025

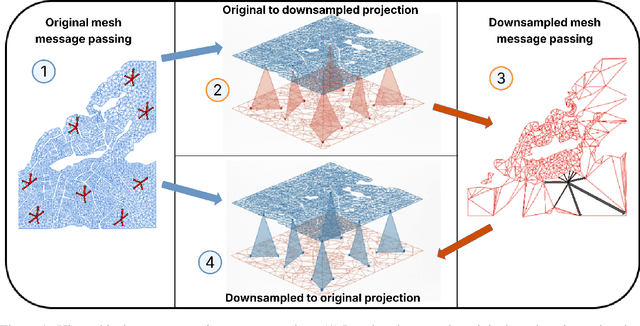

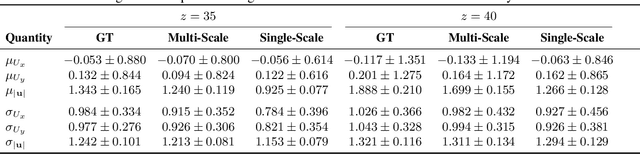

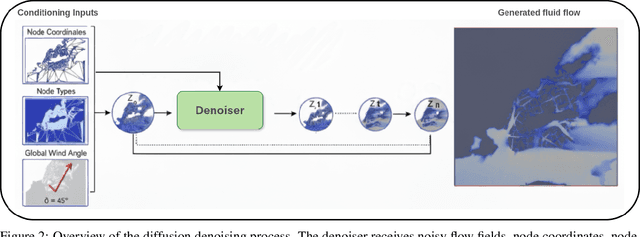

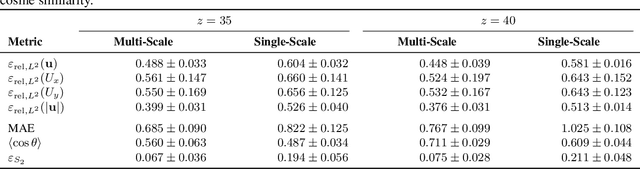

Abstract:Urban wind flow modeling and simulation play an important role in air quality assessment and sustainable city planning. A key challenge for modeling and simulation is handling the complex geometries of the urban landscape. Low-order models are limited in capturing the effects of geometry, while high-fidelity Computational Fluid Dynamics (CFD) simulations are prohibitively expensive, especially across multiple geometries or wind conditions. Here, we propose a generative diffusion framework for synthesizing steady-state urban wind fields over unstructured meshes that requires only geometry information. The framework combines a hierarchical graph neural network with score-based diffusion modeling to generate accurate and diverse velocity fields without requiring temporal rollouts or dense measurements. Trained across multiple mesh slices and wind angles, the model generalizes to unseen geometries, recovers key flow structures such as wakes and recirculation zones, and offers uncertainty-aware predictions. Ablation studies confirm robustness to mesh variation and performance under different inference regimes. This work is the first step towards foundation models for the built environment that can help urban planners rapidly evaluate design decisions under densification and climate uncertainty.

Transformer-Based Fault-Tolerant Control for Fixed-Wing UAVs Using Knowledge Distillation and In-Context Adaptation

Nov 05, 2024

Abstract:This study presents a transformer-based approach for fault-tolerant control in fixed-wing Unmanned Aerial Vehicles (UAVs), designed to adapt in real time to dynamic changes caused by structural damage or actuator failures. Unlike traditional Flight Control Systems (FCSs) that rely on classical control theory and struggle under severe alterations in dynamics, our method directly maps outer-loop reference values -- altitude, heading, and airspeed -- into control commands using the in-context learning and attention mechanisms of transformers, thus bypassing inner-loop controllers and fault-detection layers. Employing a teacher-student knowledge distillation framework, the proposed approach trains a student agent with partial observations by transferring knowledge from a privileged expert agent with full observability, enabling robust performance across diverse failure scenarios. Experimental results demonstrate that our transformer-based controller outperforms industry-standard FCS and state-of-the-art reinforcement learning (RL) methods, maintaining high tracking accuracy and stability in nominal conditions and extreme failure cases, highlighting its potential for enhancing UAV operational safety and reliability.

Towards certification: A complete statistical validation pipeline for supervised learning in industry

Nov 04, 2024

Abstract:Methods of Machine and Deep Learning are gradually being integrated into industrial operations, albeit at different speeds for different types of industries. The aerospace and aeronautical industries have recently developed a roadmap for concepts of design assurance and integration of neural network-related technologies in the aeronautical sector. This paper aims to contribute to this paradigm of AI-based certification in the context of supervised learning, by outlining a complete validation pipeline that integrates deep learning, optimization and statistical methods. This pipeline is composed of a directed graphical model of ten steps. Each of these steps is addressed by merging key concepts from different contributing disciplines (from machine learning or optimization to statistics) and adapting them to an industrial scenario, as well as by developing computationally efficient algorithmic solutions. We illustrate the application of this pipeline in a realistic supervised problem arising in aerostructural design: predicting the likelihood of different stress-related failure modes during different airflight maneuvers based on a (large) set of features characterising the aircraft internal loads and geometric parameters.

Intercepting Unauthorized Aerial Robots in Controlled Airspace Using Reinforcement Learning

Jul 09, 2024

Abstract:The proliferation of unmanned aerial vehicles (UAVs) in controlled airspace presents significant risks, including potential collisions, disruptions to air traffic, and security threats. Ensuring the safe and efficient operation of airspace, particularly in urban environments and near critical infrastructure, necessitates effective methods to intercept unauthorized or non-cooperative UAVs. This work addresses the critical need for robust, adaptive systems capable of managing such threats through the use of Reinforcement Learning (RL). We present a novel approach utilizing RL to train fixed-wing UAV pursuer agents for intercepting dynamic evader targets. Our methodology explores both model-based and model-free RL algorithms, specifically DreamerV3, Truncated Quantile Critics (TQC), and Soft Actor-Critic (SAC). The training and evaluation of these algorithms were conducted under diverse scenarios, including unseen evasion strategies and environmental perturbations. Our approach leverages high-fidelity flight dynamics simulations to create realistic training environments. This research underscores the importance of developing intelligent, adaptive control systems for UAV interception, significantly contributing to the advancement of secure and efficient airspace management. It demonstrates the potential of RL to train systems capable of autonomously achieving these critical tasks.
