Hyunkwang Lee

Towards generative adversarial networks as a new paradigm for radiology education

Dec 04, 2018

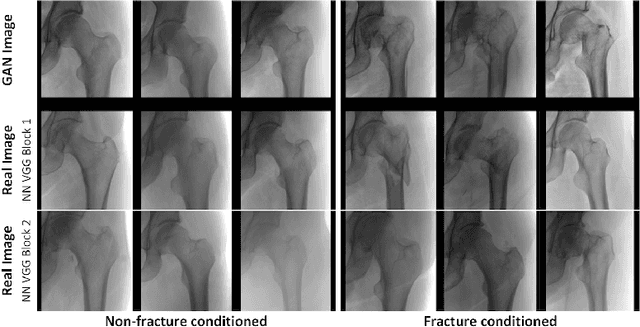

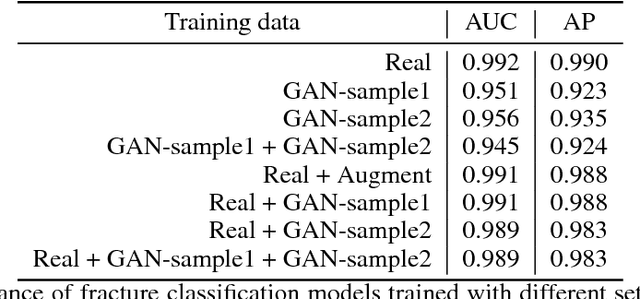

Abstract: Medical students and radiology trainees typically view thousands of images in order to "train their eye" to detect the subtle visual patterns necessary for diagnosis. Nevertheless, infrastructural and legal constraints often make it difficult to access and quickly query an abundance of images with a user-specified feature set. In this paper, we use a conditional generative adversarial network (GAN) to synthesize $1024\times1024$ pixel pelvic radiographs that can be queried with conditioning on fracture status. We demonstrate that the conditional GAN learns features that distinguish fractures from non-fractures by training a convolutional neural network exclusively on images sampled from the GAN and achieving an AUC of $>0.95$ on a held-out set of real images. We conduct additional analysis of the images sampled from the GAN and describe ongoing work to validate educational efficacy.
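The evaluation described above reports an AUC on held-out real images. As a minimal sketch of how such a score can be computed, the snippet below uses the pairwise-comparison definition of AUC. The function name `roc_auc` and the toy labels and scores are illustrative assumptions, not the authors' evaluation code or data.

```python
import numpy as np

def roc_auc(labels, scores):
    """AUC as the probability that a random positive outscores a random negative.

    Ties between a positive and a negative score count as half a win.
    Hypothetical helper for illustration only.
    """
    labels = np.asarray(labels).astype(bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative example")
    # Compare every positive score against every negative score.
    diff = pos[:, None] - neg[None, :]
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return float(wins / diff.size)

# Toy example: 1 = fracture, 0 = no fracture (made-up classifier scores).
toy_labels = [0, 0, 1, 1]
toy_scores = [0.1, 0.4, 0.35, 0.8]
toy_auc = roc_auc(toy_labels, toy_scores)
print(toy_auc)
```

The pairwise form is quadratic in the number of examples, which is fine for a small held-out set; a rank-based formulation would scale better.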

Machine Friendly Machine Learning: Interpretation of Computed Tomography Without Image Reconstruction

Dec 03, 2018

Abstract: Recent advancements in deep learning for automated image processing and classification have accelerated many new applications for medical image analysis. However, most deep learning applications have been developed using reconstructed, human-interpretable medical images. While image reconstruction from raw sensor data is required for the creation of medical images, the reconstruction process only uses a partial representation of all the data acquired. Here we report the development of a system to directly process raw computed tomography (CT) data in sinogram-space, bypassing the intermediary step of image reconstruction. Two classification tasks were evaluated for their feasibility for sinogram-space machine learning: body region identification and intracranial hemorrhage (ICH) detection. Our proposed SinoNet performed favorably compared to conventional reconstructed image-space-based systems for both tasks, regardless of scanning geometries in terms of projections or detectors. Further, SinoNet performed significantly better when using sparsely sampled sinograms than conventional networks operating in image-space. As a result, sinogram-space algorithms could be used in field settings for binary diagnosis testing, triage, and in clinical settings where low radiation dose is desired. These findings also demonstrate another strength of deep learning: it can analyze and interpret sinograms, which are virtually impossible for human experts to read.
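To make "sparsely sampled sinograms" concrete, here is a minimal sketch that treats a sinogram as a 2D array of shape (projections, detectors) and keeps every k-th projection and every m-th detector. The array sizes, the uniform-stride scheme, and the name `subsample_sinogram` are assumptions for illustration; the abstract does not specify how the sparse sinograms were produced.

```python
import numpy as np

def subsample_sinogram(sinogram, projection_step, detector_step):
    """Keep every `projection_step`-th row and `detector_step`-th column.

    Rows are assumed to index projection angles and columns detector
    elements (a hypothetical layout chosen for this sketch).
    """
    sinogram = np.asarray(sinogram)
    if sinogram.ndim != 2:
        raise ValueError("expected a 2D array of shape (projections, detectors)")
    if projection_step < 1 or detector_step < 1:
        raise ValueError("steps must be positive integers")
    return sinogram[::projection_step, ::detector_step]

# Placeholder data standing in for a full sinogram (hypothetical size).
full = np.arange(360 * 720, dtype=np.float32).reshape(360, 720)
sparse = subsample_sinogram(full, projection_step=3, detector_step=3)
print(full.shape, sparse.shape)
```

Fewer projections correspond to fewer X-ray exposures, which is why sparse sampling is linked to lower radiation dose in the abstract.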

Practical Window Setting Optimization for Medical Image Deep Learning

Dec 03, 2018

Abstract: The recent advancements in deep learning have allowed for numerous applications in computed tomography (CT), with potential to improve diagnostic accuracy, speed of interpretation, and clinical efficiency. However, the deep learning community has to date neglected window display settings, a key feature of clinical CT interpretation and an opportunity for additional optimization. Here we propose a window setting optimization (WSO) module that is fully trainable with convolutional neural networks (CNNs) to find optimal window settings for clinical performance. Our approach was inspired by the method commonly used by practicing radiologists to interpret CT images by adjusting window settings to increase the visualization of certain pathologies. Our approach provides optimal window ranges to enhance the conspicuity of abnormalities, and was used to enable performance enhancement for intracranial hemorrhage and urinary stone detection. On each task, the WSO model outperformed models trained over the full range of Hounsfield unit values in CT images, as well as images windowed with pre-defined settings. The WSO module can be readily applied to any analysis of CT images, and can be further generalized to tasks on other medical imaging modalities.
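For readers unfamiliar with window settings, the following is a minimal sketch of conventional fixed windowing: Hounsfield unit (HU) values are clipped to a range defined by a window level (center) and window width, then rescaled to [0, 1]. This is the pre-defined baseline the abstract compares against, not the WSO module itself, in which the window would be learned during training. The function name `apply_window` and the example level and width (a commonly quoted brain preset) are assumptions.

```python
import numpy as np

def apply_window(hu, level, width):
    """Clip HU values to [level - width/2, level + width/2] and scale to [0, 1].

    Hypothetical helper illustrating fixed (non-trainable) windowing.
    """
    hu = np.asarray(hu, dtype=np.float32)
    if width <= 0:
        raise ValueError("window width must be positive")
    lower = level - width / 2.0
    upper = level + width / 2.0
    return (np.clip(hu, lower, upper) - lower) / width

# Made-up HU samples: air, water, soft tissue, upper window edge, dense bone.
samples = np.array([-1000.0, 0.0, 40.0, 80.0, 1000.0])
windowed = apply_window(samples, level=40.0, width=80.0)
print(windowed)
```

Everything below the lower bound collapses to 0 and everything above the upper bound to 1, so a narrow window spends the full display range on a small HU interval.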
