Hyunchul Bae

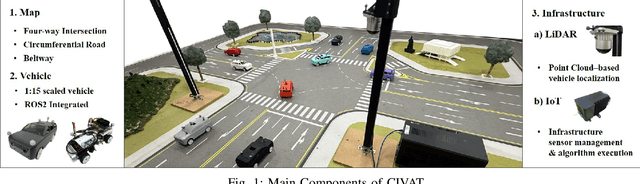

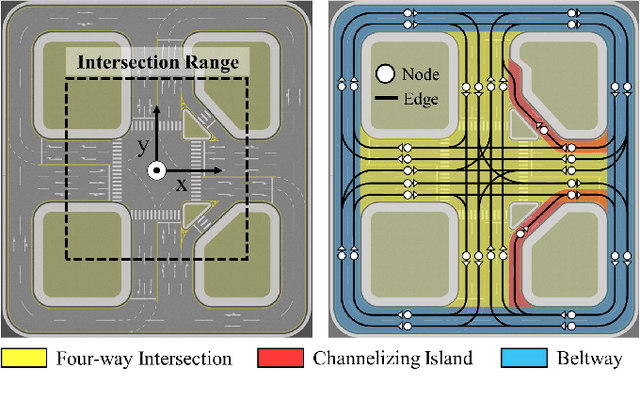

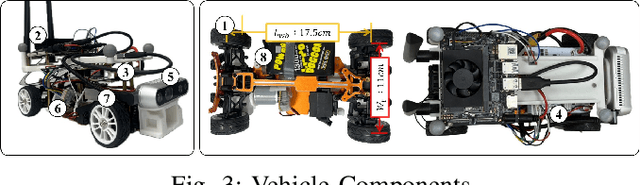

Miniature Testbed for Validating Multi-Agent Cooperative Autonomous Driving

Nov 14, 2025

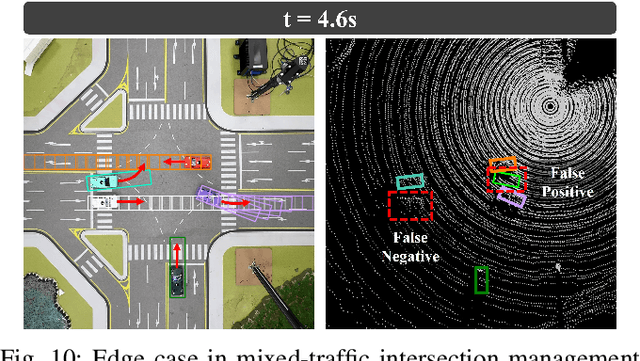

Abstract: Cooperative autonomous driving, which extends vehicle autonomy by enabling real-time collaboration between vehicles and smart roadside infrastructure, remains a challenging yet essential problem. However, none of the existing testbeds employs smart infrastructure equipped with sensing, edge computing, and communication capabilities. To address this gap, we design and implement CIVAT, a 1:15-scale miniature testbed for validating cooperative autonomous driving, consisting of a scaled urban map, autonomous vehicles with onboard sensors, and smart infrastructure. The proposed testbed integrates V2V and V2I communication using the publish-subscribe pattern over a shared Wi-Fi network and the ROS2 framework, enabling information exchange between vehicles and infrastructure to realize cooperative driving functionality. As a case study, we validate the system through infrastructure-based perception and intersection management experiments.

Rethinking the Role of Infrastructure in Collaborative Perception

Oct 15, 2024

Abstract: Collaborative Perception (CP) is a process in which an ego agent receives and fuses sensor information from surrounding vehicles and infrastructure to enhance its perception capability. To evaluate the need for infrastructure equipped with sensors, extensive and quantitative analysis of the role of infrastructure data in CP is crucial, yet remains underexplored. To address this gap, we first quantitatively assess the importance of infrastructure data in existing vehicle-centric CP, where the ego agent is a vehicle. Furthermore, we compare vehicle-centric CP with infra-centric CP, where the ego agent is now the infrastructure, to evaluate the effectiveness of each approach. Our results demonstrate that incorporating infrastructure data improves 3D detection accuracy by up to 10.87%, and infra-centric CP shows enhanced noise robustness and increases accuracy by up to 42.53% compared with vehicle-centric CP.

V2X-M2C: Efficient Multi-Module Collaborative Perception with Two Connections

Jul 16, 2024

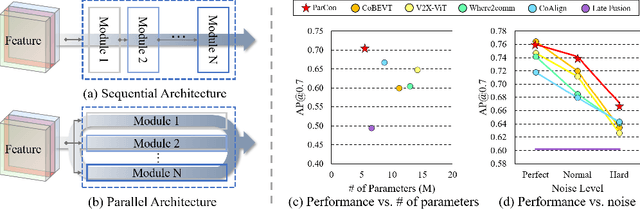

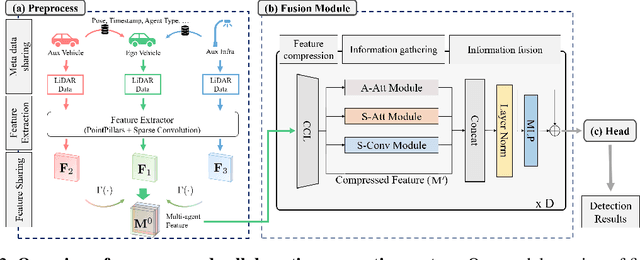

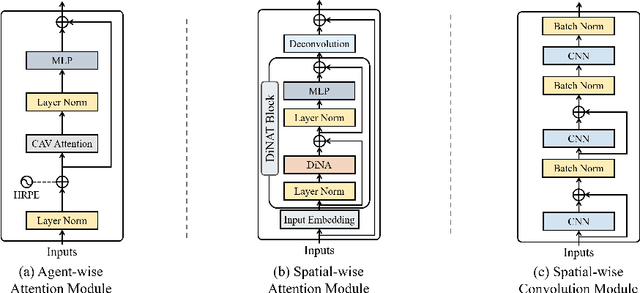

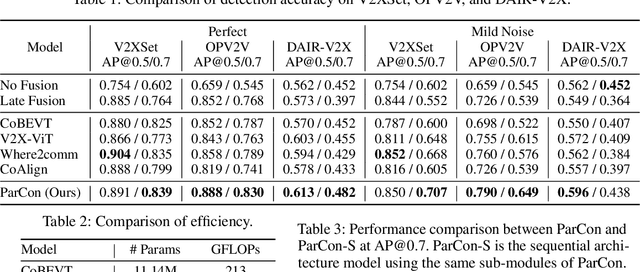

Abstract: In this paper, we investigate improving the perception performance of autonomous vehicles through communication with other vehicles and road infrastructure. To this end, we introduce a collaborative perception model, $\textbf{V2X-M2C}$, consisting of multiple modules that respectively generate inter-agent complementary information, spatial global context, and spatial local information. Motivated by the question of why most existing architectures are sequential, we analyze both the $\textit{sequential}$ and $\textit{parallel}$ connections of the modules. The sequential connection synergizes the modules, whereas the parallel connection improves each module independently. Extensive experiments demonstrate that V2X-M2C achieves state-of-the-art perception performance, increasing detection accuracy by 8.00% to 10.87% and decreasing FLOPs by 42.81% to 52.64%.
