V2X-M2C: Efficient Multi-Module Collaborative Perception with Two Connections

Jul 16, 2024

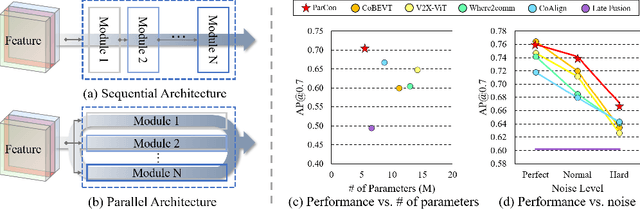

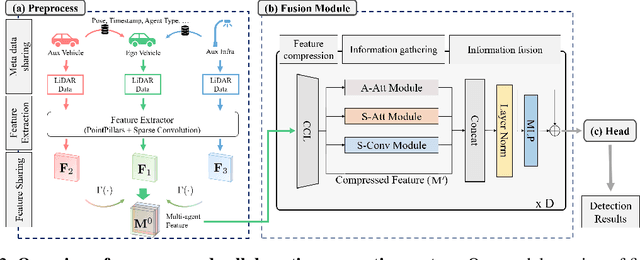

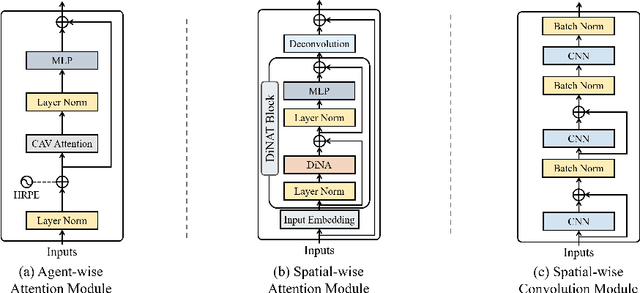

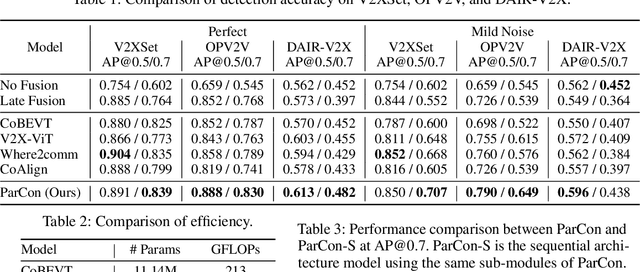

In this paper, we investigate improving the perception performance of autonomous vehicles through communication with other vehicles and road infrastructure. To this end, we introduce a collaborative perception model, $\textbf{V2X-M2C}$, consisting of multiple modules that respectively generate inter-agent complementary information, spatial global context, and spatial local information. Motivated by the question of why most existing architectures are sequential, we analyze both the $\textit{sequential}$ and $\textit{parallel}$ connections of the modules. The sequential connection synergizes the modules, whereas the parallel connection independently improves each module. Extensive experiments demonstrate that V2X-M2C achieves state-of-the-art perception performance, increasing detection accuracy by 8.00% to 10.87% and decreasing FLOPs by 42.81% to 52.64%.
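
The difference between the two connection types can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the three stand-in functions are hypothetical placeholders for the modules named above, the feature is a plain list of floats, and averaging is just one simple way to fuse parallel outputs. The abstract does not specify the actual modules or the fusion rule, so this is not the V2X-M2C implementation.

```python
from typing import Callable, List, Sequence

Feature = List[float]
Module = Callable[[Feature], Feature]


def sequential(modules: Sequence[Module], x: Feature) -> Feature:
    """Sequential connection: each module consumes the previous module's output."""
    for module in modules:
        x = module(x)
    return x


def parallel(modules: Sequence[Module], x: Feature) -> Feature:
    """Parallel connection: every module sees the same input.

    Outputs are fused by element-wise averaging (an assumed fusion rule).
    """
    outputs = [module(x) for module in modules]
    return [sum(values) / len(outputs) for values in zip(*outputs)]


# Hypothetical stand-ins for the three modules (not the paper's operators).
def inter_agent(x: Feature) -> Feature:
    return [v + 1.0 for v in x]


def global_context(x: Feature) -> Feature:
    return [v * 2.0 for v in x]


def local_info(x: Feature) -> Feature:
    return [v - 0.5 for v in x]


modules = [inter_agent, global_context, local_info]
features = [1.0, 2.0]

seq_out = sequential(modules, features)  # modules are chained
par_out = parallel(modules, features)    # modules run independently, then fused
print(seq_out, par_out)
```

In the sequential form, a change to an early module affects everything downstream, which is one way to read the "synergy" described above. In the parallel form, each module's contribution depends only on the shared input.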
