Hyoseok Hwang

Stabilizing the Q-Gradient Field for Policy Smoothness in Actor-Critic

Jan 30, 2026

Abstract:Policies learned via continuous actor-critic methods often exhibit erratic, high-frequency oscillations, making them unsuitable for physical deployment. Current approaches attempt to enforce smoothness by directly regularizing the policy's output. We argue that this approach treats the symptom rather than the cause. In this work, we theoretically establish that policy non-smoothness is fundamentally governed by the differential geometry of the critic. By applying implicit differentiation to the actor-critic objective, we prove that the sensitivity of the optimal policy is bounded by the ratio of the Q-function's mixed-partial derivative (noise sensitivity) to its action-space curvature (signal distinctness). To empirically validate this theoretical insight, we introduce PAVE (Policy-Aware Value-field Equalization), a critic-centric regularization framework that treats the critic as a scalar field and stabilizes its induced action-gradient field. PAVE rectifies the learning signal by minimizing the Q-gradient volatility while preserving local curvature. Experimental results demonstrate that PAVE achieves smoothness and robustness comparable to policy-side smoothness regularization methods, while maintaining competitive task performance, without modifying the actor.
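
The bound mentioned in this abstract can be sketched with a standard implicit-differentiation argument. The derivation below is an illustration under assumptions that are not stated on this page: a deterministic optimal policy $\pi^*(s) = \arg\max_a Q(s, a)$, a twice-differentiable $Q$, and a negative-definite action Hessian $\nabla^2_{aa} Q$ at the optimum. The notation is chosen here for illustration and may differ from the paper's.

```latex
\begin{aligned}
&\text{First-order optimality:} &&\nabla_a Q\bigl(s, \pi^*(s)\bigr) = 0 \\
&\text{Differentiate with respect to } s\text{:} &&\nabla^2_{as} Q + \nabla^2_{aa} Q \,\frac{\partial \pi^*}{\partial s} = 0 \\
&\text{Solve for the sensitivity:} &&\frac{\partial \pi^*}{\partial s} = -\bigl(\nabla^2_{aa} Q\bigr)^{-1} \nabla^2_{as} Q \\
&\text{Bound the spectral norm:} &&\Bigl\lVert \frac{\partial \pi^*}{\partial s} \Bigr\rVert_2 \le \frac{\lVert \nabla^2_{as} Q \rVert_2}{\lambda_{\min}\bigl(-\nabla^2_{aa} Q\bigr)}
\end{aligned}
```

Under these assumptions, the numerator plays the role of the mixed-partial term (noise sensitivity) and the denominator the action-space curvature (signal distinctness) named in the abstract.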

Scale-Consistent State-Space Dynamics via Fractal of Stationary Transformations

Jan 27, 2026

Abstract:Recent deep learning models increasingly rely on depth without structural guarantees on the validity of intermediate representations, rendering early stopping and adaptive computation ill-posed. We address this limitation by formulating a structural requirement for scale-consistent latent dynamics in state-space models across iterative refinement, and derive Fractal of Stationary Transformations (FROST), which enforces a self-similar representation manifold through a fractal inductive bias. Under this geometry, intermediate states correspond to different resolutions of a shared representation, and we provide a geometric analysis establishing contraction and stable convergence across iterations. As a consequence of this scale-consistent structure, halting naturally admits a ranking-based formulation driven by intrinsic feature quality rather than extrinsic objectives. Controlled experiments on ImageNet-100 empirically verify the predicted scale-consistent behavior, showing that adaptive efficiency emerges from the aligned latent geometry.

Enhancing Control Policy Smoothness by Aligning Actions with Predictions from Preceding States

Jan 26, 2026

Abstract:Deep reinforcement learning has proven to be a powerful approach to solving control tasks, but its characteristic high-frequency oscillations make it difficult to apply in real-world environments. While prior methods have addressed action oscillations via architectural or loss-based methods, the latter typically depend on heuristic or synthetic definitions of state similarity to promote action consistency, which often fail to accurately reflect the underlying system dynamics. In this paper, we propose a novel loss-based method by introducing a transition-induced similar state. The transition-induced similar state is defined as the distribution of next states transitioned from the previous state. Since it utilizes only environmental feedback and actually collected data, it better captures system dynamics. Building upon this foundation, we introduce Action Smoothing by Aligning Actions with Predictions from Preceding States (ASAP), an action smoothing method that effectively mitigates action oscillations. ASAP enforces action smoothness by aligning the actions with those taken in transition-induced similar states and by penalizing second-order differences to suppress high-frequency oscillations. Experiments in Gymnasium and Isaac-Lab environments demonstrate that ASAP yields smoother control and improved policy performance over existing methods.
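
The second-order difference penalty mentioned in this abstract can be illustrated with a minimal sketch. This is not the ASAP loss itself: the function name `second_order_penalty`, the squared-norm form, and the averaging over time steps are assumptions made here for illustration only.

```python
import numpy as np


def second_order_penalty(actions: np.ndarray) -> float:
    """Mean squared second-order difference of an action sequence.

    `actions` has shape (T, action_dim). The penalty is an illustrative
    stand-in (assumed form), not the loss defined in the paper.
    """
    if actions.shape[0] < 3:
        # A second-order difference needs at least three consecutive actions.
        return 0.0
    # a_{t+1} - 2 * a_t + a_{t-1} for every interior time step t
    second_diff = actions[2:] - 2.0 * actions[1:-1] + actions[:-2]
    # Squared Euclidean norm per time step, averaged over time
    return float(np.mean(np.sum(second_diff ** 2, axis=-1)))


# A linear ramp has zero second-order difference; an oscillating
# sequence is penalized heavily.
ramp = np.arange(5, dtype=float).reshape(-1, 1)
oscillation = np.array([[1.0], [-1.0], [1.0], [-1.0]])
print(second_order_penalty(ramp))
print(second_order_penalty(oscillation))
```

A penalty of this kind leaves constant-rate action changes unpenalized and targets only changes in the rate of change, which is why it suppresses high-frequency oscillation without forbidding steady motion.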

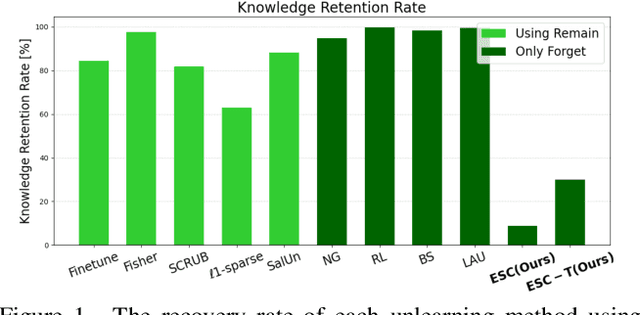

ESC: Erasing Space Concept for Knowledge Deletion

Apr 03, 2025

Abstract:As concerns regarding privacy in deep learning continue to grow, individuals are increasingly apprehensive about the potential exploitation of their personal knowledge in trained models. Despite several research efforts to address this, they often fail to consider the real-world demand from users for complete knowledge erasure. Furthermore, our investigation reveals that existing methods have a risk of leaking personal knowledge through embedding features. To address these issues, we introduce a novel concept of Knowledge Deletion (KD), an advanced task that considers both concerns, and provides an appropriate metric, named Knowledge Retention score (KR), for assessing knowledge retention in feature space. To achieve this, we propose a novel training-free erasing approach named Erasing Space Concept (ESC), which restricts the important subspace for the forgetting knowledge by eliminating the relevant activations in the feature. In addition, we suggest ESC with Training (ESC-T), which uses a learnable mask to better balance the trade-off between forgetting and preserving knowledge in KD. Our extensive experiments on various datasets and models demonstrate that our proposed methods achieve the fastest and state-of-the-art performance. Notably, our methods are applicable to diverse forgetting scenarios, such as facial domain setting, demonstrating the generalizability of our methods. The code is available at http://github.com/KU-VGI/ESC .

ForceGrip: Data-Free Curriculum Learning for Realistic Grip Force Control in VR Hand Manipulation

Mar 11, 2025

Abstract:Realistic hand manipulation is a key component of immersive virtual reality (VR), yet existing methods often rely on a kinematic approach or motion-capture datasets that omit crucial physical attributes such as contact forces and finger torques. Consequently, these approaches prioritize tight, one-size-fits-all grips rather than reflecting users' intended force levels. We present ForceGrip, a deep learning agent that synthesizes realistic hand manipulation motions, faithfully reflecting the user's grip force intention. Instead of mimicking predefined motion datasets, ForceGrip uses generated training scenarios-randomizing object shapes, wrist movements, and trigger input flows-to challenge the agent with a broad spectrum of physical interactions. To effectively learn from these complex tasks, we employ a three-phase curriculum learning framework comprising Finger Positioning, Intention Adaptation, and Dynamic Stabilization. This progressive strategy ensures stable hand-object contact, adaptive force control based on user inputs, and robust handling under dynamic conditions. Additionally, a proximity reward function enhances natural finger motions and accelerates training convergence. Quantitative and qualitative evaluations reveal ForceGrip's superior force controllability and plausibility compared to state-of-the-art methods.

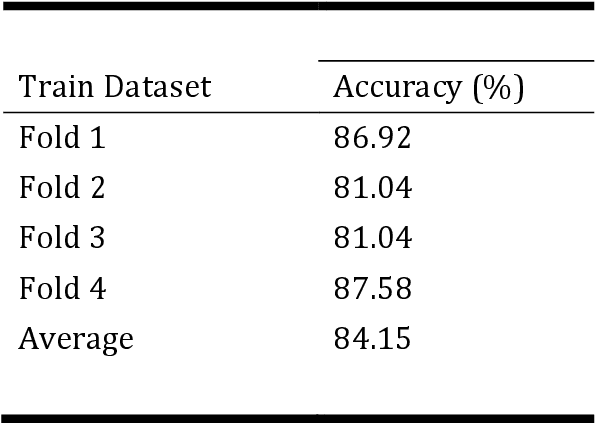

A2XP: Towards Private Domain Generalization

Nov 17, 2023

Abstract:Deep Neural Networks (DNNs) have become pivotal in various fields, especially in computer vision, outperforming previous methodologies. A critical challenge in their deployment is the bias inherent in data across different domains, such as image style, and environmental conditions, leading to domain gaps. This necessitates techniques for learning general representations from biased training data, known as domain generalization. This paper presents Attend to eXpert Prompts (A2XP), a novel approach for domain generalization that preserves the privacy and integrity of the network architecture. A2XP consists of two phases: Expert Adaptation and Domain Generalization. In the first phase, prompts for each source domain are optimized to guide the model towards the optimal direction. In the second phase, two embedder networks are trained to effectively amalgamate these expert prompts, aiming for an optimal output. Our extensive experiments demonstrate that A2XP achieves state-of-the-art results over existing non-private domain generalization methods. The experimental results validate that the proposed approach not only tackles the domain generalization challenge in DNNs but also offers a privacy-preserving, efficient solution to the broader field of computer vision.

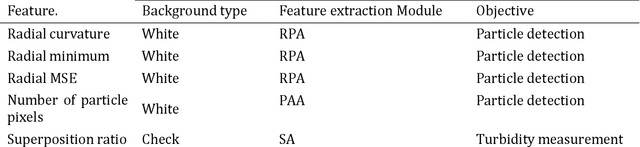

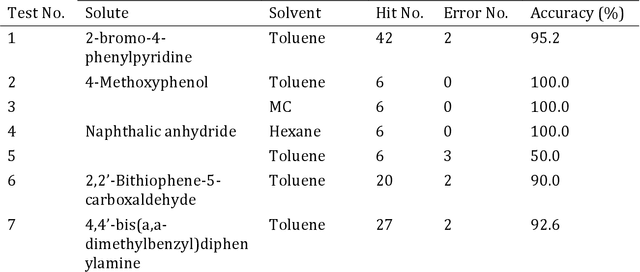

Automated Solubility Analysis System and Method Using Computer Vision and Machine Learning

Apr 07, 2023

Abstract:In this study, a novel active solubility sensing device using computer vision is proposed to improve separation purification performance and prevent malfunctions of separation equipment such as preparative liquid chromatographers and evaporators. The proposed device actively measures the solubility by transmitting a solution using a background image. The proposed system is a combination of a device that uses a background image and a method for estimating the dissolution and particle presence by changing the background image. The proposed device consists of four parts: camera, display, adjustment, and server units. The camera unit is made up of a rear image sensor on a mobile phone. The display unit is comprised of a tablet screen. The adjustment unit is composed of rotating and height-adjustment jigs. Finally, the server unit consists of a socket server for communication between the units and a PC, including an automated solubility analysis system implemented in Python. The dissolution status of the solution was divided into four categories and a case study was conducted. The algorithms were trained based on these results. Six organic materials and four organic solvents were combined with 202 tests to train the developed algorithm. As a result, the evaluation rate for the dissolution state exhibited an accuracy of 95 %. In addition, the device and method must develop a feedback function that can add a solvent or solute after dissolution detection using solubility results for use in autonomous systems, such as a synthetic automation system. Finally, the diversification of the sensing method is expected to extend not only to the solution but also to the solubility and homogeneity analysis of the film.

Decision-BADGE: Decision-based Adversarial Batch Attack with Directional Gradient Estimation

Mar 09, 2023

Abstract:The vulnerability of deep neural networks to adversarial examples has led to the rise in the use of adversarial attacks. While various decision-based and universal attack methods have been proposed, none have attempted to create a decision-based universal adversarial attack. This research proposes Decision-BADGE, which uses random gradient-free optimization and batch attack to generate universal adversarial perturbations for decision-based attacks. Multiple adversarial examples are combined to optimize a single universal perturbation, and the accuracy metric is reformulated into a continuous Hamming distance form. The effectiveness of the accuracy metric as a loss function is demonstrated and mathematically proven. The combination of Decision-BADGE and the accuracy loss function performs better than both score-based image-dependent attack and white-box universal attack methods in terms of attack time efficiency. The research also shows that Decision-BADGE can successfully deceive unseen victims and accurately target specific classes.

3D Display Calibration by Visual Pattern Analysis

Jun 23, 2016

Abstract:Nearly all 3D displays need calibration for correct rendering. More often than not, the optical elements in a 3D display are misaligned from the designed parameter setting. As a result, 3D magic does not perform well as intended. The observed images tend to get distorted. In this paper, we propose a novel display calibration method to fix the situation. In our method, a pattern image is displayed on the panel and a camera takes its pictures twice at different positions. Then, based on a quantitative model, we extract all display parameters (i.e., pitch, slanted angle, gap or thickness, offset) from the observed patterns in the captured images. For high accuracy and robustness, our method analyzes the patterns mostly in frequency domain. We conduct two types of experiments for validation; one with optical simulation for quantitative results and the other with real-life displays for qualitative assessment. Experimental results demonstrate that our method is quite accurate, about a half order of magnitude higher than prior work; is efficient, spending less than 2 s for computation; and is robust to noise, working well in the SNR regime as low as 6 dB.
