Huy X. Pham

Autonomous UAV Navigation Using Reinforcement Learning

Jan 16, 2018

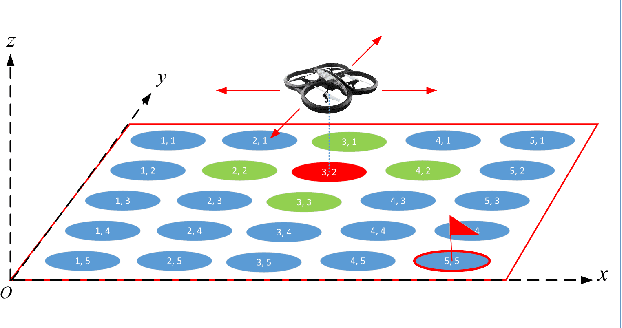

Abstract: Unmanned aerial vehicles (UAVs) are commonly used for missions in unknown environments, where an exact mathematical model of the environment may not be available. This paper provides a framework for using reinforcement learning to allow a UAV to navigate successfully in such environments. We conducted a simulation and a real implementation to show how UAVs can learn to navigate through an unknown environment. Technical aspects of applying a reinforcement learning algorithm to a UAV system, and of UAV flight control, are also addressed. This will enable continued research using UAVs with learning capabilities in more important applications, such as wildfire monitoring or search and rescue missions.

A Distributed Control Framework for a Team of Unmanned Aerial Vehicles for Dynamic Wildfire Tracking

Apr 09, 2017

Abstract: Wildland firefighting is a very dangerous job, and the lack of information about the fire front is one of the main causes of accidents. Using unmanned aerial vehicles (UAVs) to cover a wildfire is promising because they can replace humans in hazardous fire tracking and significantly reduce operating costs. In this paper, we propose a distributed control framework for a team of UAVs that can closely monitor a wildfire in open space and precisely track its development. The UAV team, designed for flexible deployment, can effectively avoid in-flight collisions while cooperating with neighboring UAVs. Experimental results demonstrate the capabilities of the UAV team in covering a spreading wildfire.
