Hong-Wei Wu

APAR: Modeling Irregular Target Functions in Tabular Regression via Arithmetic-Aware Pre-Training and Adaptive-Regularized Fine-Tuning

Dec 14, 2024

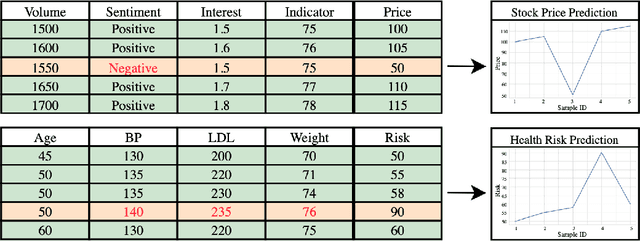

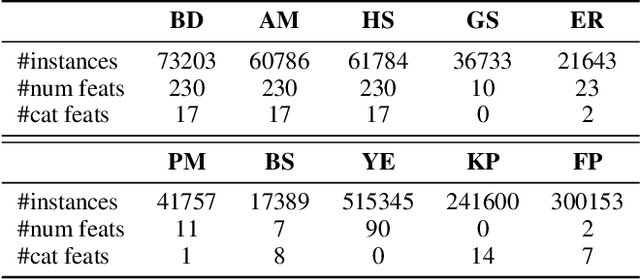

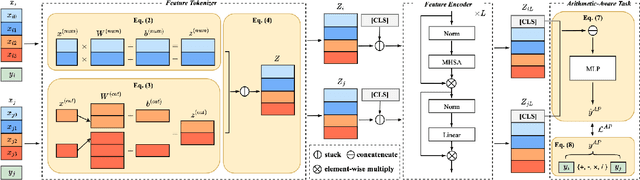

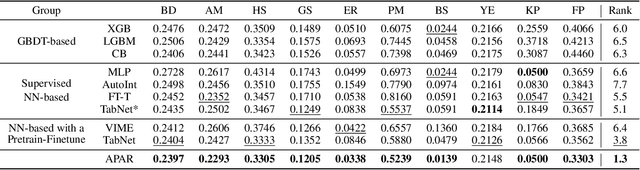

Abstract: Tabular data are fundamental in common machine learning applications, ranging from finance to genomics and healthcare. This paper focuses on tabular regression tasks, a field where deep learning (DL) methods are not consistently superior to machine learning (ML) models because of the irregular target functions inherent in tabular data, in which minor variations in the features can cause sharp changes in the label. To address these issues, we propose a novel Arithmetic-Aware Pre-training and Adaptive-Regularized Fine-tuning framework (APAR), which enables the model to fit irregular target functions in tabular data while reducing the negative impact of overfitting. In the pre-training phase, APAR introduces an arithmetic-aware pretext objective to capture intricate sample-wise relationships from the perspective of continuous labels. In the fine-tuning phase, a consistency-based adaptive regularization technique is proposed to self-learn appropriate data augmentation. Extensive experiments across 10 datasets demonstrate that APAR outperforms existing GBDT-, supervised NN-, and pretrain-finetune NN-based methods in RMSE (+9.43% $\sim$ 20.37%), and empirically validate the effects of the pre-training tasks, including a study of the arithmetic operations. Our code and data are publicly available at https://github.com/johnnyhwu/APAR.
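
To make the idea of an arithmetic-aware pretext objective more concrete, the following is a minimal sketch of how pretext targets could be built from pairs of continuous labels. It is an illustration under stated assumptions, not the APAR implementation: the function name `make_arithmetic_pairs`, the random pairing scheme, and the set of operations are all hypothetical choices made for this example.

```python
import numpy as np

# Hypothetical set of arithmetic operations; the abstract does not list them.
OPS = {"add": np.add, "sub": np.subtract, "mul": np.multiply}

def make_arithmetic_pairs(y, op="add", rng=None):
    """Pair each sample with a random partner and compute a pretext target.

    Assumption: the pretext target is the result of applying `op` to the
    labels of the two paired samples.
    """
    rng = np.random.default_rng() if rng is None else rng
    y = np.asarray(y, dtype=float)
    partner = rng.permutation(len(y))   # index of the partner for each sample
    target = OPS[op](y, y[partner])     # value the model would learn to predict
    return partner, target

labels = np.array([1.0, 2.5, 4.0])
partner, target = make_arithmetic_pairs(labels, op="add", rng=np.random.default_rng(0))
```

A model pre-trained on such targets would take the features of both samples in a pair as input, so it has to relate samples through their labels rather than treat each row in isolation.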

Team Triple-Check at Factify 2: Parameter-Efficient Large Foundation Models with Feature Representations for Multi-Modal Fact Verification

Feb 12, 2023

Abstract: Multi-modal fact verification has become an important but challenging issue on social media due to the mismatch between the text and images in the misinformation of news content, which has been addressed in recent years by considering cross-modalities to identify the veracity of the news. In this paper, we propose the Pre-CoFactv2 framework with new parameter-efficient foundation models for modeling fine-grained text and input embeddings with lightweight parameters, multi-modal multi-type fusion for capturing relations not only within and across modalities but also across different types (i.e., claim and document), and feature representations for explicitly providing metadata for each sample. In addition, we introduce a unified ensemble method to boost model performance by adjusting the importance of each trained model with not only the weights but also the powers. Extensive experiments show that Pre-CoFactv2 outperforms Pre-CoFact by a large margin and achieves new state-of-the-art results at the Factify challenge at AAAI 2023. We further illustrate model variations to verify the relative contributions of different components. Our team won the first prize (F1-score: 81.82%) and we made our code publicly available at https://github.com/wwweiwei/Pre-CoFactv2-AAAI-2023.
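
One way to read "adjusting the importance of each trained model with not only the weights but also the powers" is sketched below. This is an assumption about the form of the ensemble, not the authors' code: the function name `power_weighted_ensemble` and the scoring rule (each model's class probabilities raised to a per-model power, scaled by a per-model weight, then summed) are hypothetical.

```python
import numpy as np

def power_weighted_ensemble(probs, weights, powers):
    """Combine per-model class probabilities into predicted class indices.

    probs:   shape (n_models, n_samples, n_classes)
    weights: shape (n_models,), linear importance of each model
    powers:  shape (n_models,), exponent applied to each model's probabilities
    Assumed scoring rule: sum_i weights[i] * probs[i] ** powers[i].
    """
    probs = np.asarray(probs, dtype=float)
    w = np.asarray(weights, dtype=float)[:, None, None]
    p = np.asarray(powers, dtype=float)[:, None, None]
    scores = (w * probs ** p).sum(axis=0)   # shape (n_samples, n_classes)
    return scores.argmax(axis=1)

# Two models, one sample, two classes.
probs = [[[0.6, 0.4]], [[0.3, 0.7]]]
pred = power_weighted_ensemble(probs, weights=[3.0, 1.0], powers=[1.0, 1.0])
```

Under this reading, a power above 1 sharpens a model's confident predictions relative to its uncertain ones, while the weight scales its overall vote.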
