Homayoun Hamedmoghadam

Learning Network Dismantling without Handcrafted Inputs

Aug 01, 2025

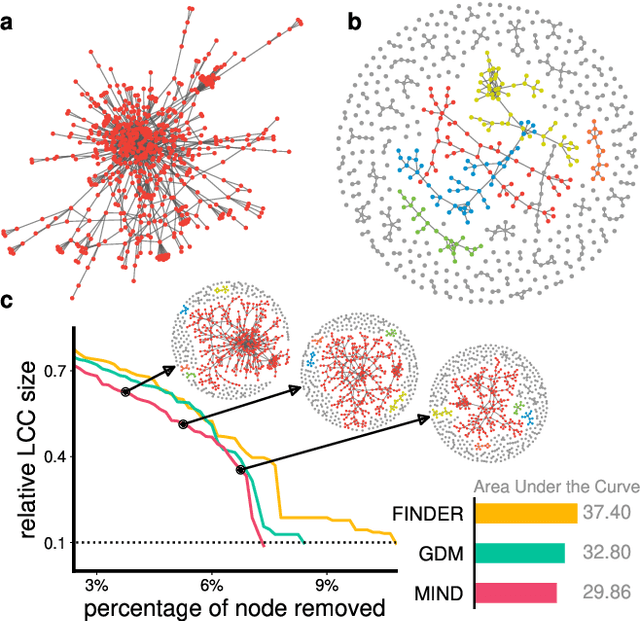

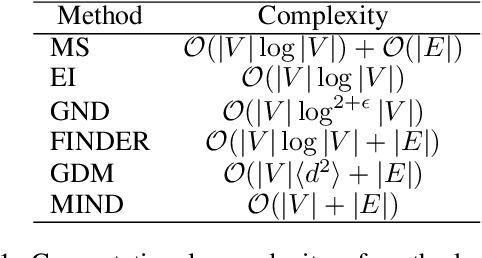

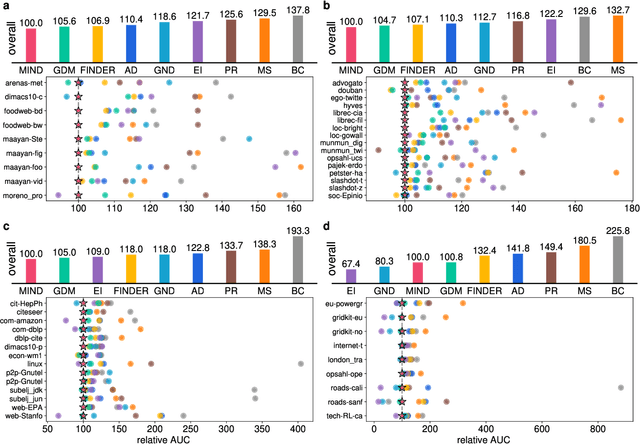

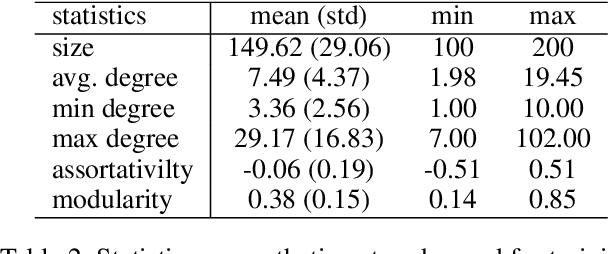

Abstract: The application of message-passing Graph Neural Networks has been a breakthrough for important network science problems. However, competitive performance often relies on handcrafted structural features as inputs, which increases computational cost and introduces bias into otherwise purely data-driven network representations. Here, we eliminate the need for handcrafted features by introducing an attention mechanism and utilizing message-iteration profiles, together with an effective algorithmic approach for generating a structurally diverse training set of small synthetic networks. We thereby build an expressive message-passing framework and use it to efficiently solve the NP-hard problem of Network Dismantling, which is virtually equivalent to vital node identification and has significant real-world applications. Trained solely on diversified synthetic networks, our proposed model, MIND (Message Iteration Network Dismantler), generalizes to large, unseen real networks with millions of nodes, outperforming state-of-the-art network dismantling methods. The increased efficiency and generalizability of the proposed model can be leveraged beyond dismantling in a range of complex network problems.
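To make the dismantling objective concrete, here is a minimal sketch of the problem itself, not of MIND: nodes are removed until the largest connected component falls to a target size. The removal rule is the classic adaptive highest-degree heuristic, which is exactly the kind of handcrafted structural signal the abstract says MIND avoids. The function names, the adjacency-dict input format, and the `target_size` parameter are assumptions made for illustration.

```python
from collections import deque


def largest_component_size(adj, removed):
    """Size of the largest connected component once `removed` nodes are deleted."""
    seen = set(removed)  # treat removed nodes as already visited
    best = 0
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        size = 0
        while queue:  # breadth-first search over the surviving nodes
            node = queue.popleft()
            size += 1
            for nb in adj[node]:
                if nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        best = max(best, size)
    return best


def degree_dismantle(adj, target_size):
    """Baseline heuristic (not MIND): repeatedly remove the node with the
    highest remaining degree until the largest component is <= target_size."""
    if target_size < 0:
        raise ValueError("target_size must be non-negative")
    removed = set()
    order = []
    while largest_component_size(adj, removed) > target_size:
        node = max(
            (n for n in adj if n not in removed),
            # adaptive degree: count only neighbours that are still present
            key=lambda n: sum(1 for nb in adj[n] if nb not in removed),
        )
        removed.add(node)
        order.append(node)
    return order


# Hypothetical toy network: a star with hub 0 and four leaves.
star = {0: [1, 2, 3, 4], 1: [0], 2: [0], 3: [0], 4: [0]}
removal_order = degree_dismantle(star, target_size=1)
```

On the star network, removing the hub alone isolates every leaf, so the removal order contains only node 0. A learned dismantler would replace the degree-based scoring with model-predicted node scores; how MIND computes them is not described in the abstract.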

Reinforcement Learning with Adaptive Control Regularization for Safe Control of Critical Systems

Apr 23, 2024

Abstract: Reinforcement Learning (RL) is a powerful method for controlling dynamic systems, but its learning mechanism can lead to unpredictable actions that undermine the safety of critical systems. Here, we propose RL with Adaptive Control Regularization (RL-ACR), which ensures RL safety by combining the RL policy with a control regularizer that hard-codes safety constraints over forecasted system behaviors. Adaptability is achieved through a learnable "focus" weight trained to maximize the cumulative reward of the policy combination. As the RL policy improves through off-policy learning, the focus weight improves on the initial suboptimal strategy by gradually relying more on the RL policy. We demonstrate the effectiveness of RL-ACR in a critical medical control application and further investigate its performance in four classic control environments.
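One simple way to picture the policy combination is a convex blend of the two proposed actions. The sketch below assumes scalar actions and a weight in [0, 1] placed on the RL action; the abstract does not state the exact form of the combination or whether the paper's focus weight corresponds to this weight or to its complement, so `blend_action` and `rl_weight` are hypothetical names rather than the RL-ACR implementation.

```python
def blend_action(rl_action, control_action, rl_weight):
    """Convex combination of an RL action and a safe controller action.

    rl_weight = 0 follows the control regularizer only;
    rl_weight = 1 follows the RL policy only.
    (Assumed form; not taken from the RL-ACR paper.)
    """
    if not 0.0 <= rl_weight <= 1.0:
        raise ValueError("rl_weight must lie in [0, 1]")
    return rl_weight * rl_action + (1.0 - rl_weight) * control_action


# Early in training: rely mostly on the safe controller.
early = blend_action(rl_action=2.0, control_action=4.0, rl_weight=0.0)
# Later: shift half of the weight toward the RL policy.
later = blend_action(rl_action=2.0, control_action=4.0, rl_weight=0.5)
```

In the scheme the abstract describes, the weight would be learned to maximize cumulative reward rather than set by hand as it is here.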

Australia's long-term electricity demand forecasting using deep neural networks

Jan 10, 2018

Abstract: Accurate prediction of long-term electricity demand plays a significant role in demand-side management and in electricity network planning and operation. Demand over-estimation results in over-investment in network assets, driving up electricity prices, while demand under-estimation may lead to under-investment, resulting in an unreliable and insecure electricity supply. In this manuscript, we apply deep neural networks to predict Australia's long-term electricity demand. A stacked autoencoder is used in combination with multilayer perceptrons or cascade-forward multilayer perceptrons to predict nationwide electricity consumption rates 1 to 24 months ahead. The experimental results show that the deep structures perform better than classical neural networks, especially for prediction horizons of 12 to 24 months.
