Hoang Manh Hung

Emotion Recognition with Incomplete Labels Using Modified Multi-task Learning Technique

Jul 09, 2021

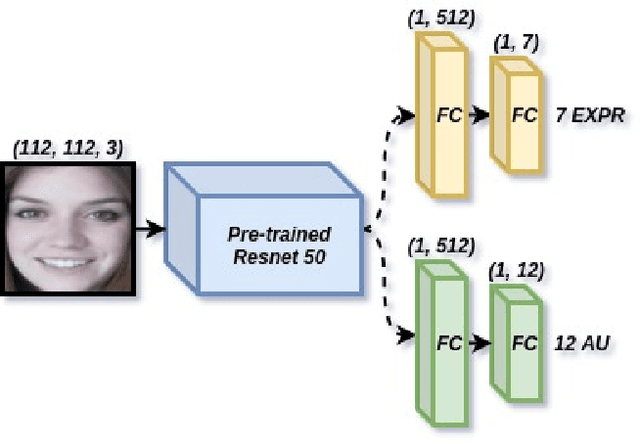

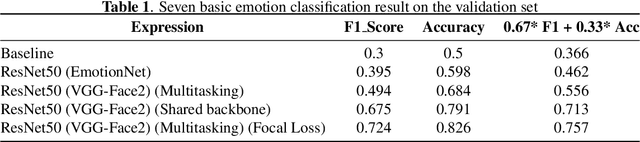

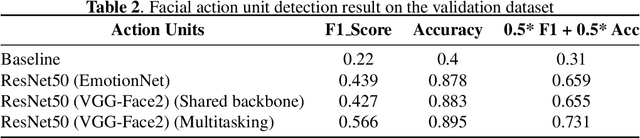

Abstract: Predicting affective information from human faces in the wild, such as the seven basic emotions or facial action units, has attracted growing interest as large annotated datasets have become accessible. In this study, we propose a method that exploits the association between the seven basic emotions and twelve action units in the AffWild2 dataset. The method builds on the ResNet50 architecture and applies multi-task learning to handle the incomplete labels of the two tasks. By combining knowledge from the two correlated tasks, performance on both improves by a large margin over a model trained with only one kind of label.
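
One common way to train on incomplete labels is to mask each task's loss so that a sample only contributes to the tasks it is annotated for. The sketch below illustrates that idea in plain Python; it is not the paper's actual loss, which the abstract does not specify. It assumes that a missing label is represented as `None`, that emotions use softmax cross-entropy and action units use per-unit binary cross-entropy, and that the two terms are combined with a hypothetical weight `au_weight`.

```python
import math
from typing import List, Optional, Sequence, Tuple

# One sample: (emotion_logits, au_logits, emotion_label or None, au_labels or None).
# This layout is an assumption made for illustration only.
Sample = Tuple[Sequence[float], Sequence[float],
               Optional[int], Optional[Sequence[int]]]


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Softmax cross-entropy for one sample (log-sum-exp for stability)."""
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_norm - logits[label]


def binary_cross_entropy(logits: Sequence[float],
                         targets: Sequence[int]) -> float:
    """Mean sigmoid cross-entropy over all action units of one sample."""
    terms = [max(z, 0.0) - z * t + math.log1p(math.exp(-abs(z)))
             for z, t in zip(logits, targets)]
    return sum(terms) / len(terms)


def masked_multitask_loss(batch: List[Sample], au_weight: float = 1.0) -> float:
    """Average each task's loss over the samples that carry that label."""
    emo = [cross_entropy(e, y) for e, _, y, _ in batch if y is not None]
    au = [binary_cross_entropy(a, t) for _, a, _, t in batch if t is not None]
    emo_term = sum(emo) / len(emo) if emo else 0.0
    au_term = sum(au) / len(au) if au else 0.0
    return emo_term + au_weight * au_term


# Usage: one sample labeled only with an emotion, one only with action units.
batch = [
    ([0.0] * 7, [0.0] * 12, 2, None),
    ([0.0] * 7, [0.0] * 12, None, [1] + [0] * 11),
]
loss = masked_multitask_loss(batch)
```

Averaging each term over its own labeled samples, rather than over the whole batch, keeps the scale of each task's loss independent of how many samples happen to be annotated for it.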

Variants of BERT, Random Forests and SVM approach for Multimodal Emotion-Target Sub-challenge

Jul 28, 2020

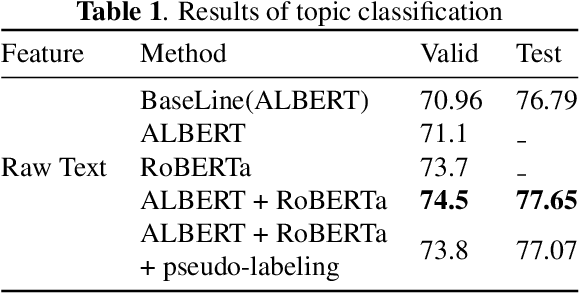

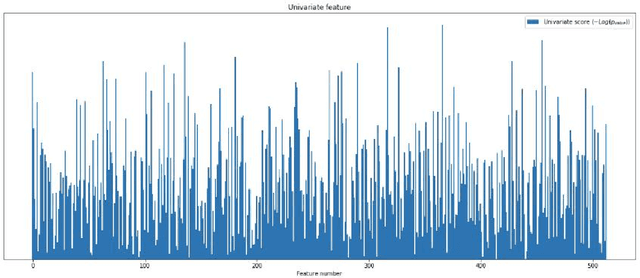

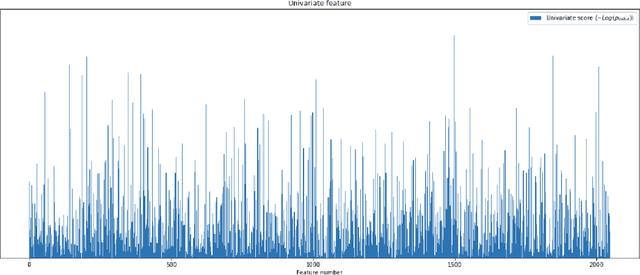

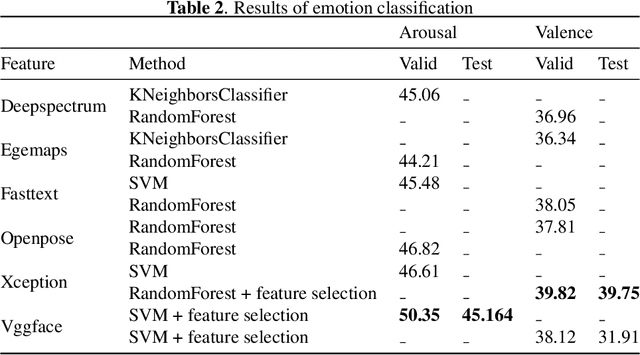

Abstract: Emotion recognition has become a major problem in computer vision in recent years, and researchers have devoted considerable effort to overcoming its difficulties. In the field of affective computing, emotion recognition has a wide range of applications, such as healthcare, robotics, and human-computer interaction. Because of its practical importance for other tasks, many techniques and approaches have been investigated for different problems and data sources. Nevertheless, comprehensively fusing the audio-visual and language modalities so as to benefit from all of them remains an open problem. In this paper, we present and discuss our classification methodology for the MuSe-Topic Sub-challenge, along with the data and results. For topic classification, we ensemble two language models, ALBERT and RoBERTa, to predict 10 topic classes. For the classification of valence and arousal, SVM and random forests are employed in conjunction with feature selection to enhance performance.
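
The abstract does not say how the two language models are combined. A simple and widely used option is soft voting, where the class probabilities of the two models are averaged and the most probable class is chosen. The sketch below assumes each model outputs one logit per topic class; the function names and the `weight_a` parameter are hypothetical, and the logits are made-up values rather than real model outputs.

```python
import math
from typing import List, Sequence, Tuple

NUM_TOPICS = 10  # the abstract states 10 topic classes


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert logits to probabilities (shifted by the max for stability)."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ensemble_predict(logits_a: Sequence[float],
                     logits_b: Sequence[float],
                     weight_a: float = 0.5) -> Tuple[int, List[float]]:
    """Soft voting: weighted average of two models' class probabilities."""
    probs_a = softmax(logits_a)
    probs_b = softmax(logits_b)
    mixed = [weight_a * pa + (1.0 - weight_a) * pb
             for pa, pb in zip(probs_a, probs_b)]
    best = max(range(len(mixed)), key=mixed.__getitem__)
    return best, mixed


# Usage with illustrative logits: model A strongly favors class 3,
# model B weakly favors class 7.
logits_a = [0.0] * NUM_TOPICS
logits_a[3] = 5.0
logits_b = [0.0] * NUM_TOPICS
logits_b[7] = 1.0
predicted, probabilities = ensemble_predict(logits_a, logits_b)
```

With equal weights, the confident model dominates the average, so the ensemble follows model A here and picks class 3. Adjusting `weight_a` shifts trust toward one model or the other.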
