Himanshu Akolkar

Sup3r: A Semi-Supervised Algorithm for increasing Sparsity, Stability, and Separability in Hierarchy Of Time-Surfaces architectures

Apr 15, 2024

Abstract: The Hierarchy Of Time-Surfaces (HOTS) algorithm, a neuromorphic approach for feature extraction from event data, presents promising capabilities but faces challenges in accuracy and compatibility with neuromorphic hardware. In this paper, we introduce Sup3r, a Semi-Supervised algorithm aimed at addressing these challenges. Sup3r enhances sparsity, stability, and separability in the HOTS networks. It enables end-to-end online training of HOTS networks replacing external classifiers, by leveraging semi-supervised learning. Sup3r learns class-informative patterns, mitigates confounding features, and reduces the number of processed events. Moreover, Sup3r facilitates continual and incremental learning, allowing adaptation to data distribution shifts and learning new tasks without forgetting. Preliminary results on N-MNIST demonstrate that Sup3r achieves comparable accuracy to similarly sized Artificial Neural Networks trained with back-propagation. This work showcases the potential of Sup3r to advance the capabilities of HOTS networks, offering a promising avenue for neuromorphic algorithms in real-world applications.

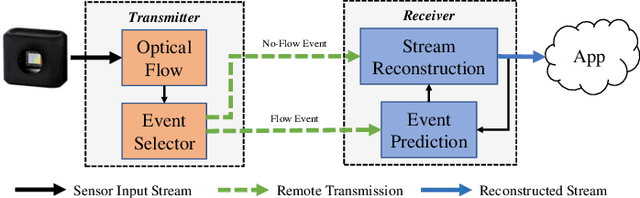

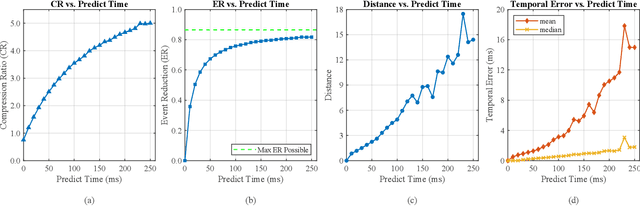

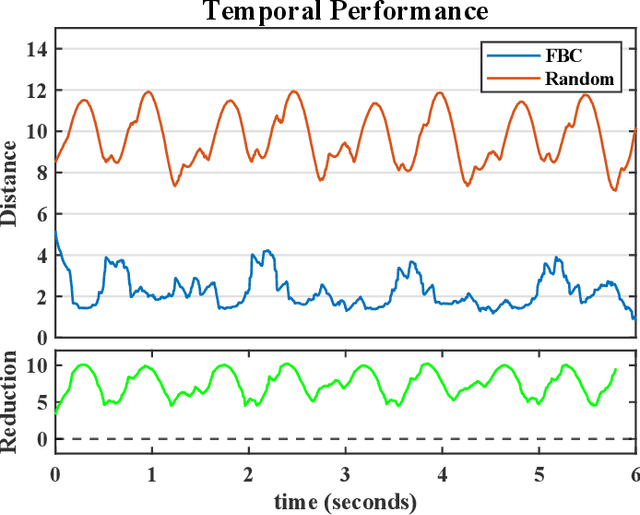

Flow-Based Visual Stream Compression for Event Cameras

Mar 12, 2024

Abstract: As the use of neuromorphic, event-based vision sensors expands, the need for compression of their output streams has increased. While their operational principle ensures event streams are spatially sparse, the high temporal resolution of the sensors can result in high data rates from the sensor depending on scene dynamics. For systems operating in communication-bandwidth-constrained and power-constrained environments, it is essential to compress these streams before transmitting them to a remote receiver. Therefore, we introduce a flow-based method for the real-time asynchronous compression of event streams as they are generated. This method leverages real-time optical flow estimates to predict future events without needing to transmit them, thereby drastically reducing the amount of data transmitted. The flow-based compression introduced is evaluated using a variety of methods including spatiotemporal distance between event streams. The introduced method itself is shown to achieve an average compression ratio of 2.81 on a variety of event-camera datasets with the evaluation configuration used. That compression is achieved with a median temporal error of 0.48 ms and an average spatiotemporal event-stream distance of 3.07. When combined with LZMA compression for non-real-time applications, our method can achieve state-of-the-art average compression ratios ranging from 10.45 to 17.24. Additionally, we demonstrate that the proposed prediction algorithm is capable of performing real-time, low-latency event prediction.

hARMS: A Hardware Acceleration Architecture for Real-Time Event-Based Optical Flow

Dec 13, 2021

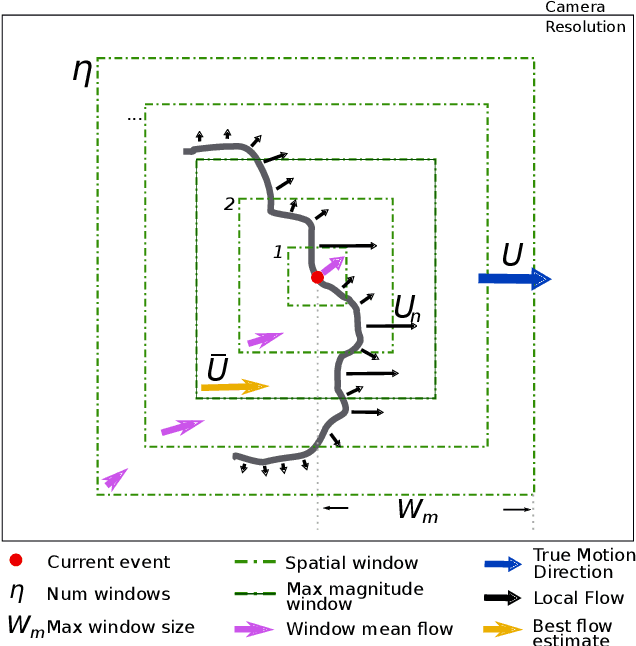

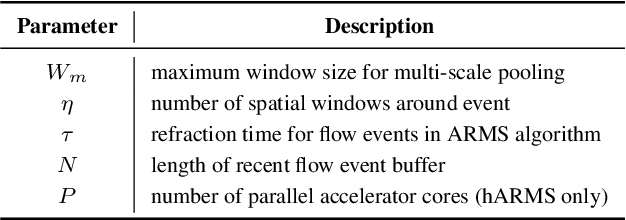

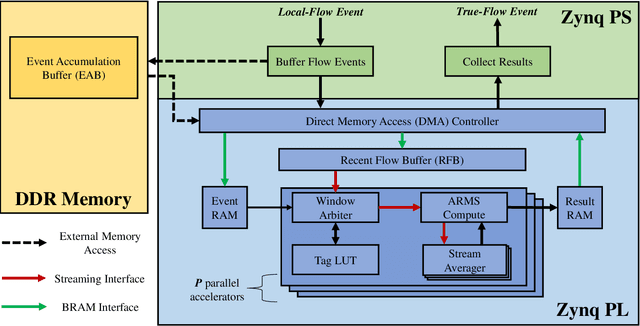

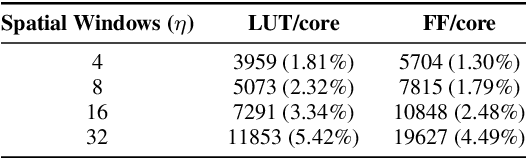

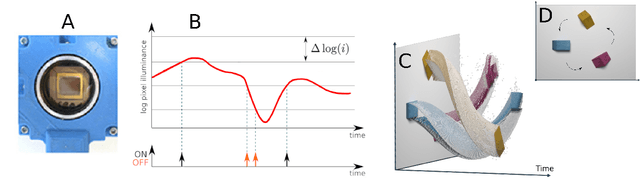

Abstract: Event-based vision sensors produce asynchronous event streams with high temporal resolution based on changes in the visual scene. The properties of these sensors allow for accurate and fast calculation of optical flow as events are generated. Existing solutions for calculating optical flow from event data either fail to capture the true direction of motion due to the aperture problem, do not use the high temporal resolution of the sensor, or are too computationally expensive to be run in real time on embedded platforms. In this research, we first present a faster version of our previous algorithm, ARMS (Aperture Robust Multi-Scale flow). The new optimized software version (fARMS) significantly improves throughput on a traditional CPU. Further, we present hARMS, a hardware realization of the fARMS algorithm allowing for real-time computation of true flow on low-power, embedded platforms. The proposed hARMS architecture targets hybrid system-on-chip devices and was designed to maximize configurability and throughput. The hardware architecture and fARMS algorithm were developed with asynchronous neuromorphic processing in mind, abandoning the common use of an event frame and instead operating using only a small history of relevant events, allowing latency to scale independently of the sensor resolution. This change in processing paradigm improved the estimation of flow directions by up to 73% compared to the existing method and yielded a demonstrated hARMS throughput of up to 1.21 Mevent/s on the benchmark configuration selected. This throughput enables real-time performance and makes it the fastest known realization of aperture-robust, event-based optical flow to date.

Neutron-Induced, Single-Event Effects on Neuromorphic Event-based Vision Sensor: A First Step Towards Space Applications

Jan 29, 2021

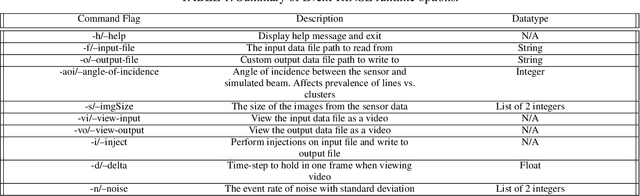

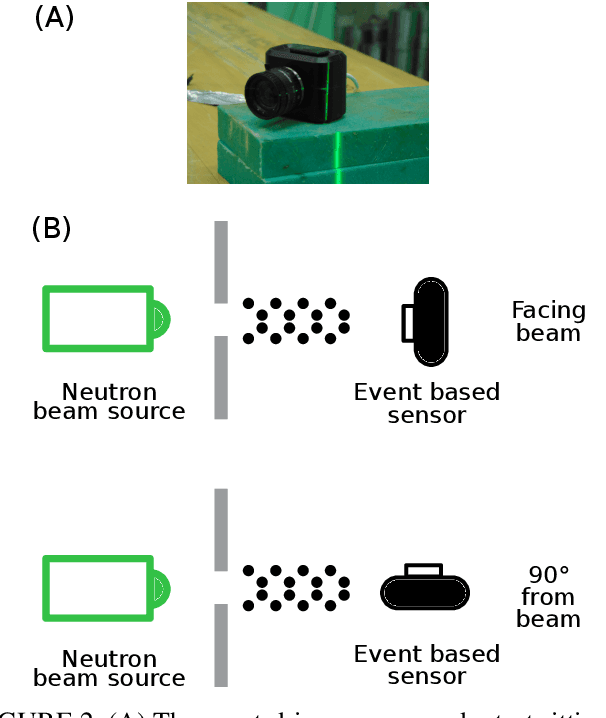

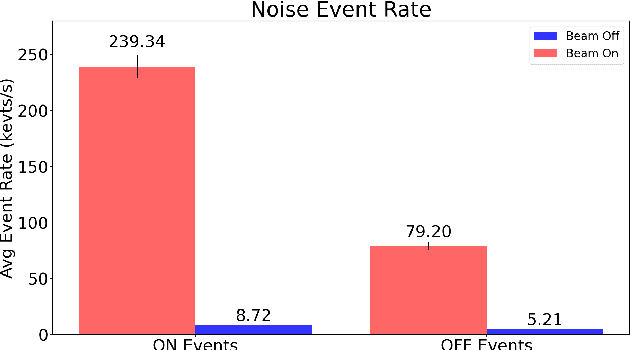

Abstract: This paper studies the suitability of neuromorphic event-based vision cameras for spaceflight, and the effects of neutron radiation on their performance. Neuromorphic event-based vision cameras are novel sensors that implement asynchronous, clockless data acquisition, providing information about the change in illuminance greater than 120 dB with sub-millisecond temporal precision. These sensors have huge potential for space applications as they provide an extremely sparse representation of visual dynamics while removing redundant information, thereby conforming to low-resource requirements. An event-based sensor was irradiated under wide-spectrum neutrons at Los Alamos Neutron Science Center and its effects were classified. We found that the sensor had very fast recovery during radiation, showing high correlation of noise event bursts with respect to source macro-pulses. No significant differences were observed between the number of events induced at different angles of incidence, but significant differences were found in the spatial structure of noise events at different angles. The results show that event-based cameras are capable of functioning in a space-like, radiative environment with a signal-to-noise ratio of 3.355. They also show that radiation-induced noise does not affect event-level computation. We also introduce the Event-based Radiation-Induced Noise Simulation Environment (Event-RINSE), a simulation environment based on the noise-modelling we conducted and capable of injecting the effects of radiation-induced noise from the collected data to any stream of events in order to ensure that developed code can operate in a radiative environment. To the best of our knowledge, this is the first time such an analysis of neutron-induced noise has been performed on a neuromorphic vision sensor, and this study shows the advantage of using such sensors for space applications.

See before you see: Real-time high speed motion prediction using fast aperture-robust event-driven visual flow

Nov 27, 2018

Abstract: Optical flow is a crucial component of the feature space for early visual processing of dynamic scenes, especially in new applications such as self-driving vehicles, drones and autonomous robots. Dynamic vision sensors are well suited for such applications because of their asynchronous, sparse and temporally precise representation of the visual dynamics. Many algorithms proposed for computing visual flow for these sensors suffer from the aperture problem, as the direction of the estimated flow is governed by the curvature of the object rather than the true motion direction. Some methods that do overcome this problem by temporal windowing under-utilize the true precise temporal nature of the dynamic sensors. In this paper, we propose a novel multi-scale, plane-fitting-based visual flow algorithm that is robust to the aperture problem and also computationally fast and efficient. Our algorithm performs well in many scenarios, ranging from a fixed camera recording simple geometric shapes to real-world scenarios such as a camera mounted on a moving car, and can successfully perform event-by-event motion estimation of objects in the scene to allow for predictions of up to 500 ms, i.e., equivalent to 10 to 25 frames with traditional cameras.
